When loading a model, you can now set Max Concurrent Predictions so that multiple requests are processed in parallel instead of being queued. This is currently supported by LM Studio's llama.cpp engine; MLX support is coming soon.

Please make sure your GGUF runtime (LM Studio's llama.cpp runtime) is upgraded to v2.0.0.

Parallel Requests via Continuous Batching

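Continuous batching lets the LM Studio server dynamically combine multiple requests into a single batch. Instead of finishing one request before starting the next, the server generates tokens for several requests in the same step, and a waiting request can join the batch as soon as another one finishes. This enables concurrent workflows and results in higher overall throughput.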
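To make the scheduling idea concrete, here is a toy simulation of continuous batching. It is a simplified model, not LM Studio's implementation: each request is assumed to need a fixed, positive number of decode steps, every step advances all active requests by one token, and a finished request's slot is refilled from the queue before the next step. The function name `simulate` and its parameters are hypothetical; `max_concurrent` plays the role of Max Concurrent Predictions, and setting it to 1 reduces the model to a plain queue.

```python
from collections import deque


def simulate(decode_steps: list[int], max_concurrent: int) -> int:
    """Return how many batched decode steps it takes to finish every request.

    Toy model (an assumption, not LM Studio's scheduler): each entry in
    decode_steps is the number of tokens one request still has to generate.
    """
    if max_concurrent < 1:
        raise ValueError("max_concurrent must be at least 1")
    waiting = deque(decode_steps)
    active: list[int] = []  # remaining tokens for each in-flight request
    steps = 0
    while waiting or active:
        # Continuous batching: fill any free slot from the queue right away.
        while waiting and len(active) < max_concurrent:
            active.append(waiting.popleft())
        # One batched decode step: every active request emits one token.
        active = [remaining - 1 for remaining in active]
        steps += 1
        # Finished requests leave the batch immediately, freeing their slot.
        active = [remaining for remaining in active if remaining > 0]
    return steps


if __name__ == "__main__":
    workload = [8, 2, 2, 2, 2, 8]
    print("queued (1 slot):", simulate(workload, max_concurrent=1))
    print("batched (4 slots):", simulate(workload, max_concurrent=4))
```

For this workload the queued run needs 24 steps and the batched run needs 10. Note that the simulation counts steps, not wall-clock time: on real hardware a batched step costs more than a single-request step, so the actual throughput gain is smaller than the step ratio suggests.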

Setting Max Concurrent Predictions

Open the model loader and toggle on Manually choose model load parameters. Select a model to load, and toggle on Show advanced settings to set Max Concurrent Predictions. By default, Max Concurrent Predictions is set to 4.
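Once the model is loaded, a client can take advantage of the setting by sending requests concurrently. The sketch below uses only the Python standard library and assumes LM Studio's server is running on its default port (1234) with the OpenAI-compatible `/v1/chat/completions` endpoint. `MODEL_ID` is a hypothetical placeholder for the identifier of the model you loaded, and the helper names are illustrative. The thread pool is capped at 4 to match the default Max Concurrent Predictions, so any extra prompts wait on the client side.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Assumptions: default LM Studio server address and OpenAI-compatible route.
BASE_URL = "http://localhost:1234/v1/chat/completions"
MODEL_ID = "your-model-id"  # hypothetical placeholder: use your loaded model's identifier
MAX_CONCURRENT = 4  # keep in sync with Max Concurrent Predictions


def build_request(prompt: str, url: str = BASE_URL, model: str = MODEL_ID) -> urllib.request.Request:
    """Build a POST request for a single chat completion."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ask(prompt: str) -> str:
    """Send one request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(prompt), timeout=300) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


def ask_all(prompts: list[str]) -> list[str]:
    """Send prompts concurrently; results are returned in input order."""
    with ThreadPoolExecutor(max_workers=MAX_CONCURRENT) as pool:
        return list(pool.map(ask, prompts))


if __name__ == "__main__":
    prompts = [f"Give me one fun fact about the number {n}." for n in range(8)]
    for prompt, answer in zip(prompts, ask_all(prompts)):
        print(prompt, "->", answer)
```

A thread pool is used here because each request spends almost all of its time waiting on the server; `asyncio` with an async HTTP client would work equally well.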

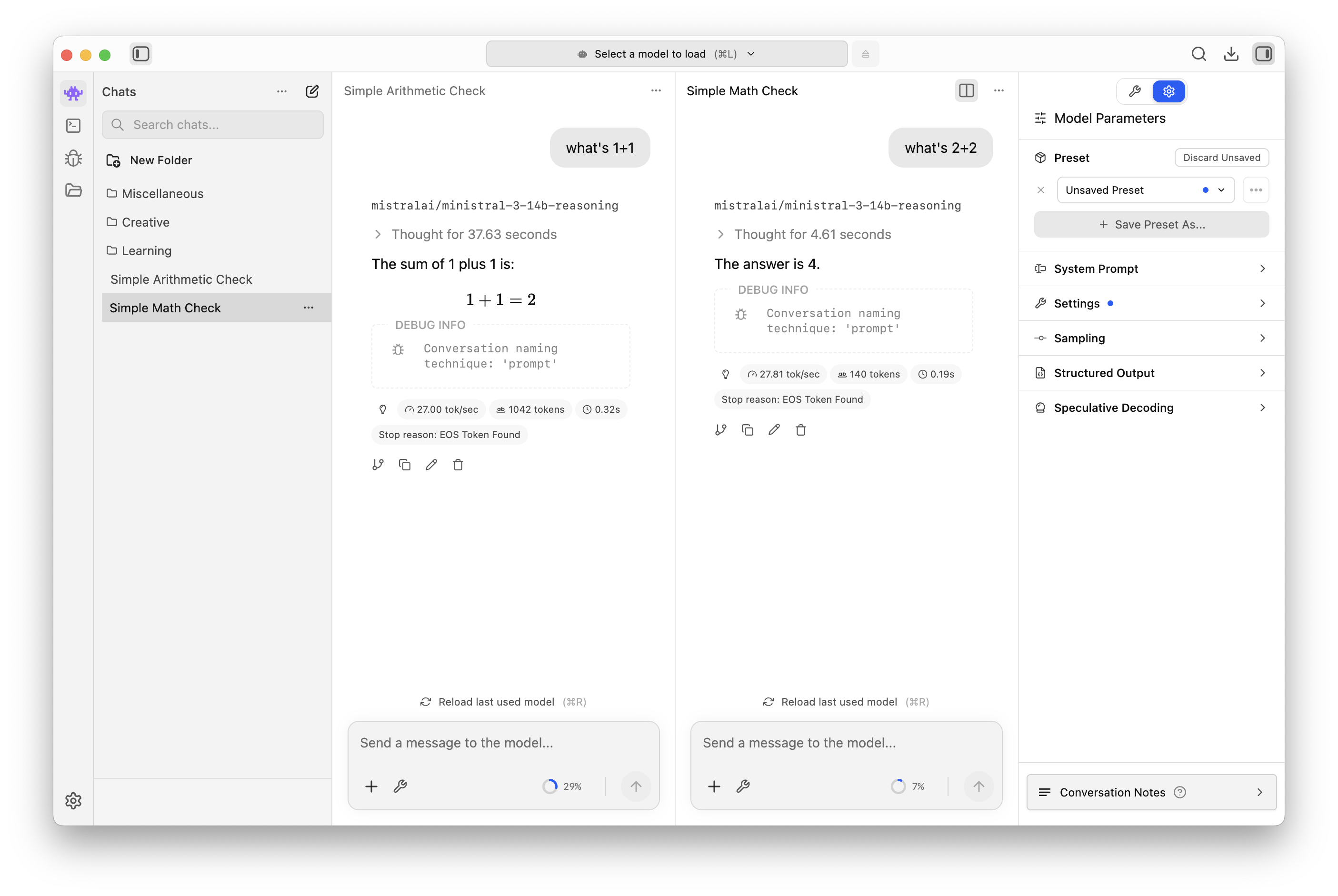

Sending parallel requests to chats in Split View

Use split view in chat to send requests to two chats simultaneously and view the responses side by side.

Send parallel requests using split view in chat
