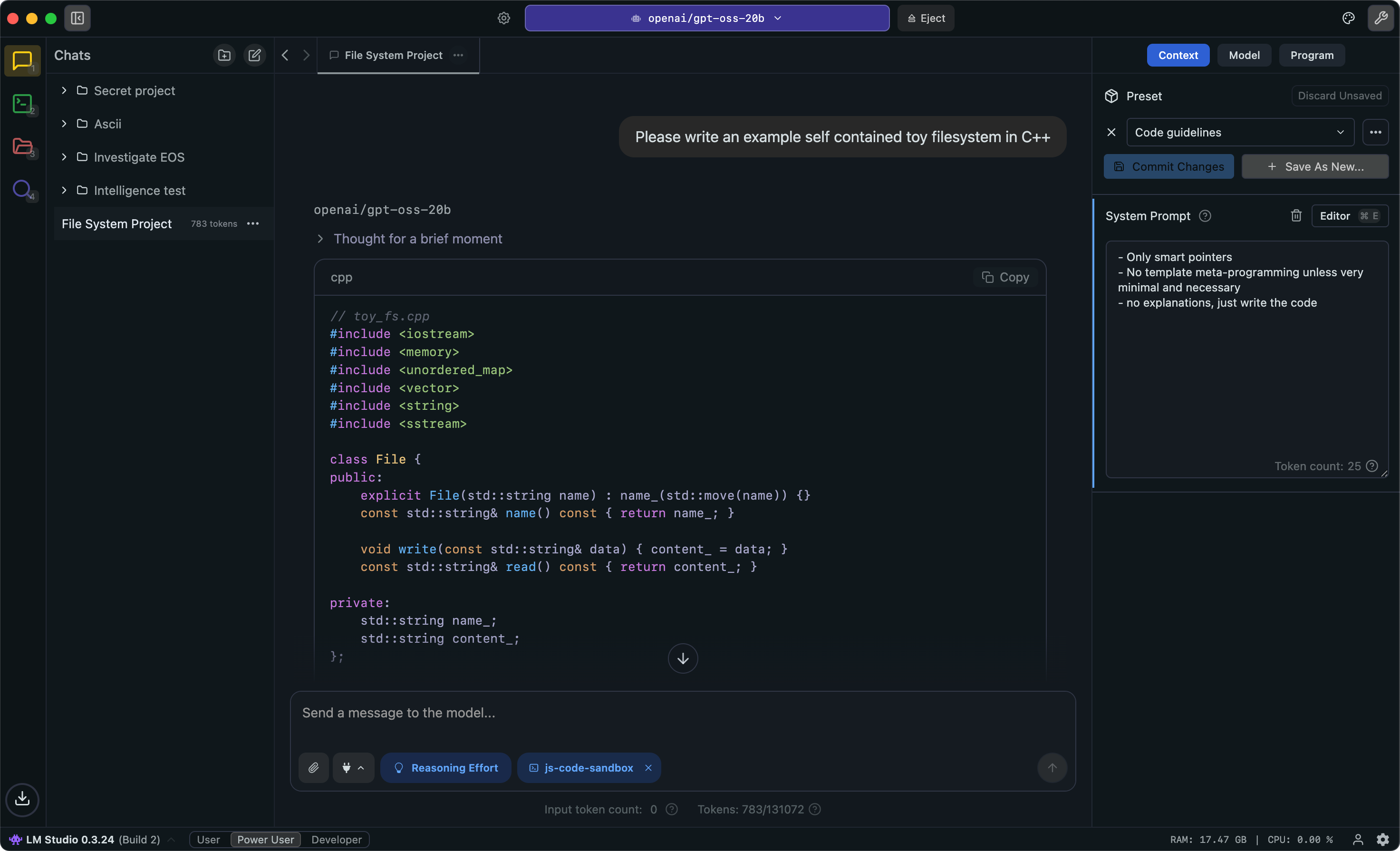

Introducing:

Connect to remote instances of LM Studio, load your models, and use them as if they were local.

Run AI models, locally and privately.

LM Studio is free for home and work use.
