gpt-oss-safeguard

gpt-oss-safeguard-20b and gpt-oss-safeguard-120b are open safety models from OpenAI, built on gpt-oss and trained to classify text content against customizable policies.

Memory Requirements

To run the smallest gpt-oss-safeguard, you need at least 12 GB of RAM. The largest one may require up to 65 GB.

Capabilities

gpt-oss-safeguard models support tool use and reasoning. They are available in GGUF and MLX formats.

About gpt-oss-safeguard

gpt-oss-safeguard is an open-weight reasoning model from OpenAI, trained specifically for safety classification: labeling text content according to customizable policies. As a fine-tuned version of gpt-oss, gpt-oss-safeguard is designed to follow explicit written policies that you provide. This enables bring-your-own-policy Trust & Safety AI, where your own taxonomy, definitions, and thresholds guide classification decisions. Well-crafted policies unlock gpt-oss-safeguard's reasoning capabilities, enabling it to handle nuanced content, explain borderline decisions, and adapt to contextual factors.

Who should use gpt-oss-safeguard?

gpt-oss-safeguard is designed for users who need real-time context and automation at scale, including:

- ML/AI Engineers working on Trust & Safety systems who need flexible content moderation

- Trust & Safety Engineers building or improving moderation, Trust & Safety, or platform integrity pipelines

- Technical Program Managers overseeing content safety initiatives

- Developers building projects/applications that require contextual, policy-based content moderation

- Policy Crafters defining what is accepted by an organization who want to test out policy lines, generate examples, and evaluate content

Run gpt-oss-safeguard locally

Download either the 20B or 120B variant into LM Studio, using the GUI or the lms CLI in your terminal:

    # download the 20b variant
    lms get openai/gpt-oss-safeguard-20b

    # download the 120b variant
    lms get openai/gpt-oss-safeguard-120b

Then use LM Studio's SDK or its OpenAI Responses API compatibility mode to call the model from your own code.
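As a minimal sketch of calling the model from code, the Python below posts a policy and a piece of content to LM Studio's local OpenAI-compatible server. Several details are assumptions rather than facts from this page: it uses the chat completions endpoint instead of the Responses API, it assumes the server is running at the default address `http://localhost:1234/v1`, and it assumes the model identifier exposed by the server matches the name used with `lms get`. The helper names `build_request` and `classify` are illustrative.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"
# Assumption: the served model id matches the name used with `lms get`.
MODEL = "openai/gpt-oss-safeguard-20b"


def build_request(policy: str, content: str, model: str = MODEL) -> dict:
    """Build a chat-completions payload: policy as the system message,
    content to classify as the user message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": policy},
            {"role": "user", "content": content},
        ],
    }


def classify(policy: str, content: str, base_url: str = BASE_URL) -> str:
    """Send the request to the local server and return the raw reply text."""
    payload = json.dumps(build_request(policy, content)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Requires a running LM Studio server with the model loaded.
    print(classify("<your policy text>", "<content to classify>"))
```

Keeping payload construction separate from the network call makes it easy to inspect or log exactly what is sent before involving the server.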

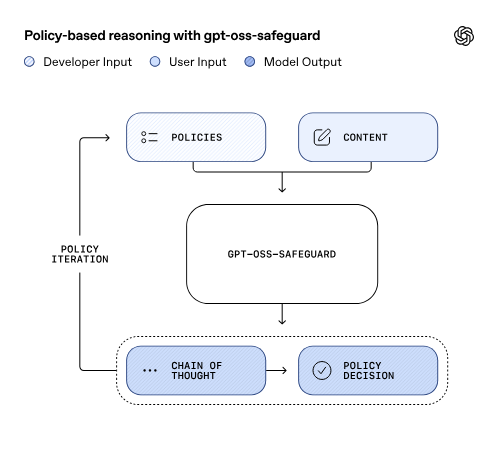

How gpt-oss-safeguard uses Policy Prompts

gpt-oss-safeguard is designed to use your written policy as its governing logic. Most models return a confidence score based on the features they were trained on and must be retrained for any policy change; gpt-oss-safeguard instead makes decisions backed by reasoning within the boundaries of the taxonomy you provide. T&S teams can therefore deploy it as a policy-aligned reasoning layer within existing moderation or compliance systems, and can update or test new policies instantly without retraining the model.
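To illustrate what "updating a policy without retraining" can look like in practice, here is a small Python sketch in which the policy is plain text selected at request time, so swapping policy versions only changes the system message. The policy names, their wording, and the helpers `build_messages` and `parse_label` are hypothetical. The parser also assumes the policy instructs the model to reply with a bare `0` or `1` (optionally prefixed with `Label:`), which is an assumption about your prompt and not a guaranteed output format.

```python
import re
from typing import Optional

# Hypothetical policy versions; in practice these would be full policy documents.
POLICIES = {
    "spam-v1": "Label content 1 if it is unsolicited advertising, else 0.",
    "spam-v2": "Label content 1 if it is unsolicited advertising or a phishing link, else 0.",
}


def build_messages(policy_name: str, content: str) -> list:
    """Pair the selected policy (system message) with the content (user message)."""
    return [
        {"role": "system", "content": POLICIES[policy_name]},
        {"role": "user", "content": content},
    ]


def parse_label(reply: str) -> Optional[int]:
    """Return 0 or 1 if the reply is a bare label such as '1' or 'Label: 0'.

    Returns None for anything else, so unexpected replies can be routed
    to review instead of being silently misread.
    """
    match = re.fullmatch(r"(?:label\s*:\s*)?([01])", reply.strip(), re.IGNORECASE)
    return int(match.group(1)) if match else None


# The same content can be evaluated under two policy versions
# simply by changing which policy text is sent.
old = build_messages("spam-v1", "Click here to verify your account")
new = build_messages("spam-v2", "Click here to verify your account")
```

Returning `None` for unrecognized replies is a deliberate choice in this sketch: a reasoning model may add explanation around its answer, and treating that as "no label" is safer than guessing.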

Example Policy Prompts

Copy this template and customize it in a new named Preset:

    ## Policy Definitions

    ### Key Terms

    **[Term 1]**: [Definition]

    **[Term 2]**: [Definition]

    **[Term 3]**: [Definition]

    ## Content Classification Rules

    ### VIOLATES Policy (Label: 1)

    Content that:

    - [Violation 1]
    - [Violation 2]
    - [Violation 3]
    - [Violation 4]
    - [Violation 5]

    ### DOES NOT Violate Policy (Label: 0)

    Content that is:

    - [Acceptable 1]
    - [Acceptable 2]
    - [Acceptable 3]
    - [Acceptable 4]
    - [Acceptable 5]

    ## Examples

    ### Example 1 (Label: 1)

    **Content**: "[Example]"

    **Expected Response**:

    ### Example 2 (Label: 1)

    **Content**: "[Example]"

    **Expected Response**:

    ### Example 3 (Label: 0)

    **Content**: "[Example]"

    **Expected Response**:

    ### Example 4 (Label: 0)

    **Content**: "[Example]"

    **Expected Response**:
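If you maintain several policies, it may be convenient to generate this template from structured data rather than editing it by hand. The Python sketch below renders the same section layout from a small data class. The class and field names (`Policy`, `Example`, `expected_response`) are hypothetical, and because the template leaves the expected response open, its content is whatever you choose to supply.

```python
from dataclasses import dataclass, field


@dataclass
class Example:
    content: str
    label: int                   # 1 = violates, 0 = does not violate
    expected_response: str = ""  # left to the policy author, as in the template


@dataclass
class Policy:
    terms: dict                  # term -> definition
    violations: list             # bullet items under "VIOLATES Policy"
    acceptable: list             # bullet items under "DOES NOT Violate Policy"
    examples: list = field(default_factory=list)

    def render(self) -> str:
        """Render the policy using the section layout of the template."""
        lines = ["## Policy Definitions", "", "### Key Terms", ""]
        lines += [f"**{term}**: {definition}" for term, definition in self.terms.items()]
        lines += ["", "## Content Classification Rules", ""]
        lines += ["### VIOLATES Policy (Label: 1)", "", "Content that:", ""]
        lines += [f"- {item}" for item in self.violations]
        lines += ["", "### DOES NOT Violate Policy (Label: 0)", "", "Content that is:", ""]
        lines += [f"- {item}" for item in self.acceptable]
        lines += ["", "## Examples"]
        for number, example in enumerate(self.examples, start=1):
            lines += [
                "",
                f"### Example {number} (Label: {example.label})",
                "",
                f'**Content**: "{example.content}"',
                "",
                f"**Expected Response**: {example.expected_response}".rstrip(),
            ]
        return "\n".join(lines)


policy = Policy(
    terms={"Spam": "Unsolicited bulk messages"},
    violations=["Advertises a product to users who did not ask for it"],
    acceptable=["A reply to a direct question about a product"],
    examples=[Example("Buy now!!!", 1, "1")],
)
policy_text = policy.render()
```

The rendered `policy_text` can then be pasted into a Preset or sent as the system prompt.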

License

gpt-oss-safeguard models are provided under the Apache-2.0 license.