###### [👾 LM Studio 0.3.6](blog/lmstudio-v0.3.6) • 2025-01-06

### Tool and Function Calling API

Use any LLM that supports Tool Use and Function Calling through the OpenAI-like API.

Docs: [Tool Use and Function Calling](/docs/api/tools).

---

###### [👾 LM Studio 0.3.5](blog/lmstudio-v0.3.5) • 2024-10-22

### Introducing `lms get`: download models from the terminal

You can now download models directly from the terminal using a keyword

```bash
lms get deepseek-r1
```

or a full Hugging Face URL

```bash
lms get
```

###### We are actively working to add support for more platforms and configurations. If you notice an error on this page, please let us know by opening an issue on [github](https://github.com/lmstudio-ai/lmstudio-bug-tracker).

### macOS

- Chip: Apple Silicon (M1/M2/M3/M4).
- macOS 13.4 or newer is required.
  - For MLX models, macOS 14.0 or newer is required.
- 16GB+ RAM recommended.
  - You may still be able to use LM Studio on 8GB Macs, but stick to smaller models and modest context sizes.
- Intel-based Macs are currently not supported. Chime in [here](https://github.com/lmstudio-ai/lmstudio-bug-tracker/issues/9) if you are interested in this.

### Windows

LM Studio is supported on both x64 and ARM (Snapdragon X Elite) based systems.

- CPU: AVX2 instruction set support is required (for x64)
- RAM: LLMs can consume a lot of RAM. At least 16GB of RAM is recommended.

### Linux

- LM Studio for Linux is distributed as an AppImage.
- Ubuntu 20.04 or newer is required
- x64 only, aarch64 not yet supported
- Ubuntu versions newer than 22 are not well tested. Let us know if you're running into issues by opening a bug [here](https://github.com/lmstudio-ai/lmstudio-bug-tracker).
- CPU:
  - LM Studio ships with AVX2 support by default

## Offline Operation

> LM Studio can operate entirely offline, just make sure to get some model files first.

```lms_notice
In general, LM Studio does not require the internet in order to work. This includes core functions like chatting with models, chatting with documents, or running a local server, none of which require the internet.
```

### Operations that do NOT require connectivity

#### Using downloaded LLMs

Once you have an LLM on your machine, the model will run locally and you should be good to go entirely offline. Nothing you enter into LM Studio when chatting with LLMs leaves your device.

#### Chatting with documents (RAG)

When you drag and drop a document into LM Studio to chat with it or perform RAG, that document stays on your machine.
All document processing is done locally, and nothing you upload into LM Studio leaves the application.

#### Running a local server

LM Studio can be used as a server to provide LLM inferencing on localhost or the local network. Requests to LM Studio use OpenAI endpoints and return OpenAI-like response objects, but stay local.

### Operations that require connectivity

Several operations, described below, rely on internet connectivity. Once you get an LLM onto your machine, you should be good to go entirely offline.

#### Searching for models

When you search for models in the Discover tab, LM Studio makes network requests (e.g. to huggingface.co). Search will not work without an internet connection.

#### Downloading new models

In order to download models you need a stable (and decently fast) internet connection. You can also 'sideload' models (use models that were procured outside the app). See instructions for [sideloading models](advanced/sideload).

#### Discover tab's model catalog

Any given version of LM Studio ships with an initial model catalog built-in. The entries in the catalog are typically the state of the online catalog near the moment we cut the release. However, in order to show stats and download options for each model, we need to make network requests (e.g. to huggingface.co).

#### Downloading runtimes

[LM Runtimes](advanced/lm-runtimes) are individually packaged software libraries, or LLM engines, that allow running certain formats of models (e.g. `llama.cpp`). As of LM Studio 0.3.0 (read the [announcement](https://lmstudio.ai/blog/lmstudio-v0.3.0)) it's easy to download and even hot-swap runtimes without a full LM Studio update. To check for available runtimes, and to download them, we need to make network requests.

#### Checking for app updates

On macOS and Windows, LM Studio has a built-in app updater. The Linux in-app updater [is in the works](https://github.com/lmstudio-ai/lmstudio-bug-tracker/issues/89).
When you open LM Studio, the app updater will make a network request to check if there are any new updates available. If there's a new version, the app will show you a notification to update now or later. Without internet connectivity you will not be able to update the app via the in-app updater.

## basics

## Get started with LM Studio

> Download and run Large Language Models (LLMs) like Llama 3.1, Phi-3, and Gemma 2 locally in LM Studio

You can use openly available Large Language Models (LLMs) like Llama 3.1, Phi-3, and Gemma 2 locally in LM Studio, leveraging your computer's CPU and optionally the GPU.

Double-check that your computer meets the minimum [system requirements](/docs/system-requirements).
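The system requirements list AVX2 instruction set support for x64 CPUs. On Linux, one way to check is to look for the `avx2` flag in `/proc/cpuinfo`. The following is a minimal sketch under that assumption; `cpu_flags` and `has_avx2` are illustrative helper names, not part of LM Studio, and the check does not apply to macOS or Windows, which expose CPU features differently.

```python
from pathlib import Path
from typing import Set


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Collect the feature flags listed on "flags" lines of /proc/cpuinfo-style text."""
    flags: Set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def has_avx2(cpuinfo_text: str) -> bool:
    """True if any "flags" line advertises the avx2 instruction set."""
    return "avx2" in cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # Linux only
        print("AVX2 supported" if has_avx2(cpuinfo.read_text()) else "AVX2 not found")
    else:
        print("/proc/cpuinfo is not available on this system")
```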

```lms_info
You might sometimes see terms such as `open-source models` or `open-weights models`. Different models might be released under different licenses and varying degrees of 'openness'. In order to run a model locally, you need to be able to get access to its "weights", often distributed as one or more files that end with `.gguf`, `.safetensors` etc.
```

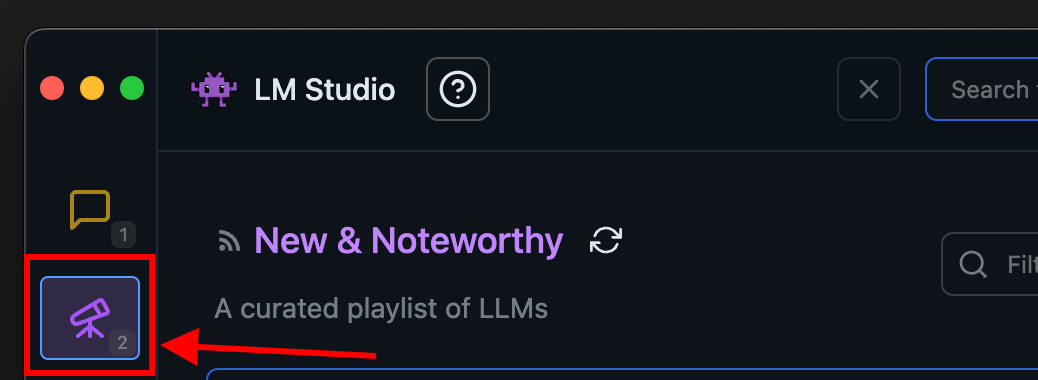

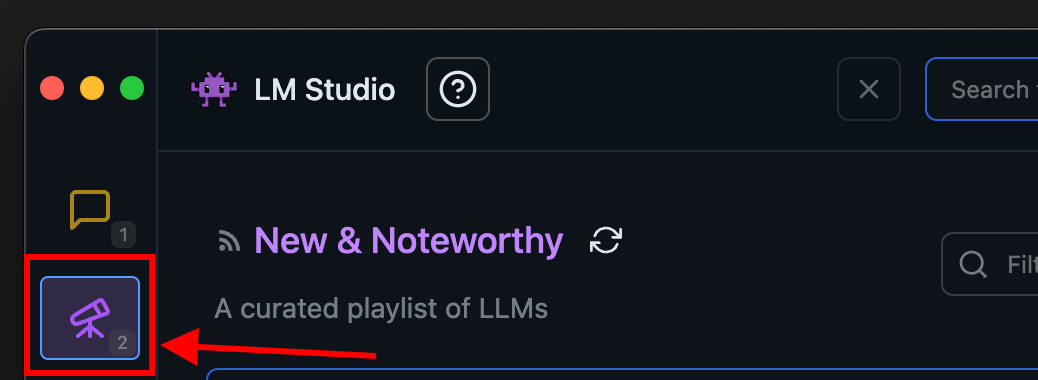

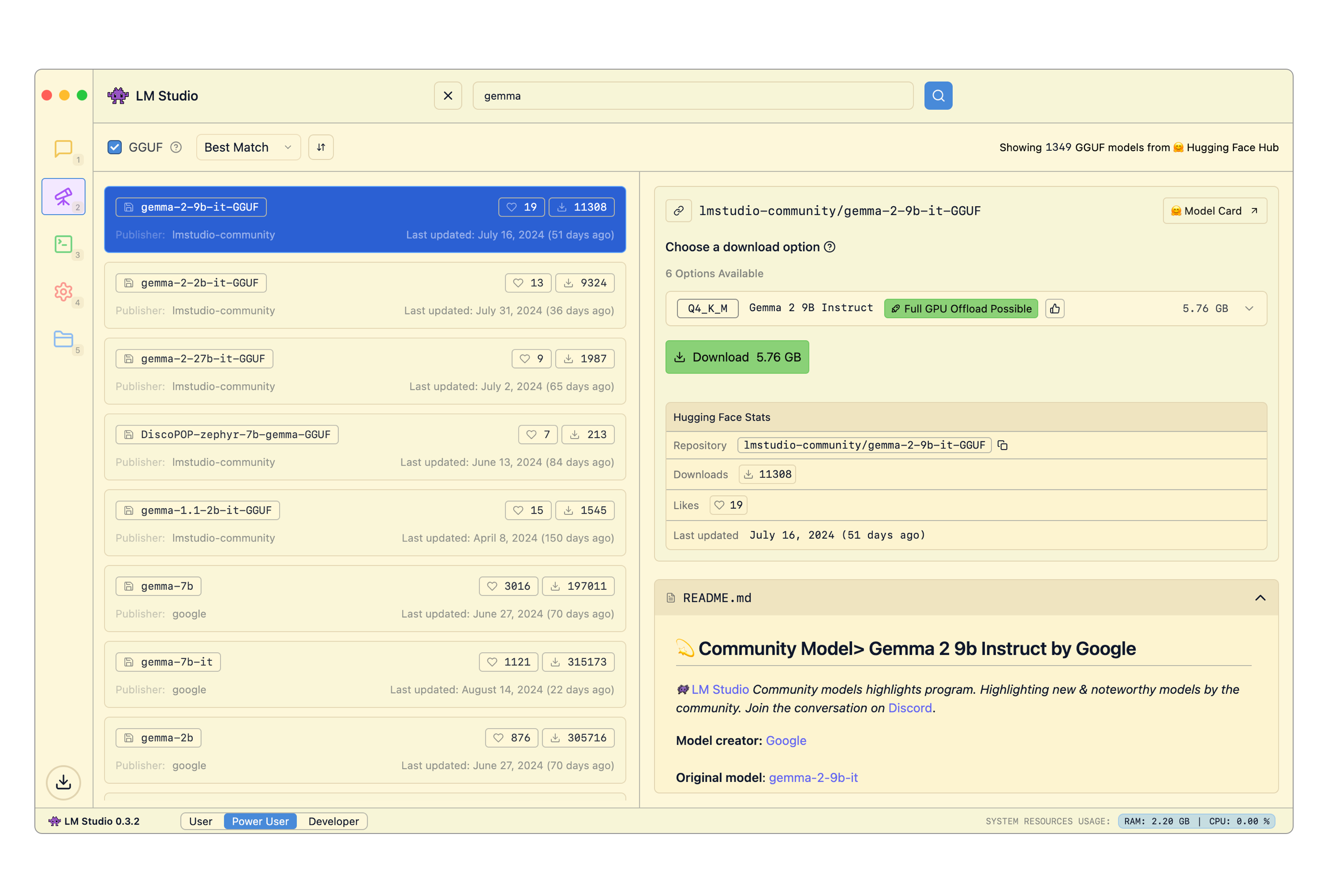

## Getting up and running

First, **install the latest version of LM Studio**. You can get it from [here](/download).

Once you're all set up, you need to **download your first LLM**.

### 1. Download an LLM to your computer

Head over to the Discover tab to download models. Pick one of the curated options or search for models by search query (e.g. `"Llama"`). See more in-depth information about downloading models [here](/docs/basics/download-models).

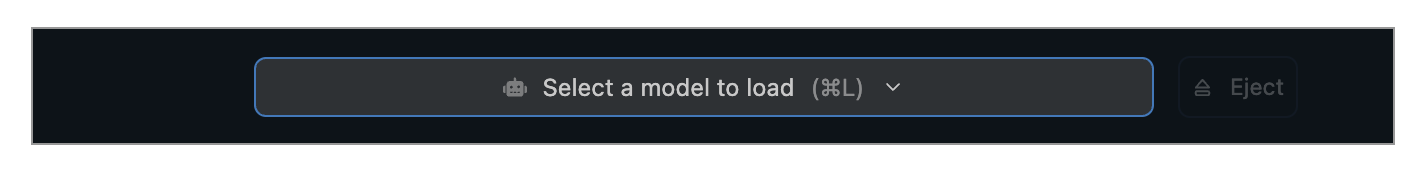

### 2. Load a model to memory

Head over to the **Chat** tab, and

1. Open the model loader

2. Select one of the models you downloaded (or [sideloaded](/docs/advanced/sideload)).

3. Optionally, choose load configuration parameters.

##### What does loading a model mean?

Loading a model typically means allocating memory to be able to accommodate the model's weights and other parameters in your computer's RAM.
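As a rough rule of thumb, the weights alone take about (number of parameters) × (bits per weight) / 8 bytes. The sketch below applies that arithmetic; `estimate_weight_memory_gb` is a hypothetical helper, and the result is only a lower bound, since the context window, runtime buffers, and file-format overhead need memory on top of it.

```python
def estimate_weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in GB (1 GB = 10**9 bytes).

    Each parameter takes bits_per_weight / 8 bytes. Treat the result as a
    lower bound: loading a model also allocates memory for context and buffers.
    """
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Illustrative 7-billion-parameter model at a few common precisions.
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: ~{estimate_weight_memory_gb(7e9, bits):.1f} GB")
```

For a 7B model this prints about 14.0 GB at 16-bit, 7.0 GB at 8-bit, and 3.5 GB at 4-bit, which is why lower-precision files are the usual choice on machines with 8 to 16 GB of RAM.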

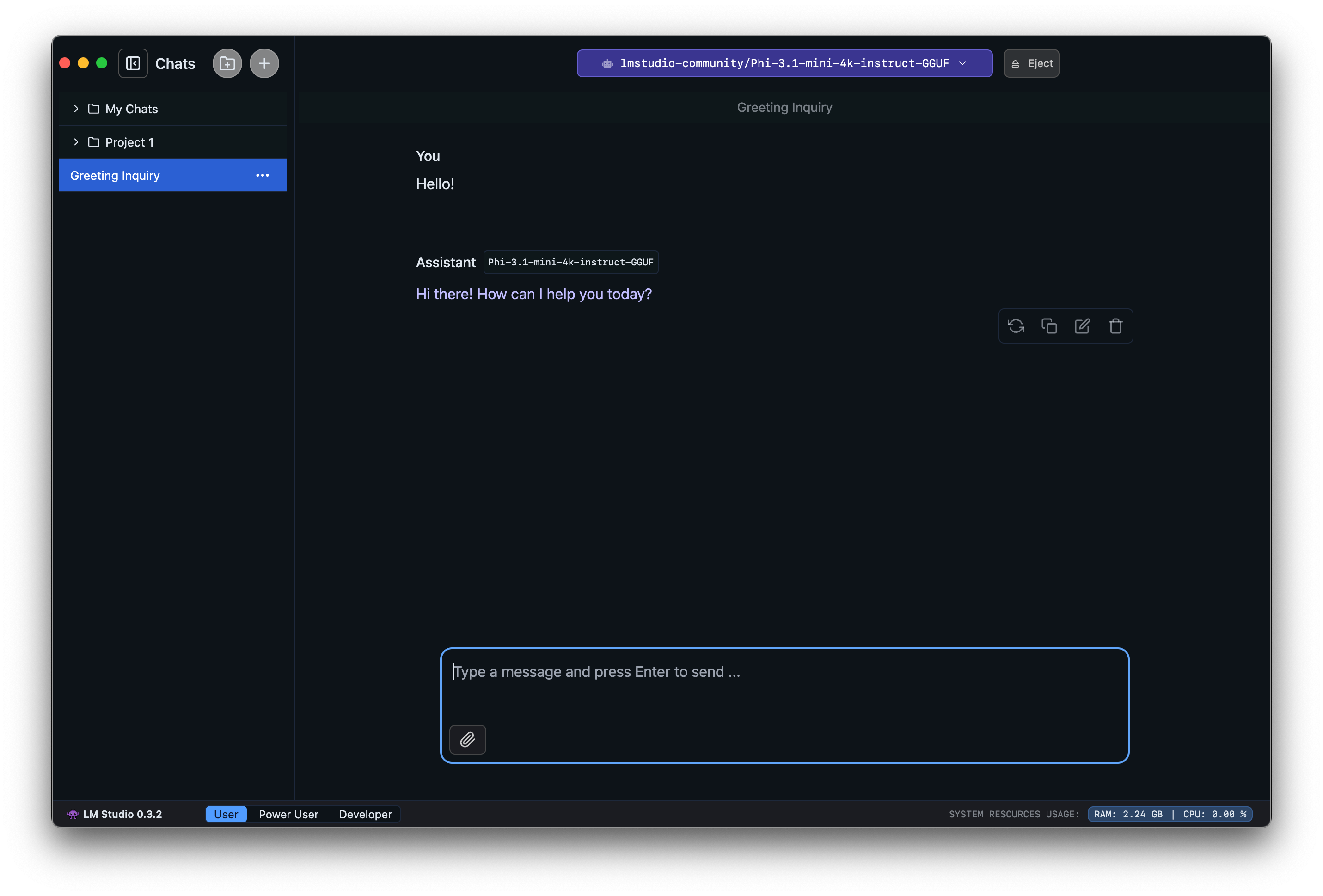

### 3. Chat!

Once the model is loaded, you can start a back-and-forth conversation with the model in the Chat tab.

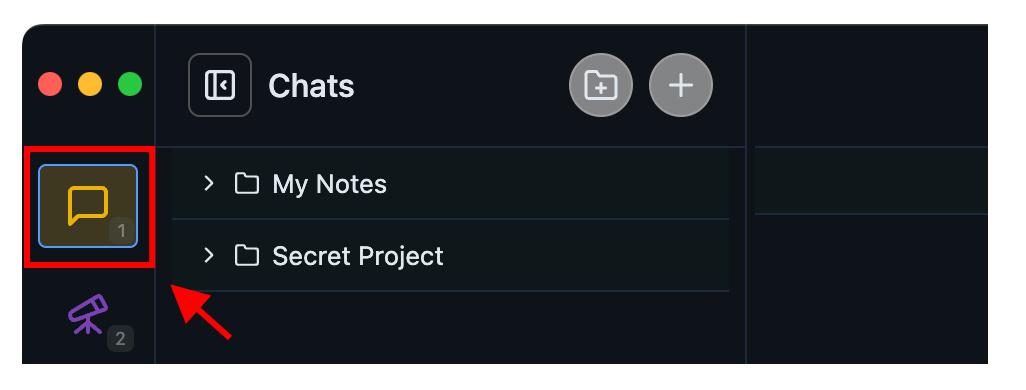

### Community

Chat with other LM Studio users, discuss LLMs, hardware, and more on the [LM Studio Discord server](https://discord.gg/aPQfnNkxGC).

### Manage chats

> Manage conversation threads with LLMs

LM Studio has a ChatGPT-like interface for chatting with local LLMs. You can create many different conversation threads and manage them in folders.

### Create a new chat

You can create a new chat by clicking the "+" button or by using a keyboard shortcut: `⌘` + `N` on Mac, or `ctrl` + `N` on Windows / Linux.

### Create a folder

Create a new folder by clicking the new folder button or by pressing: `⌘` + `shift` + `N` on Mac, or `ctrl` + `shift` + `N` on Windows / Linux.

### Drag and drop

You can drag and drop chats in and out of folders, and even drag folders into folders!

### Duplicate chats

You can duplicate a whole chat conversation by clicking the `•••` menu and selecting "Duplicate". If the chat has any files in it, they will be duplicated too.

## FAQ

#### Where are chats stored in the file system?

Right-click on a chat and choose "Reveal in Finder" / "Show in File Explorer". Conversations are stored in JSON format. It is NOT recommended to edit them manually, nor to rely on their structure.

#### Does the model learn from chats?

The model doesn't 'learn' from chats. The model only 'knows' the content that is present in the chat or is provided to it via configuration options such as the "system prompt".

## Conversations folder filesystem path

Mac / Linux:

```shell
~/.lmstudio/conversations/
```

Windows:

```ps
%USERPROFILE%\.lmstudio\conversations
```
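If you want to back up or inspect the conversations folder from a script, a minimal sketch is to resolve the path listed above and enumerate the `.json` files without parsing them, since their structure should not be relied on. The helper names are hypothetical, and the recursive search is an assumption in case chat folders are stored as subdirectories.

```python
from pathlib import Path
from typing import List, Optional


def conversations_dir(home: Optional[Path] = None) -> Path:
    """~/.lmstudio/conversations on macOS/Linux, %USERPROFILE%\\.lmstudio\\conversations on Windows."""
    # Path.home() resolves to %USERPROFILE% on Windows and $HOME elsewhere.
    base = home if home is not None else Path.home()
    return base / ".lmstudio" / "conversations"


def list_conversation_files(home: Optional[Path] = None) -> List[Path]:
    """Return the JSON files under the conversations folder, without reading them."""
    folder = conversations_dir(home)
    if not folder.is_dir():
        return []
    # rglob also finds files in subdirectories, in case folders map to directories.
    return sorted(folder.rglob("*.json"))


if __name__ == "__main__":
    for path in list_conversation_files():
        print(path)
```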

### Download an LLM

> Discover and download supported LLMs in LM Studio

LM Studio comes with a built-in model downloader that lets you download any supported model from [Hugging Face](https://huggingface.co).

### Searching for models

You can search for models by keyword (e.g. `llama`, `gemma`, `lmstudio`), or by providing a specific `user/model` string. You can even insert full Hugging Face URLs into the search bar!

###### Pro tip: you can jump to the Discover tab from anywhere by pressing `⌘` + `2` on Mac, or `ctrl` + `2` on Windows / Linux.

### Which download option to choose?

You will often see several options for any given model named things like `Q3_K_S`, `Q_8` etc. These are all copies of the same model, provided in varying degrees of fidelity. The `Q` represents a technique called "Quantization", which roughly means compressing model files in size, while giving up some degree of quality.

Choose a 4-bit option or higher if your machine is capable enough for running it.
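The number after `Q` roughly tracks the bits used per weight, so "capable enough" can be approximated by comparing params × bits / 8 bytes against the memory you can spare. The sketch below picks the highest-fidelity option of at least 4 bits that fits a budget; the label parsing and the `pick_download_option` helper are assumptions for illustration, and real quantized files are usually somewhat larger than their nominal bit width suggests.

```python
import re
from typing import Iterable, Optional, Tuple


def nominal_bits(label: str) -> Optional[int]:
    """Read the nominal bit width from labels like 'Q4_K_M', 'Q8_0' or 'Q_8' (None if absent)."""
    match = re.match(r"[Qq]_?(\d+)", label)
    return int(match.group(1)) if match else None


def pick_download_option(labels: Iterable[str], n_params: float,
                         memory_budget_gb: float, min_bits: int = 4) -> Optional[str]:
    """Highest-bit option with at least min_bits whose rough weight size fits the budget."""
    best: Optional[Tuple[int, str]] = None
    for label in labels:
        bits = nominal_bits(label)
        if bits is None or bits < min_bits:
            continue
        size_gb = n_params * bits / 8 / 1e9  # weights only; real files run a bit larger
        if size_gb <= memory_budget_gb and (best is None or bits > best[0]):
            best = (bits, label)
    return best[1] if best else None


if __name__ == "__main__":
    options = ["Q3_K_S", "Q4_K_M", "Q6_K", "Q8_0"]
    # Hypothetical 8B-parameter model with roughly 7 GB to spare.
    print(pick_download_option(options, n_params=8e9, memory_budget_gb=7.0))
```

With these inputs the 8-bit file (about 8 GB) is ruled out and `Q6_K` (about 6 GB) is selected; shrinking the budget below 4 GB returns `None`, meaning even a 4-bit copy is unlikely to fit.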

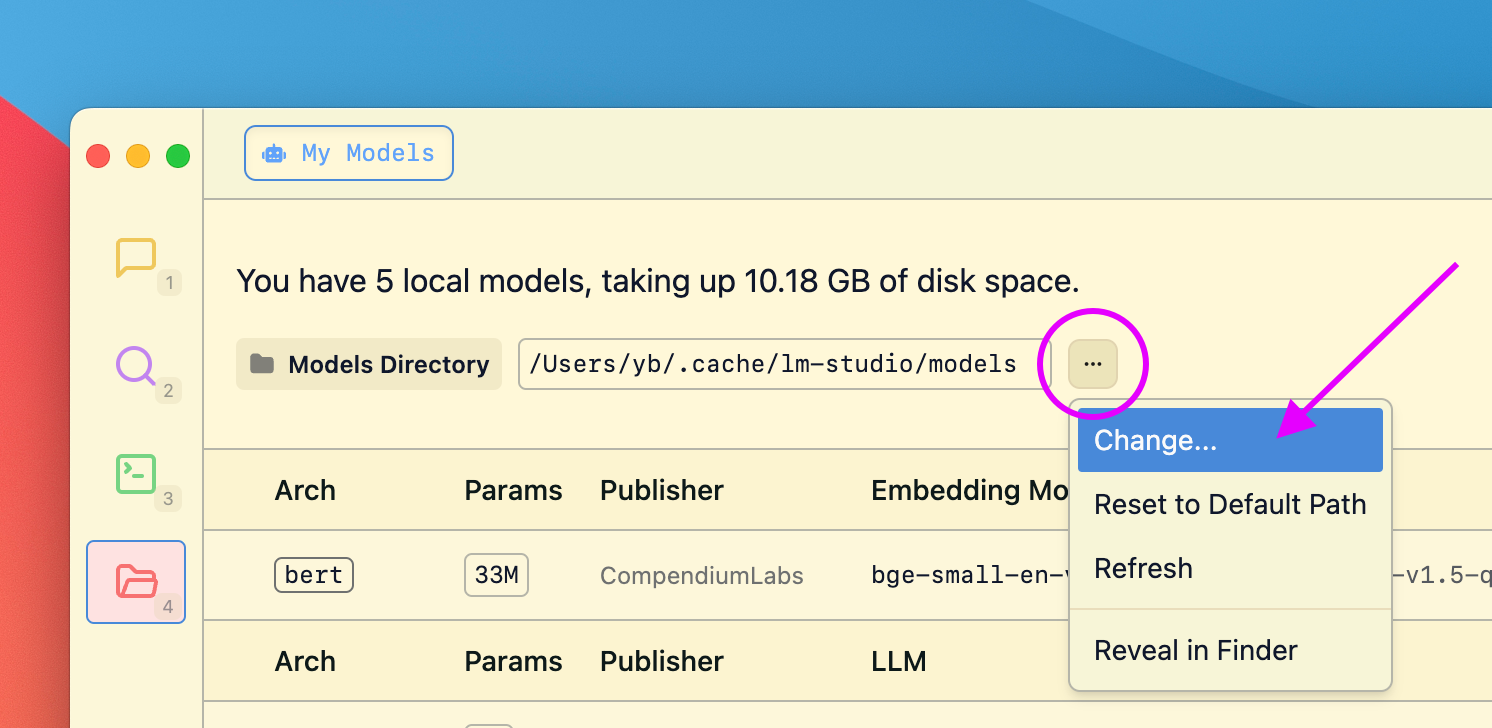

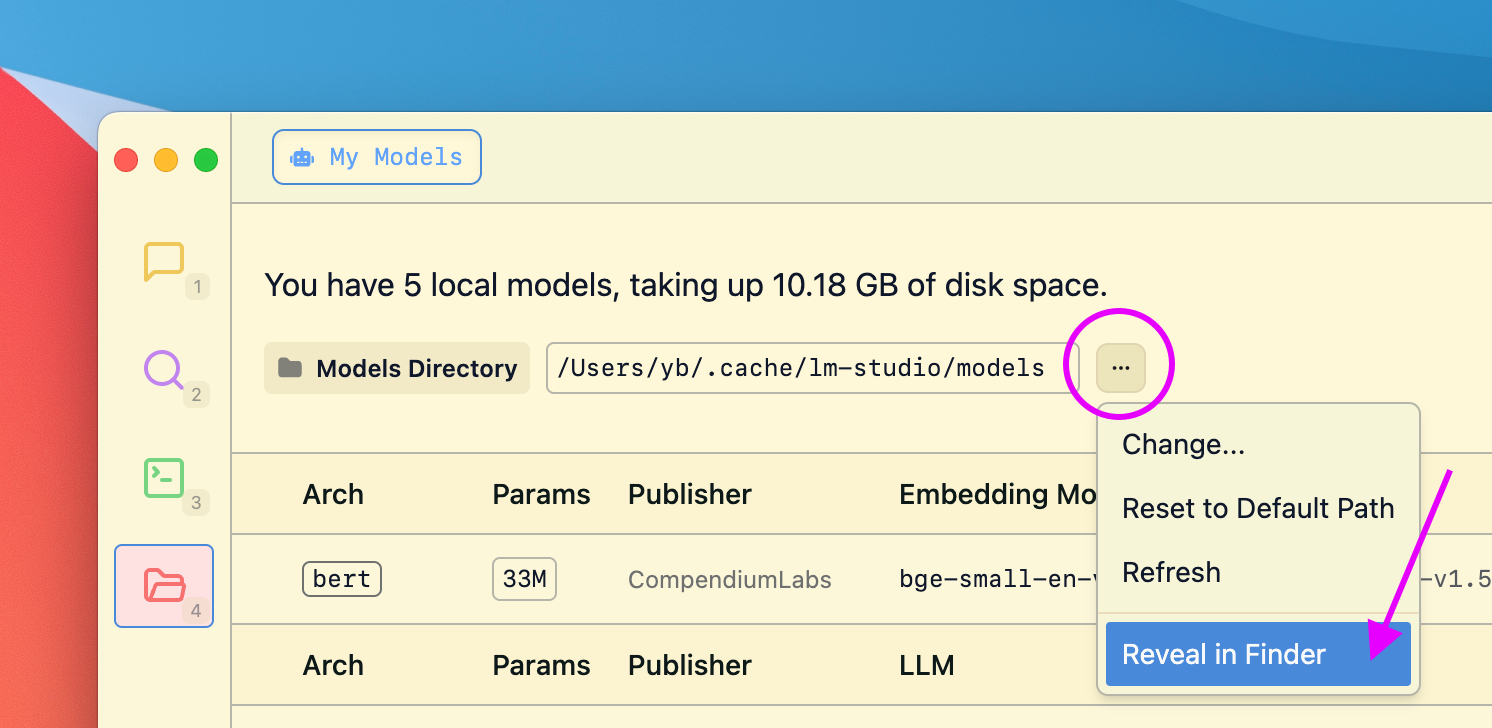

`Advanced`

### Changing the models directory

You can change the models directory by heading to My Models

### Chat with Documents

> How to provide local documents to an LLM as additional context

You can attach document files (`.docx`, `.pdf`, `.txt`) to chat sessions in LM Studio. This will provide additional context to LLMs you chat with through the app.

### Terminology

- **Retrieval**: Identifying relevant portions of a long source document
- **Query**: The input to the retrieval operation
- **RAG**: Retrieval-Augmented Generation\*
- **Context**: the 'working memory' of an LLM. Often limited to a few thousand words\*\*

###### \* In this context, 'Generation' means the output of the LLM.

###### \*\* A recent trend in newer LLMs is support for larger context sizes.

###### Context sizes are measured in "tokens". One token is often about 3/4 of a word.

### RAG vs. Full document 'in context'

If the document is short enough (i.e., if it fits in the model's context), LM Studio will add the file contents to the conversation in full. This is particularly useful for models that support longer context sizes such as Meta's Llama 3.1 and Mistral Nemo.

If the document is very long, LM Studio will opt into using "Retrieval Augmented Generation", frequently referred to as "RAG". RAG means attempting to fish out relevant bits of a very long document (or several documents) and providing them to the model for reference. This technique sometimes works really well, but sometimes it requires some tuning and experimentation.

### Tip for successful RAG

Provide as much context in your query as possible. Mention terms, ideas, and words you expect to be in the relevant source material. This will often increase the chance the system will provide useful context to the LLM. As always, experimentation is the best way to find what works best.

### Import Models

> Use model files you've downloaded outside of LM Studio

You can use compatible models you've downloaded outside of LM Studio by placing them in the expected directory structure.

### Use `lms import` (experimental)

To import a `GGUF` model you've downloaded outside of LM Studio, run the following command in your terminal:

```bash
lms import
```

LM Studio aims to preserve the directory structure of models downloaded from Hugging Face. The expected directory structure is as follows:

```xml
~/.lmstudio/models/
└── publisher/
    └── model/
        └── model-file.gguf
```

For example, if you have a model named `ocelot-v1` published by `infra-ai`, the structure would look like this:

```xml
~/.lmstudio/models/
└── infra-ai/
    └── ocelot-v1/
        └── ocelot-v1-instruct-q4_0.gguf
```
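If you prefer to place files by hand rather than through `lms import`, the layout above reduces to `models_root / publisher / model / filename`. The following is a minimal sketch of that mapping plus a move step; `expected_model_path` and `place_model_file` are hypothetical helpers, and the default root assumes you have not changed the models directory (pass your own root if you have).

```python
import shutil
from pathlib import Path

# Default models root from the layout above; pass another path if you changed it.
DEFAULT_MODELS_ROOT = Path.home() / ".lmstudio" / "models"


def expected_model_path(publisher: str, model: str, filename: str,
                        models_root: Path = DEFAULT_MODELS_ROOT) -> Path:
    """models_root/publisher/model/filename, mirroring the Hugging Face user/repo layout."""
    return models_root / publisher / model / filename


def place_model_file(src: Path, publisher: str, model: str,
                     models_root: Path = DEFAULT_MODELS_ROOT) -> Path:
    """Move a downloaded model file into the expected directory structure."""
    dest = expected_model_path(publisher, model, src.name, models_root)
    dest.parent.mkdir(parents=True, exist_ok=True)  # create publisher/model folders
    shutil.move(str(src), str(dest))
    return dest


if __name__ == "__main__":
    print(expected_model_path("infra-ai", "ocelot-v1", "ocelot-v1-instruct-q4_0.gguf"))
```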

### Config Presets

> Save your system prompts and other parameters as Presets for easy reuse across chats.

Configuration Presets are new in LM Studio 0.3.3 ([Release Notes](/blog/lmstudio-v0.3.3))

#### The Use Case for Presets

- Save your system prompts and inference parameters as a named `Preset`.
- Easily switch between different use cases, such as reasoning, creative writing, multi-turn conversations, or brainstorming.

_For migration from LM Studio 0.2.\* Presets, see [below](#migration-from-lm-studio-0-2-presets)_.

**Please report bugs and feedback to bugs [at] lmstudio [dot] ai.**

### Build your own Prompt Library

You can create your own prompt library by using Presets.

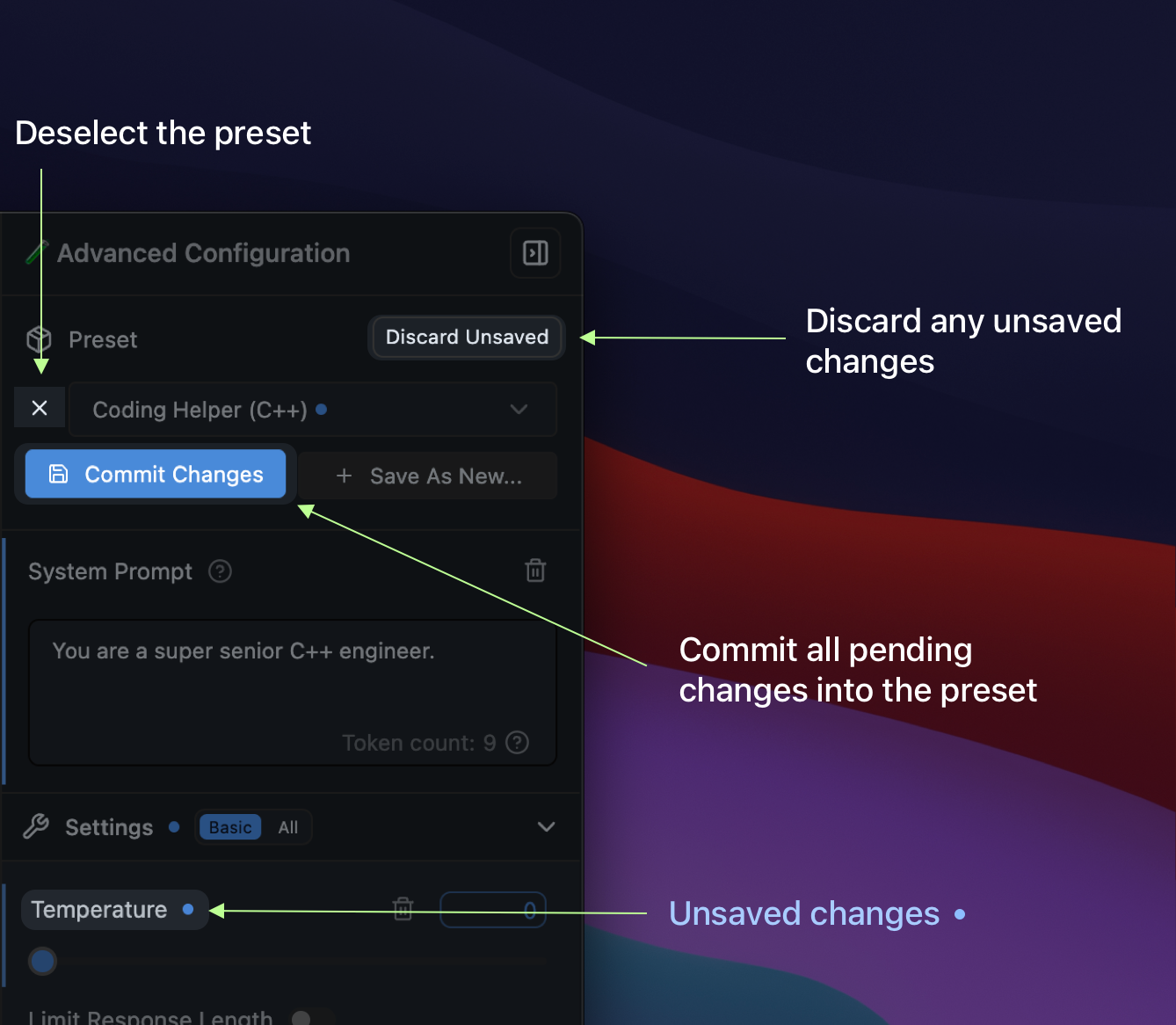

In addition to system prompts, every parameter under the Advanced Configuration sidebar can be recorded in a named Preset.

For example, you might want to always use a certain Temperature, Top P, or Max Tokens for a particular use case. You can save these settings as a Preset (with or without a system prompt) and easily switch between them.

### Saving, resetting, and deselecting Presets

Below is the anatomy of the Preset manager:

### Migration from LM Studio 0.2.\* Presets

- Presets you've saved in LM Studio 0.2.\* are automatically readable in 0.3.3 with no migration step needed.

- If you save **new changes** in a **legacy preset**, it'll be **copied** to a new format upon save.

- The old files are NOT deleted.

- Notable difference: Load parameters are not included in the new preset format.

- Favor editing the model's default config in My Models. See [how to do it here](/docs/configuration/per-model).

### Where Presets are stored

Presets are stored in the following directory:

#### macOS or Linux

```shell
~/.lmstudio/config-presets
```

#### Windows

```ps
%USERPROFILE%\.lmstudio\config-presets
```

## user-interface

### LM Studio in your language

> LM Studio is available in English, Spanish, French, German, Korean, Russian, and 6+ more languages.

LM Studio is available in `English`, `Spanish`, `Japanese`, `Chinese`, `German`, `Norwegian`, `Turkish`, `Russian`, `Korean`, `Polish`, `Vietnamese`, `Czech`, `Ukrainian`, and `Portuguese (BR,PT)` thanks to incredibly awesome efforts from the LM Studio community.

### Selecting a Language

You can choose a language in the Settings tab.

Use the dropdown menu under Preferences > Language.

```lms_protip
You can jump to Settings from anywhere in the app by pressing `cmd` + `,` on macOS or `ctrl` + `,` on Windows/Linux.
```

###### To get to the Settings page, you need to be on [Power User mode](/docs/modes) or higher.

#### Big thank you to community localizers 🙏

- Spanish [@xtianpaiva](https://github.com/xtianpaiva)
- Norwegian [@Exlo84](https://github.com/Exlo84)
- German [@marcelMaier](https://github.com/marcelMaier)
- Turkish [@progesor](https://github.com/progesor)
- Russian [@shelomitsky](https://github.com/shelomitsky), [@mlatysh](https://github.com/mlatysh), [@Adjacentai](https://github.com/Adjacentai)
- Korean [@williamjeong2](https://github.com/williamjeong2)
- Polish [@danieltechdev](https://github.com/danieltechdev)
- Czech [@ladislavsulc](https://github.com/ladislavsulc)
- Vietnamese [@trinhvanminh](https://github.com/trinhvanminh)
- Portuguese (BR) [@Sm1g00l](https://github.com/Sm1g00l)
- Portuguese (PT) [@catarino](https://github.com/catarino)
- Chinese (zh-HK), (zh-TW), (zh-CN) [@neotan](https://github.com/neotan)
- Chinese (zh-Hant) [@kywarai](https://github.com/kywarai)
- Ukrainian (uk) [@hmelenok](https://github.com/hmelenok)
- Japanese (ja) [@digitalsp](https://github.com/digitalsp)

Still under development (due to lack of RTL support in LM Studio)

- Hebrew: [@NHLOCAL](https://github.com/NHLOCAL)

#### Contributing to LM Studio localization

If you want to improve existing translations or contribute new ones, you're more than welcome to jump in.

LM Studio strings are maintained in https://github.com/lmstudio-ai/localization.

See instructions for contributing [here](https://github.com/lmstudio-ai/localization/blob/main/README.md).

### User, Power User, or Developer

> Hide or reveal advanced features

Starting with LM Studio 0.3.0, you can switch between the following modes:

- **User**
- **Power User**
- **Developer**

### Selecting a Mode

You can configure LM Studio to run in increasing levels of configurability.

Select between User, Power User, and Developer.

### Which mode should I choose?

#### `User`

Show only the chat interface, and auto-configure everything. This is the best choice for beginners or anyone who's happy with the default settings.

#### `Power User`

Use LM Studio in this mode if you want access to configurable [load](/docs/configuration/load) and [inference](/docs/configuration/inference) parameters as well as advanced chat features such as [insert, edit, & continue](/docs/advanced/context) (for either role, user or assistant).

#### `Developer`

Full access to all aspects of LM Studio. This includes keyboard shortcuts and development features. Check out the Developer section under Settings for more.

### Color Themes

> Customize LM Studio's color theme

LM Studio comes with a few built-in themes for app-wide color palettes.

### Selecting a Theme

You can choose a theme in the Settings tab.

Choosing the "Auto" option will automatically switch between Light and Dark themes based on your system settings.

```lms_protip
You can jump to Settings from anywhere in the app by pressing `cmd` + `,` on macOS or `ctrl` + `,` on Windows/Linux.
```

###### To get to the Settings page, you need to be on [Power User mode](/docs/modes) or higher.

## advanced

### Per-model Defaults

> You can set default settings for each model in LM Studio

`Advanced`

You can set default load settings for each model in LM Studio.

When the model is loaded anywhere in the app (including through [`lms load`](/docs/cli#load-a-model-with-options)) these settings will be used.

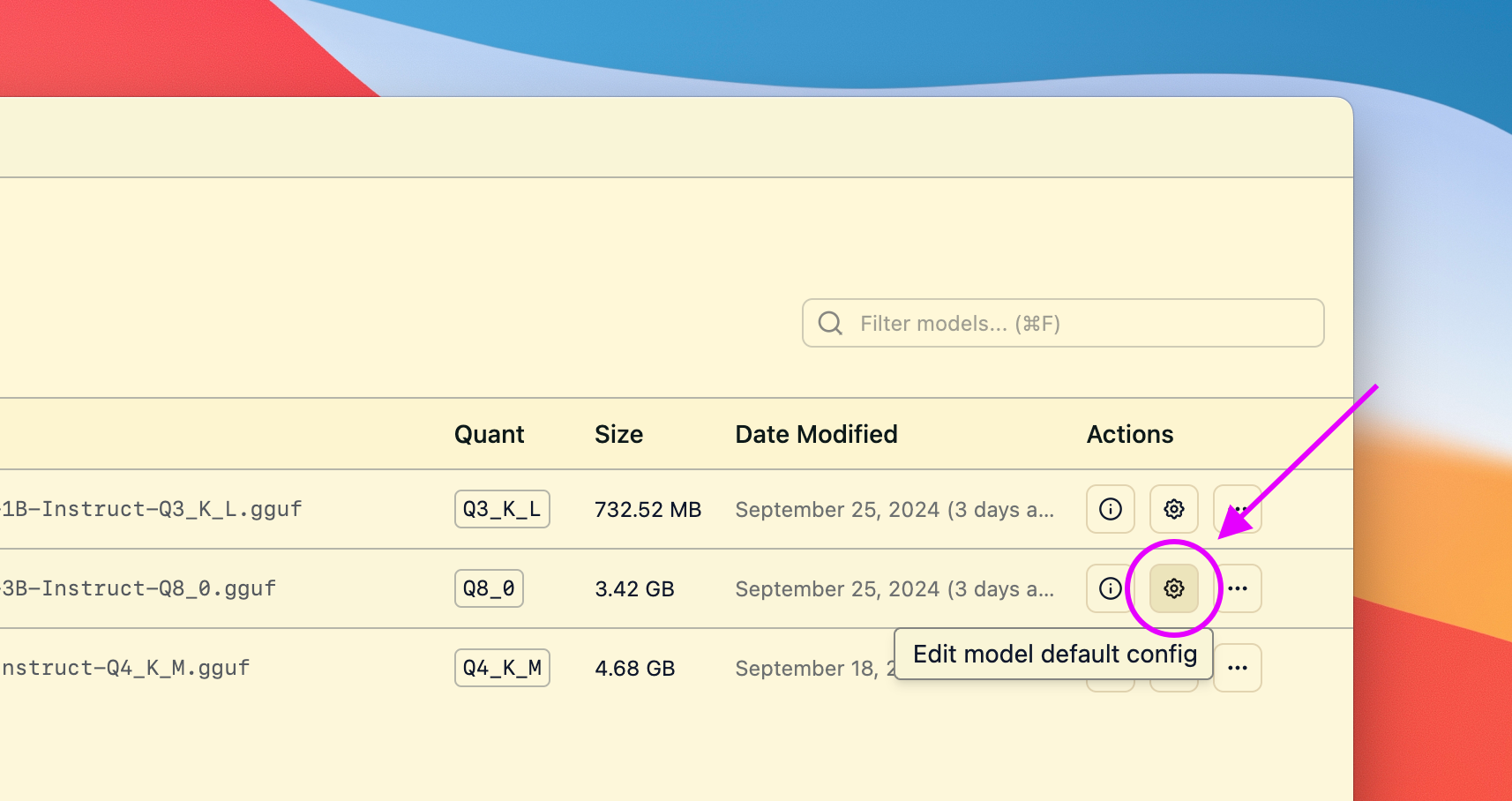

### Setting default parameters for a model

Head to the My Models tab and click on the gear ⚙️ icon to edit the model's default parameters.

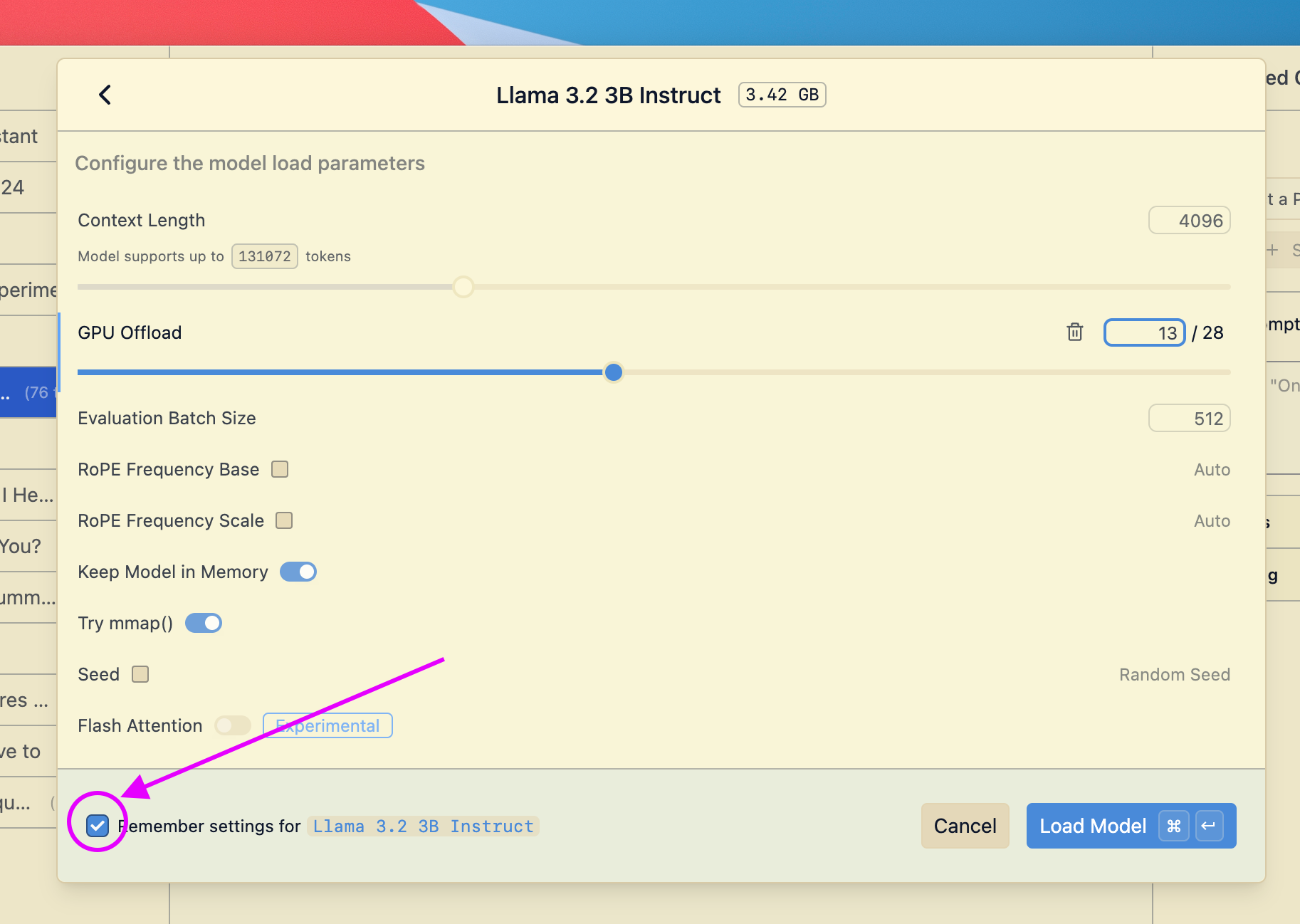

This will open a dialog where you can set the default parameters for the model.

Next time you load the model, these settings will be used.

```lms_protip
#### Reasons to set default load parameters (not required, totally optional)

- Set particular GPU offload settings for a given model
- Set a particular context size for a given model
- Whether or not to utilize Flash Attention for a given model
```

## Advanced Topics

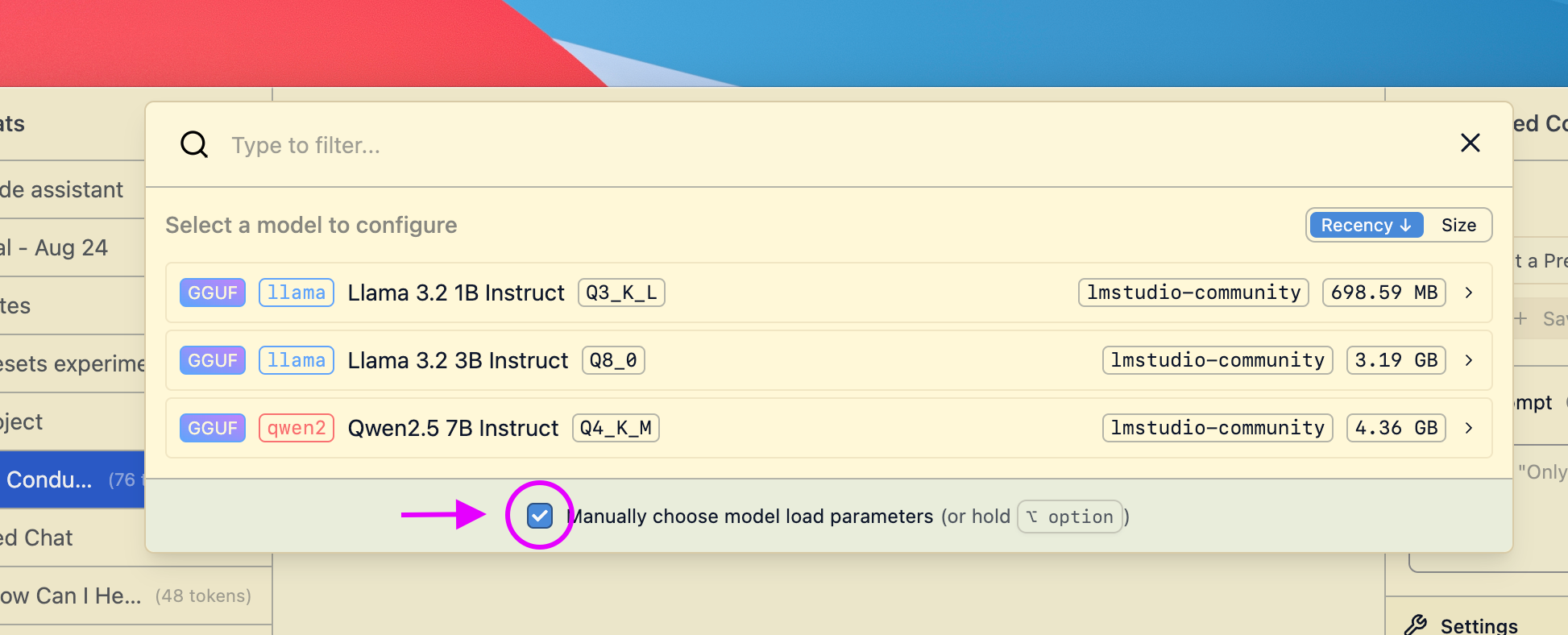

### Changing load settings before loading a model

When you load a model, you can optionally change the default load settings.

### Saving your changes as the default settings for a model

If you make changes to load settings when you load a model, you can save them as the default settings for that model.

### Community

Chat with other LM Studio power users, discuss configs, models, hardware, and more on the [LM Studio Discord server](https://discord.gg/aPQfnNkxGC).

### Prompt Template

> Optionally set or modify the model's prompt template

`Advanced`

By default, LM Studio will automatically configure the prompt template based on the model file's metadata.

However, you can customize the prompt template for any model.

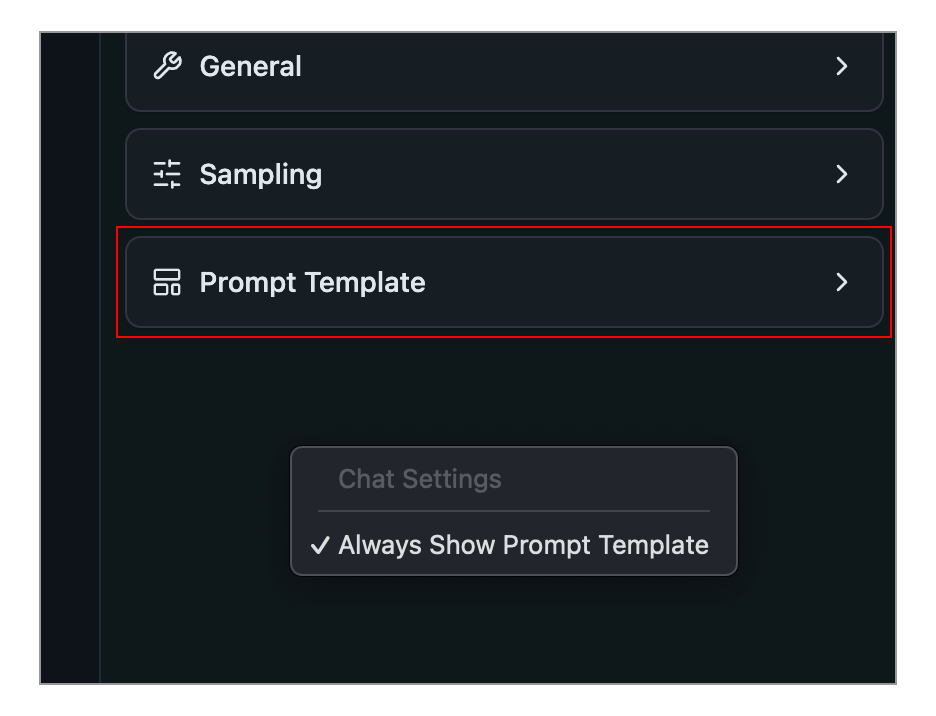

### Overriding the Prompt Template for a Specific Model

Head over to the My Models tab and click on the gear ⚙️ icon to edit the model's default parameters.

###### Pro tip: you can jump to the My Models tab from anywhere by pressing `⌘` + `3` on Mac, or `ctrl` + `3` on Windows / Linux.

### Customize the Prompt Template

###### 💡 In most cases you don't need to change the prompt template

When a model doesn't come with prompt template information, LM Studio will surface the `Prompt Template` config box in the **🧪 Advanced Configuration** sidebar.

You can make this config box always show up by right-clicking the sidebar and selecting **Always Show Prompt Template**.

### Prompt template options

#### Jinja Template

You can express the prompt template in Jinja.

###### 💡 [Jinja](https://en.wikipedia.org/wiki/Jinja_(template_engine)) is a templating engine used to encode the prompt template in several popular LLM model file formats.

#### Manual

You can also express the prompt template manually by specifying message role prefixes and suffixes.
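To illustrate what role prefixes and suffixes do, the sketch below wraps each message in its role's affixes and concatenates the result into the raw text a model would see. The ChatML-style strings are only an example (use whatever format your model was trained with), and `RoleAffixes` and `render_prompt` are hypothetical names, not LM Studio internals.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RoleAffixes:
    prefix: str
    suffix: str


# Example values in the ChatML style; not necessarily what your model expects.
CHATML: Dict[str, RoleAffixes] = {
    "system": RoleAffixes("<|im_start|>system\n", "<|im_end|>\n"),
    "user": RoleAffixes("<|im_start|>user\n", "<|im_end|>\n"),
    "assistant": RoleAffixes("<|im_start|>assistant\n", "<|im_end|>\n"),
}


def render_prompt(messages: List[Dict[str, str]], template: Dict[str, RoleAffixes],
                  open_assistant_turn: bool = True) -> str:
    """Wrap each message in its role's prefix and suffix, then concatenate."""
    parts = [template[m["role"]].prefix + m["content"] + template[m["role"]].suffix
             for m in messages]
    if open_assistant_turn:
        # Leave an unterminated assistant turn so the model writes the reply.
        parts.append(template["assistant"].prefix)
    return "".join(parts)


if __name__ == "__main__":
    print(render_prompt([{"role": "system", "content": "Be brief."},
                         {"role": "user", "content": "Hi!"}], CHATML))
```

A mismatch between these affixes and the model's training format is a common cause of rambling or role-confused output, which is one reason to override the template when metadata is missing or wrong.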

#### Reasons you might want to edit the prompt template:

1. The model's metadata is incorrect, incomplete, or LM Studio doesn't recognize it
2. The model does not have a prompt template in its metadata (e.g. custom or older models)
3. You want to customize the prompt template for a specific use case

### Speculative Decoding

> Speed up generation with a draft model

`Advanced`

Speculative decoding is a technique that can substantially increase the generation speed of large language models (LLMs) without reducing response quality.

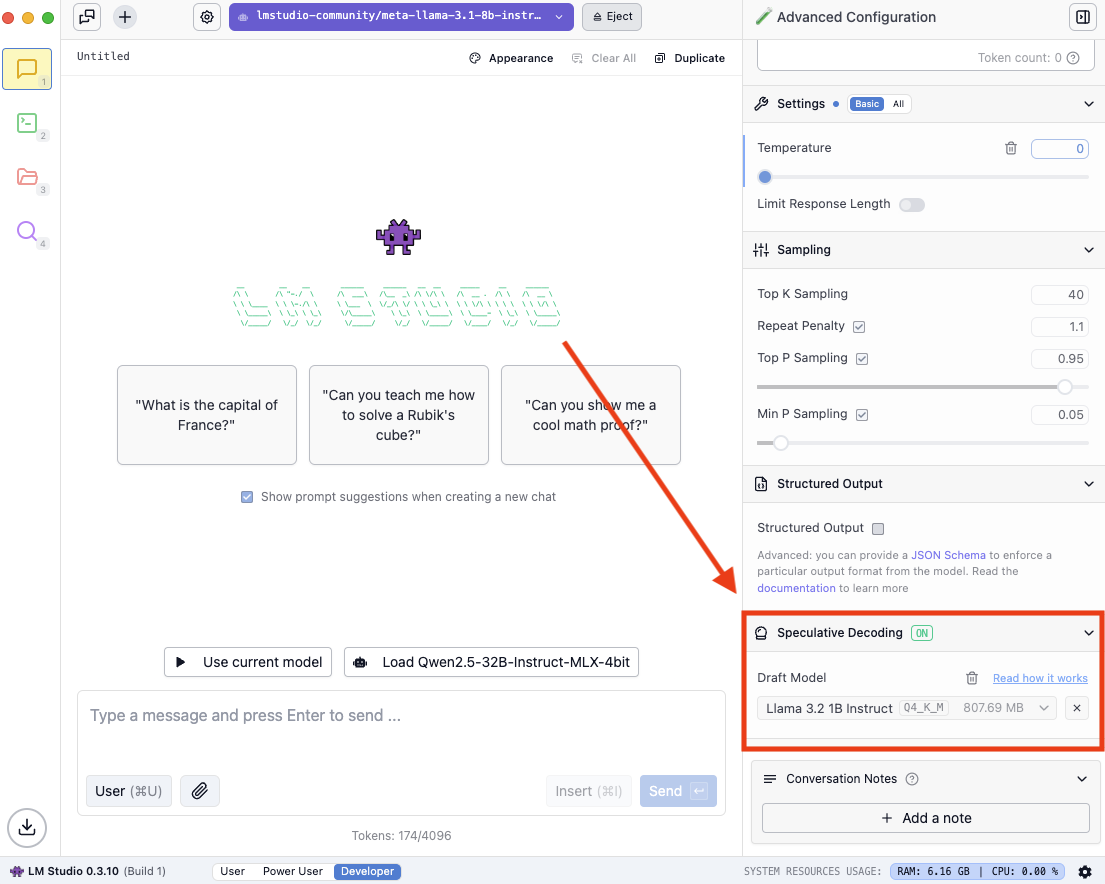

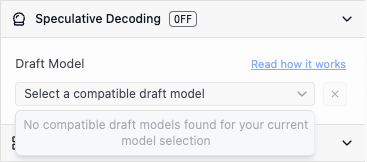

> 🔔 Speculative Decoding requires LM Studio 0.3.10 or newer, currently in beta. [Get it here](https://lmstudio.ai/beta-releases).

## What is Speculative Decoding

Speculative decoding relies on the collaboration of two models:

- A larger, "main" model
- A smaller, faster "draft" model

During generation, the draft model rapidly proposes potential tokens (subwords), which the main model can verify faster than it could generate them from scratch. To maintain quality, the main model only accepts tokens that match what it would have generated. After the last accepted draft token, the main model always generates one additional token.

For a model to be used as a draft model, it must have the same "vocabulary" as the main model.

## How to enable Speculative Decoding

On `Power User` mode or higher, load a model, then select a `Draft Model` within the `Speculative Decoding` section of the chat sidebar:

### Finding compatible draft models

You might see the following when you open the dropdown:

Try to download a lower parameter variant of the model you have loaded, if it exists. If no smaller versions of your model exist, find a pairing that does.

For example:

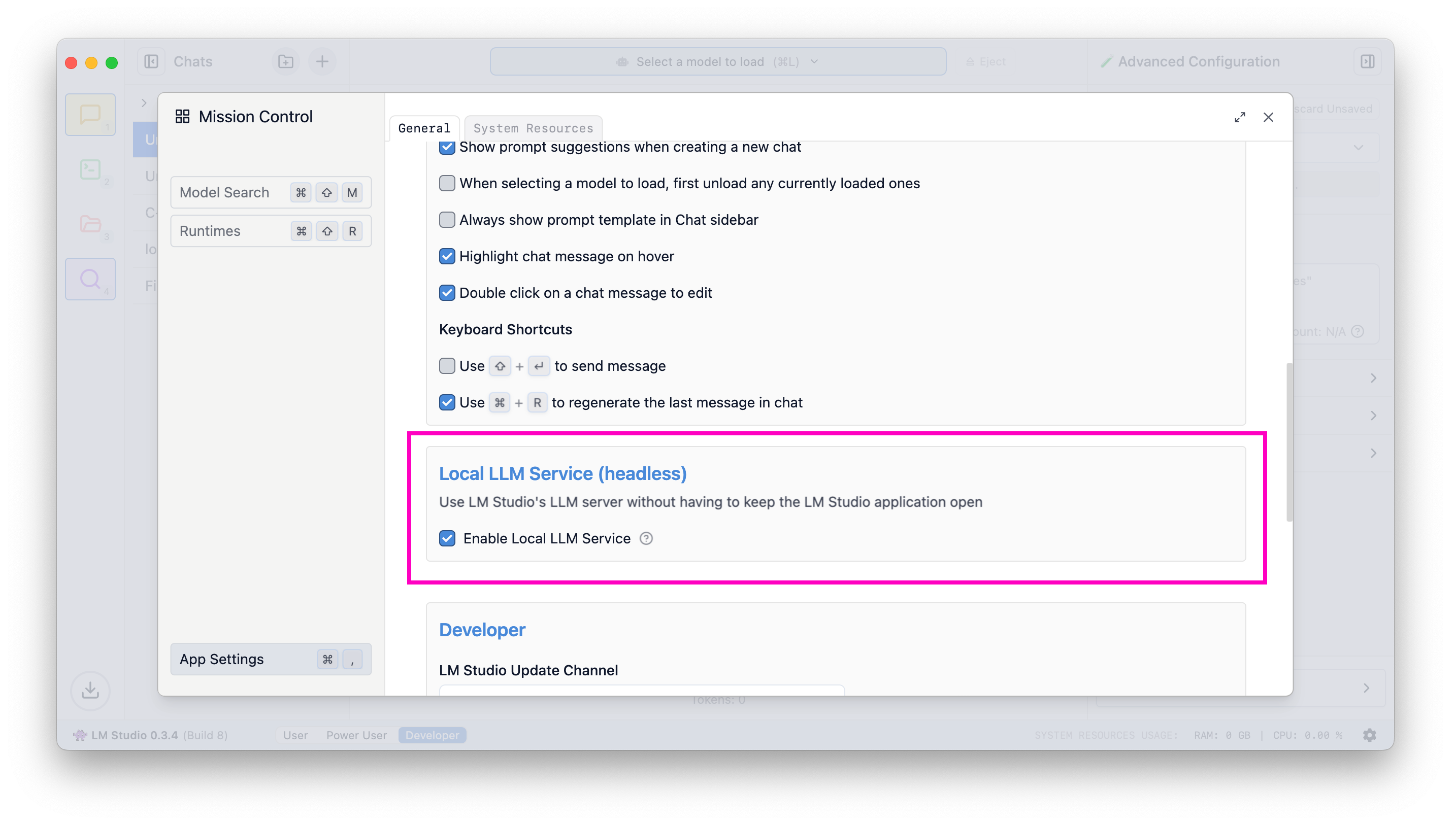
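The acceptance rule from "What is Speculative Decoding" can be sketched for the greedy (temperature 0) case: the draft proposes `k` tokens, the main model keeps the longest prefix that matches its own choices, then adds one token of its own. Models are stand-in functions here, and the names are hypothetical. Real implementations check all drafted tokens in a single forward pass of the main model, which is where the speedup comes from; the sequential loop below only shows which tokens survive, and sampling-based acceptance works differently.

```python
from typing import Callable, List, Sequence

# A "model" here is any function mapping the tokens so far to its greedy next token.
NextToken = Callable[[Sequence[str]], str]


def speculative_step(context: Sequence[str], draft_next: NextToken,
                     main_next: NextToken, k: int = 4) -> List[str]:
    """One round of greedy speculative decoding; returns the tokens to append."""
    # 1. The draft model proposes k tokens, one after another.
    proposal: List[str] = []
    draft_ctx = list(context)
    for _ in range(k):
        token = draft_next(draft_ctx)
        proposal.append(token)
        draft_ctx.append(token)

    # 2. The main model keeps draft tokens only while they match its own choice.
    accepted: List[str] = []
    main_ctx = list(context)
    for token in proposal:
        if main_next(main_ctx) != token:
            break
        accepted.append(token)
        main_ctx.append(token)

    # 3. After the last accepted draft token, the main model adds one token of its own.
    accepted.append(main_next(main_ctx))
    return accepted


if __name__ == "__main__":
    target = "the cat sat on the mat".split()  # what the main model would generate
    guess = "the cat sat in a hat".split()     # what the draft model guesses

    def main_model(ctx: Sequence[str]) -> str:
        return target[len(ctx)]

    def draft_model(ctx: Sequence[str]) -> str:
        return guess[len(ctx)]

    print(speculative_step(["the"], draft_model, main_model, k=4))  # ['cat', 'sat', 'on']
```

Three tokens come out of one round even though the draft was wrong at its third guess, and the output is exactly what the main model alone would have produced. This is why a draft model that often agrees with the main model (for example a smaller variant of the same family) gives the biggest gains.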

### Run LM Studio as a service (headless)

> GUI-less operation of LM Studio: run in the background, start on machine login, and load models on demand

`Advanced`

Starting in v[0.3.5](/blog/lmstudio-v0.3.5), LM Studio can be run as a service without the GUI. This is useful for running LM Studio on a server or in the background on your local machine.

### Run LM Studio as a service

Running LM Studio as a service consists of several new features intended to make it more efficient to use LM Studio as a developer tool.

1. The ability to run LM Studio without the GUI
2. The ability to start the LM Studio LLM server on machine login, headlessly
3. On-demand model loading

### Run the LLM service on machine login

To enable this, head to app settings (`Cmd` / `Ctrl` + `,`) and check the box to run the LLM server on login.

When this setting is enabled, exiting the app will minimize it to the system tray, and the LLM server will continue to run in the background.

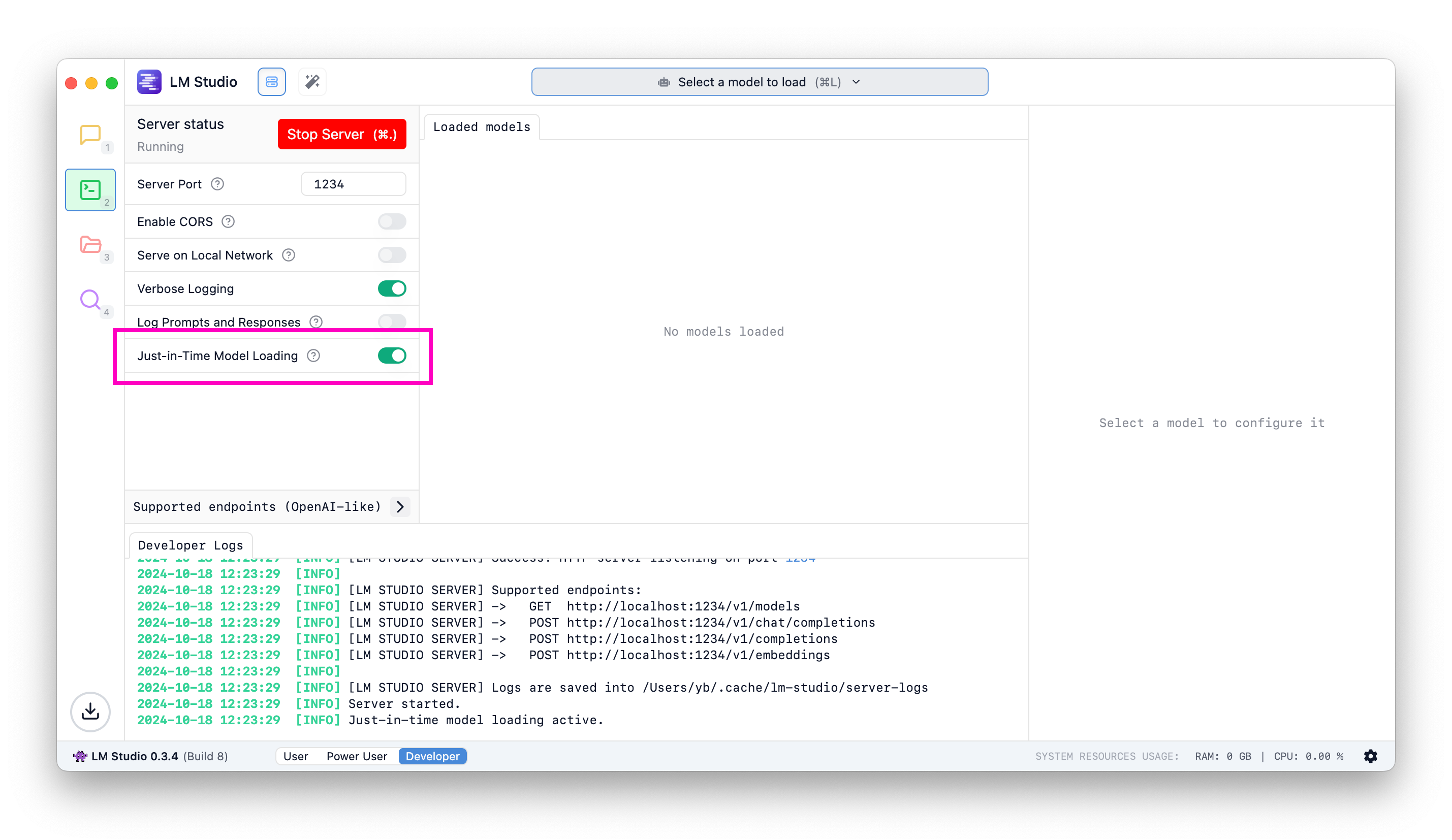

### Just-In-Time (JIT) model loading for OpenAI endpoints

Useful when utilizing LM Studio as an LLM service with other frontends or applications.

#### When JIT loading is ON:

- Calls to `/v1/models` will return all downloaded models, not only the ones loaded into memory
- Calls to inference endpoints will load the model into memory if it's not already loaded

#### When JIT loading is OFF:

- Calls to `/v1/models` will return only the models loaded into memory
- You have to first load the model into memory before being able to use it

##### What about auto unloading?

As of LM Studio 0.3.5, auto unloading is not yet in place. Models that are loaded via JIT loading will remain in memory until you unload them. We expect to implement more sophisticated memory management in the near future. Let us know if you have any feedback or suggestions.
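A client can call `/v1/models` to see what it may request; with JIT on that list covers downloaded models, with JIT off only loaded ones. The sketch below uses only the standard library and assumes the server listens on `localhost:1234` (the port used in this page's curl example) and returns OpenAI's list shape, `{"object": "list", "data": [{"id": ...}, ...]}`. `parse_model_ids` and `list_models` are hypothetical helper names.

```python
import json
import urllib.request
from typing import List

# Assumed server address; adjust if your server runs on another host or port.
BASE_URL = "http://localhost:1234"


def parse_model_ids(body: str) -> List[str]:
    """Extract model ids from an OpenAI-style list response."""
    return [entry["id"] for entry in json.loads(body).get("data", [])]


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> List[str]:
    """GET /v1/models and return the ids it reports."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print(list_models())
    except OSError as err:  # URLError subclasses OSError; raised when no server is listening
        print(f"Could not reach {BASE_URL}: {err}")
```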

### Auto Server Start

Your last server state will be saved and restored on app or service launch.

To achieve this programmatically, you can use the following command:

```bash
lms server start
```

```lms_protip
If you haven't already, bootstrap `lms` on your machine by following the instructions [here](/docs/cli).
```

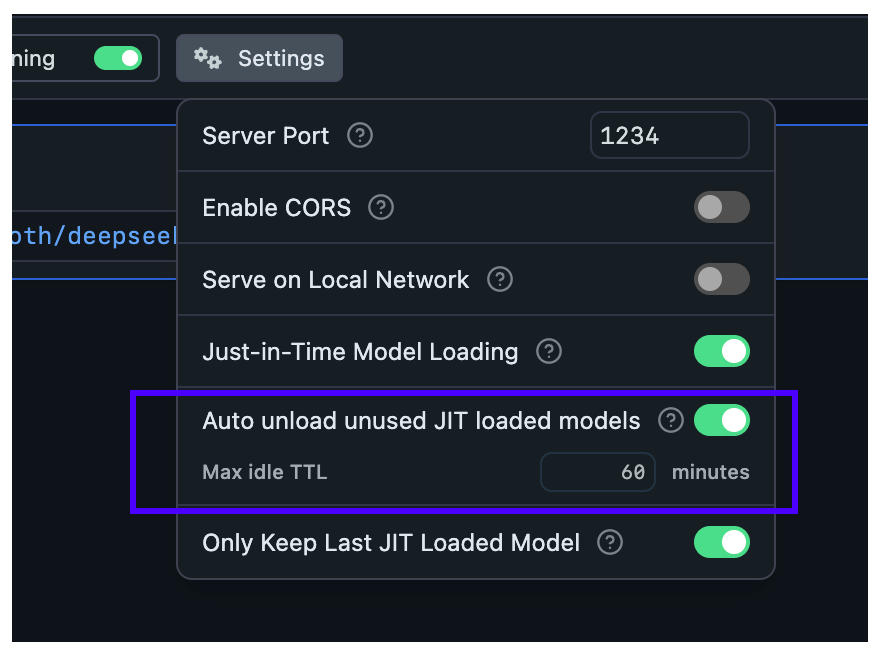

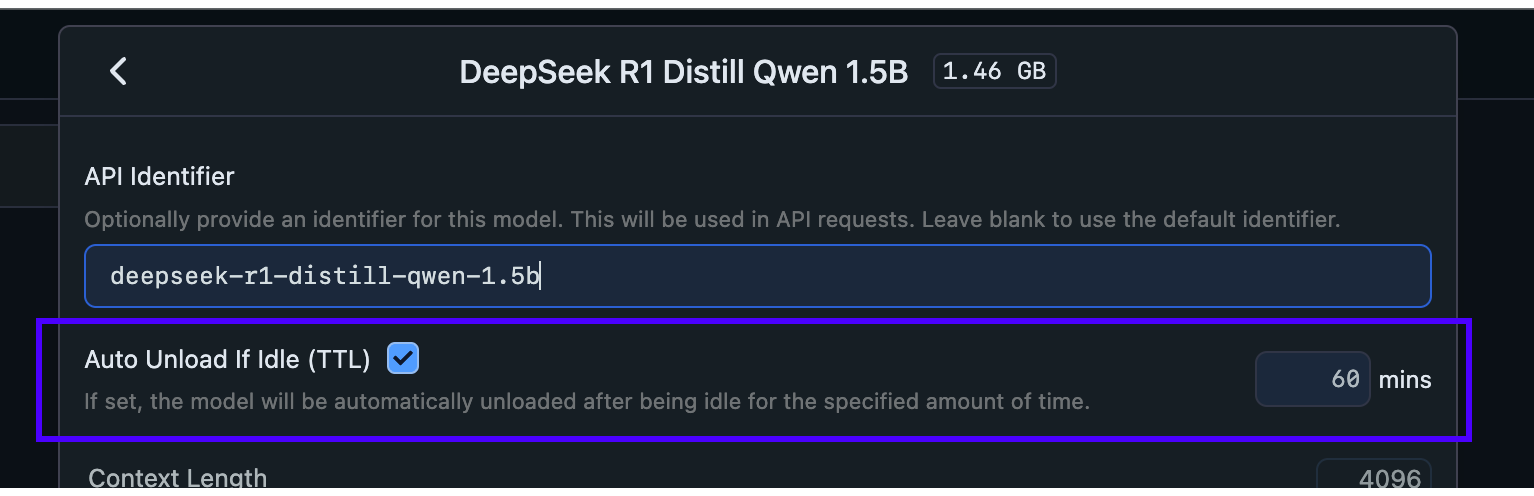

### Community

Chat with other LM Studio developers, discuss LLMs, hardware, and more on the [LM Studio Discord server](https://discord.gg/aPQfnNkxGC).

Please report bugs and issues in the [lmstudio-bug-tracker](https://github.com/lmstudio-ai/lmstudio-bug-tracker/issues) GitHub repository.

### Idle TTL and Auto-Evict

> Optionally auto-unload idle models after a certain amount of time (TTL)

```lms_noticechill
ℹ️ Requires LM Studio 0.3.9 (b1), currently in beta. Download from [here](https://lmstudio.ai/beta-releases)
```

LM Studio 0.3.9 (b1) introduces the ability to set a _time-to-live_ (TTL) for API models, and optionally auto-evict previously loaded models before loading new ones.

These features complement LM Studio's [on-demand model loading (JIT)](https://lmstudio.ai/blog/lmstudio-v0.3.5#on-demand-model-loading) to automate efficient memory management and reduce the need for manual intervention.

## Background

- `JIT loading` makes it easy to use your LM Studio models in other apps: you don't need to manually load the model first before being able to use it. However, this also means that models can stay loaded in memory even when they're not being used. `[Default: enabled]`

- (New) `Idle TTL` (technically: Time-To-Live) defines how long a model can stay loaded in memory without receiving any requests. When the TTL expires, the model is automatically unloaded from memory. You can set a TTL using the `ttl` field in your request payload. `[Default: 60 minutes]`

- (New) `Auto-Evict` is a feature that unloads previously JIT loaded models before loading new ones. This enables easy switching between models from client apps without having to manually unload them first. You can enable or disable this feature in Developer tab > Server Settings.
  `[Default: enabled]`

## Idle TTL

**Use case**: imagine you're using an app like [Zed](https://github.com/zed-industries/zed/blob/main/crates/lmstudio/src/lmstudio.rs#L340), [Cline](https://github.com/cline/cline/blob/main/src/api/providers/lmstudio.ts), or [Continue.dev](https://docs.continue.dev/customize/model-providers/more/lmstudio) to interact with LLMs served by LM Studio. These apps leverage JIT to load models on-demand the first time you use them.

**Problem**: When you're not actively using a model, you might not want it to remain loaded in memory.

**Solution**: Set a TTL for models loaded via API requests. The idle timer resets every time the model receives a request, so it won't disappear while you use it. A model is considered idle if it's not doing any work. When the idle TTL expires, the model is automatically unloaded from memory.

### Set App-default Idle TTL

By default, JIT-loaded models have a TTL of 60 minutes. You can configure a default TTL value for any model loaded via JIT like so:

### Set per-model TTL in API requests

When JIT loading is enabled, the **first request** to a model will load it into memory. You can specify a TTL for that model in the request payload.
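As a sketch of what such a first request carries, the helper below builds (but does not send) a `POST` request with the `ttl` field using only the Python standard library. The function name `build_ttl_request` and the default base URL `http://localhost:1234/v1` are assumptions for illustration; the payload shape follows the `curl` example in this section.

```python
import json
import urllib.request


def build_ttl_request(model: str, messages: list, ttl_seconds: int,
                      base_url: str = "http://localhost:1234/v1") -> urllib.request.Request:
    """Build (but do not send) a chat completion request that sets an idle TTL.

    Hypothetical helper: the `ttl` field only matters if this request
    triggers a JIT load of `model`.
    """
    payload = {
        "model": model,
        "ttl": ttl_seconds,  # idle time-to-live, in seconds
        "messages": messages,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_ttl_request(
    "deepseek-r1-distill-qwen-7b",
    [{"role": "user", "content": "Hello"}],
    ttl_seconds=300,
)
# With a server running, it could be sent with: urllib.request.urlopen(req)
```

If you use the official `openai` Python package instead, a non-standard field such as `ttl` can be passed through the `extra_body` argument, e.g. `client.chat.completions.create(model=..., messages=..., extra_body={"ttl": 300})`.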

This works for requests targeting both the [OpenAI compatibility API](openai-api) and [LM Studio's REST API](rest-api):


```diff
 curl http://localhost:1234/api/v0/chat/completions \
   -H "Content-Type: application/json" \
   -d '{
     "model": "deepseek-r1-distill-qwen-7b",
+    "ttl": 300,
     "messages": [ ... ]
   }'
```

###### This will set a TTL of 5 minutes (300 seconds) for this model if it is JIT loaded.

### Set TTL for models loaded with `lms`

By default, models loaded with `lms load` do not have a TTL, and will remain loaded in memory until you manually unload them.

You can set a TTL for a model loaded with `lms` like so:

```bash
lms load
```

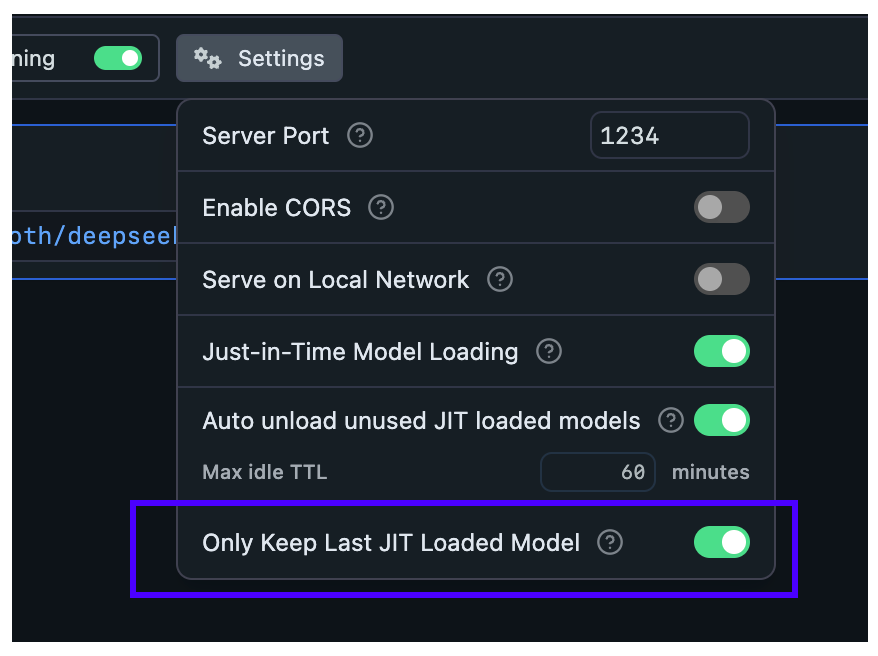

## Configure Auto-Evict for JIT loaded models

With this setting, you can ensure new models loaded via JIT automatically unload previously loaded models first.

This is useful when you want to switch between models from another app without worrying about memory building up with unused models.


**When Auto-Evict is ON** (default):

- At most `1` model is kept loaded in memory at a time (when loaded via JIT)

- Non-JIT loaded models are not affected

**When Auto-Evict is OFF**:

- Switching models from an external app will keep previous models loaded in memory

- Models will remain loaded until either:
  - Their TTL expires
  - You manually unload them

This feature works in tandem with TTL to provide better memory management for your workflow.
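To make the interaction between idle TTL and Auto-Evict concrete, here is a toy in-memory model of the rules described above. It is not LM Studio's implementation: the class `ToyModelManager`, its methods, and the explicit `now` timestamps are hypothetical, each request is treated as instantaneous, and it only tracks which models would stay resident.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class LoadedModel:
    jit: bool               # True if loaded on demand by an API request
    ttl: Optional[float]    # idle TTL in seconds; None means it never expires
    last_used: float        # time of the most recent request


class ToyModelManager:
    """Toy model of idle TTL + Auto-Evict; NOT LM Studio's actual implementation."""

    def __init__(self, auto_evict: bool = True, default_ttl: float = 3600.0):
        self.auto_evict = auto_evict
        self.default_ttl = default_ttl  # 60 minutes, the documented JIT default
        self.models: Dict[str, LoadedModel] = {}

    def expire_idle(self, now: float) -> None:
        # Unload every model that has been idle for at least its TTL
        expired = [name for name, m in self.models.items()
                   if m.ttl is not None and now - m.last_used >= m.ttl]
        for name in expired:
            del self.models[name]

    def request(self, name: str, now: float, ttl: Optional[float] = None) -> None:
        """Simulate an API request: JIT-load `name` if needed, else reset its idle timer."""
        self.expire_idle(now)
        if name in self.models:
            self.models[name].last_used = now  # the idle timer resets on every request
            return
        if self.auto_evict:
            # Unload previously JIT-loaded models; manually loaded ones are unaffected
            for other in [n for n, m in self.models.items() if m.jit]:
                del self.models[other]
        # The request's ttl only takes effect when this request triggers the load
        effective_ttl = ttl if ttl is not None else self.default_ttl
        self.models[name] = LoadedModel(jit=True, ttl=effective_ttl, last_used=now)

    def load_manually(self, name: str, now: float, ttl: Optional[float] = None) -> None:
        # Mirrors `lms load`: no TTL unless one is given
        self.models[name] = LoadedModel(jit=False, ttl=ttl, last_used=now)


mgr = ToyModelManager(auto_evict=True)
mgr.load_manually("embedder", now=0)
mgr.request("model-a", now=0, ttl=300)
mgr.request("model-b", now=10)  # Auto-Evict unloads model-a first
print(sorted(mgr.models))       # ['embedder', 'model-b']
```

With `auto_evict=False`, both JIT models in the same sequence would stay loaded until their individual TTLs run out.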

### Nomenclature

`TTL` (Time-To-Live) is a term borrowed from networking protocols and cache systems. It defines how long a resource can remain allocated before it's considered stale and evicted.

### Structured Output

> Enforce LLM response formats using JSON schemas.

You can enforce a particular response format from an LLM by providing a JSON schema to the `/v1/chat/completions` endpoint, via LM Studio's REST API (or via any OpenAI client).


### Start LM Studio as a server

To use LM Studio programmatically from your own code, run LM Studio as a local server.

You can turn on the server from the "Developer" tab in LM Studio, or via the `lms` CLI:

```bash
lms server start
```

###### Install `lms` by running `npx lmstudio install-cli`

This will allow you to interact with LM Studio via an OpenAI-like REST API. For an intro to LM Studio's OpenAI-like API, see [Running LM Studio as a server](/docs/basics/server).

### Structured Output

The API supports structured JSON outputs through the `/v1/chat/completions` endpoint when given a [JSON schema](https://json-schema.org/overview/what-is-jsonschema). Doing this will cause the LLM to respond in valid JSON conforming to the schema provided.

It follows the same format as OpenAI's recently announced [Structured Output](https://platform.openai.com/docs/guides/structured-outputs) API and is expected to work via the OpenAI client SDKs.

**Example using `curl`**

This example demonstrates a structured output request using the `curl` utility.

To run this example on Mac or Linux, use any terminal. On Windows, use [Git Bash](https://git-scm.com/download/win).

```bash
curl http://{{hostname}}:{{port}}/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "{{model}}",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful jokester."
      },
      {
        "role": "user",
        "content": "Tell me a joke."
      }
    ],
    "response_format": {
      "type": "json_schema",
      "json_schema": {
        "name": "joke_response",
        "strict": true,
        "schema": {
          "type": "object",
          "properties": {
            "joke": {
              "type": "string"
            }
          },
          "required": ["joke"]
        }
      }
    },
    "temperature": 0.7,
    "max_tokens": 50,
    "stream": false
  }'
```

All parameters recognized by `/v1/chat/completions` will be honored, and the JSON schema should be provided in the `json_schema` field of `response_format`.

The JSON object will be provided in `string` form in the typical response field, `choices[0].message.content`, and will need to be parsed into a JSON object.
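Because a model may not always follow the schema, it can be worth checking the parsed content before using it. The sketch below is a minimal check, not a full JSON Schema validator: `parse_structured_content` is a hypothetical helper that takes the response as a plain `dict` (as returned by the `curl` request), parses `choices[0].message.content`, and only verifies that the result is a JSON object containing the schema's top-level `required` keys. For complete validation, a dedicated JSON Schema library (such as the Python `jsonschema` package) would be more robust.

```python
import json
from typing import Any, Dict


def parse_structured_content(response: Dict[str, Any], schema: Dict[str, Any]) -> Dict[str, Any]:
    """Parse choices[0].message.content and check the schema's top-level required keys.

    Hypothetical helper; raises ValueError if the model's output does not comply.
    """
    content = response["choices"][0]["message"]["content"]
    try:
        data = json.loads(content)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object at the top level")
    missing = [key for key in schema.get("required", []) if key not in data]
    if missing:
        raise ValueError(f"missing required keys: {missing}")
    return data


joke_schema = {
    "type": "object",
    "properties": {"joke": {"type": "string"}},
    "required": ["joke"],
}
# A hand-written response shaped like the curl example's output (content is a JSON string)
fake_response = {"choices": [{"message": {"content": '{"joke": "Why did the model cross the road?"}'}}]}
print(parse_structured_content(fake_response, joke_schema)["joke"])
```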
**Example using `python`**

```python
from openai import OpenAI
import json

# Initialize OpenAI client that points to the local LM Studio server
client = OpenAI(
    base_url="http://localhost:1234/v1",
    api_key="lm-studio"
)

# Define the conversation with the AI
messages = [
    {"role": "system", "content": "You are a helpful AI assistant."},
    {"role": "user", "content": "Create 1-3 fictional characters"}
]

# Define the expected response structure
character_schema = {
    "type": "json_schema",
    "json_schema": {
        "name": "characters",
        "schema": {
            "type": "object",
            "properties": {
                "characters": {
                    "type": "array",
                    "items": {
                        "type": "object",
                        "properties": {
                            "name": {"type": "string"},
                            "occupation": {"type": "string"},
                            "personality": {"type": "string"},
                            "background": {"type": "string"}
                        },
                        "required": ["name", "occupation", "personality", "background"]
                    },
                    "minItems": 1,
                }
            },
            "required": ["characters"]
        },
    }
}

# Get response from AI
response = client.chat.completions.create(
    model="your-model",
    messages=messages,
    response_format=character_schema,
)

# Parse and display the results
results = json.loads(response.choices[0].message.content)
print(json.dumps(results, indent=2))
```

**Important**: Not all models are capable of structured output, particularly LLMs below 7B parameters.

Check the model card README if you are unsure whether the model supports structured output.

### Structured output engine

- For `GGUF` models: uses `llama.cpp`'s grammar-based sampling APIs.
- For `MLX` models: uses [Outlines](https://github.com/dottxt-ai/outlines).

The MLX implementation is available on GitHub: [lmstudio-ai/mlx-engine](https://github.com/lmstudio-ai/mlx-engine).

### Community

Chat with other LM Studio users, discuss LLMs, hardware, and more on the [LM Studio Discord server](https://discord.gg/aPQfnNkxGC).

### Tool Use

> Enable LLMs to interact with external functions and APIs.

Tool use enables LLMs to request calls to external functions and APIs through the `/v1/chat/completions` endpoint, via LM Studio's REST API (or via any OpenAI client). This expands their functionality far beyond text output.

> 🔔 Tool use requires LM Studio 0.3.6 or newer, [get it here](https://lmstudio.ai/download)

## Quick Start

### 1. Start LM Studio as a server

To use LM Studio programmatically from your own code, run LM Studio as a local server.

You can turn on the server from the "Developer" tab in LM Studio, or via the `lms` CLI:

```bash
lms server start
```

###### Install `lms` by running `npx lmstudio install-cli`

This will allow you to interact with LM Studio via an OpenAI-like REST API. For an intro to LM Studio's OpenAI-like API, see [Running LM Studio as a server](/docs/basics/server).

### 2. Load a Model

You can load a model from the "Chat" or "Developer" tabs in LM Studio, or via the `lms` CLI:

```bash
lms load
```

### 3. Copy, Paste, and Run an Example!

- `Curl`
  - [Single Turn Tool Call Request](#example-using-curl)
- `Python`
  - [Single Turn Tool Call + Tool Use](#single-turn-example)
  - [Multi-Turn Example](#multi-turn-example)
  - [Advanced Agent Example](#advanced-agent-example)

## Tool Use

### What really is "Tool Use"?

Tool use describes:

- LLMs output text requesting functions to be called (LLMs cannot directly execute code)
- Your code executes those functions
- Your code feeds the results back to the LLM.

### High-level flow

```xml
┌──────────────────────────┐
│ SETUP: LLM + Tool list   │
└──────────┬───────────────┘
           ▼
┌──────────────────────────┐
│ Get user input           │◄────┐
└──────────┬───────────────┘     │
           ▼                     │
┌──────────────────────────┐     │
│ LLM prompted w/messages  │     │
└──────────┬───────────────┘     │
           ▼                     │
      Needs tools?               │
       │      │                  │
      Yes     No                 │
       │      │                  │
       ▼      └────────────┐     │
┌─────────────┐            │     │
│Tool Response│            │     │
└──────┬──────┘            │     │
       ▼                   │     │
┌─────────────┐            │     │
│Execute tools│            │     │
└──────┬──────┘            │     │
       ▼                   ▼     │
┌─────────────┐      ┌───────────┐
│Add results  │      │  Normal   │
│to messages  │      │ response  │
└──────┬──────┘      └─────┬─────┘
       │                   ▲
       └───────────────────┘
```

### In-depth flow

LM Studio supports tool use through the `/v1/chat/completions` endpoint when given function definitions in the `tools` parameter of the request body. Tools are specified as an array of function definitions that describe their parameters and usage.

It follows the same format as OpenAI's [Function Calling](https://platform.openai.com/docs/guides/function-calling) API and is expected to work via the OpenAI client SDKs.

We will use [lmstudio-community/Qwen2.5-7B-Instruct-GGUF](https://model.lmstudio.ai/download/lmstudio-community/Qwen2.5-7B-Instruct-GGUF) as the model in this example flow.

1. You provide a list of tools to an LLM. These are the tools that the model can _request_ calls to.
For example:

```json
// the list of tools is model-agnostic
[
  {
    "type": "function",
    "function": {
      "name": "get_delivery_date",
      "description": "Get the delivery date for a customer's order",
      "parameters": {
        "type": "object",
        "properties": {
          "order_id": {
            "type": "string"
          }
        },
        "required": ["order_id"]
      }
    }
  }
]
```

This list will be injected into the `system` prompt of the model depending on the model's chat template. For `Qwen2.5-Instruct`, this looks like:

```xml
<|im_start|>system
You are Qwen, created by Alibaba Cloud. You are a helpful assistant.

# Tools

You may call one or more functions to assist with the user query.

You are provided with function signatures within
```

## Supported Models

Through LM Studio, **all** models support at least some degree of tool use.

However, there are currently two levels of support that may impact the quality of the experience: Native and Default.

Models with Native tool use support will have a hammer badge in the app, and generally perform better in tool use scenarios.

### Native tool use support

"Native" tool use support means that both:

1. The model has a chat template that supports tool use (usually means the model has been trained for tool use)
   - This is what will be used to format the `tools` array into the system prompt and tell the model how to format tool calls
   - Example: [Qwen2.5-Instruct chat template](https://huggingface.co/mlx-community/Qwen2.5-7B-Instruct-4bit/blob/c26a38f6a37d0a51b4e9a1eb3026530fa35d9fed/tokenizer_config.json#L197)
2. LM Studio supports that model's tool use format
   - Required for LM Studio to properly input the chat history into the chat template, and parse the tool calls the model outputs into the `chat.completion` object

Models that currently have native tool use support in LM Studio (subject to change):

- Qwen
  - `GGUF` [lmstudio-community/Qwen2.5-7B-Instruct-GGUF](https://model.lmstudio.ai/download/lmstudio-community/Qwen2.5-7B-Instruct-GGUF) (4.68 GB)
  - `MLX` [mlx-community/Qwen2.5-7B-Instruct-4bit](https://model.lmstudio.ai/download/mlx-community/Qwen2.5-7B-Instruct-4bit) (4.30 GB)
- Llama-3.1, Llama-3.2
  - `GGUF` [lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF](https://model.lmstudio.ai/download/lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF) (4.92 GB)
  - `MLX` [mlx-community/Meta-Llama-3.1-8B-Instruct-8bit](https://model.lmstudio.ai/download/mlx-community/Meta-Llama-3.1-8B-Instruct-8bit) (8.54 GB)
- Mistral
  - `GGUF` [bartowski/Ministral-8B-Instruct-2410-GGUF](https://model.lmstudio.ai/download/bartowski/Ministral-8B-Instruct-2410-GGUF) (4.67 GB)
  - `MLX` [mlx-community/Ministral-8B-Instruct-2410-4bit](https://model.lmstudio.ai/download/mlx-community/Ministral-8B-Instruct-2410-4bit) (4.67 GB)

### Default tool use support

"Default" tool use support means that **either**:

1. The model does not have a chat template that supports tool use (usually means the model has not been trained for tool use)
2. LM Studio does not currently support that model's tool use format

Under the hood, default tool use works by:

- Giving models a custom system prompt and a default tool call format to use
- Converting `tool` role messages to the `user` role so that chat templates without the `tool` role are compatible
- Converting `assistant` role `tool_calls` into the default tool call format

Results will vary by model.

You can see the default format by running `lms log stream` in your terminal, then sending a chat completion request with `tools` to a model that doesn't have Native tool use support. The default format is subject to change.
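The two message conversions listed above can be sketched as follows. This is only an illustration of the idea: LM Studio's real default format is not shown here and is subject to change, so the `TOOL_RESULT` / `TOOL_CALL` text markers and the function `to_default_format` are hypothetical, and the custom system prompt step is left out.

```python
import json
from typing import Any, Dict, List


def to_default_format(messages: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Rewrite tool-related messages for a chat template that lacks the `tool` role.

    Hypothetical sketch: the text markers below are NOT LM Studio's actual format.
    """
    converted = []
    for msg in messages:
        if msg["role"] == "tool":
            # `tool` role -> `user` role, keeping the result and its call id as text
            text = f"TOOL_RESULT (id={msg['tool_call_id']}): {msg['content']}"
            converted.append({"role": "user", "content": text})
        elif msg["role"] == "assistant" and msg.get("tool_calls"):
            # assistant `tool_calls` -> plain assistant text in a made-up call format
            calls = [
                {"name": c["function"]["name"],
                 "arguments": json.loads(c["function"]["arguments"])}
                for c in msg["tool_calls"]
            ]
            converted.append({"role": "assistant", "content": "TOOL_CALL " + json.dumps(calls)})
        else:
            converted.append(msg)  # system/user/plain assistant messages pass through
    return converted


history = [
    {"role": "user", "content": "Where is order 1017?"},
    {"role": "assistant", "tool_calls": [{"id": "1", "type": "function",
        "function": {"name": "get_delivery_date", "arguments": "{\"order_id\": \"1017\"}"}}]},
    {"role": "tool", "content": "{\"delivery_date\": \"2024-11-20\"}", "tool_call_id": "1"},
]
for m in to_default_format(history):
    print(m["role"], "|", m["content"])
```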

An example of the default tool use format, as shown by `lms log stream`:

```bash
-> % lms log stream
Streaming logs from LM Studio

timestamp: 11/13/2024, 9:35:15 AM
type: llm.prediction.input
modelIdentifier: gemma-2-2b-it
modelPath: lmstudio-community/gemma-2-2b-it-GGUF/gemma-2-2b-it-Q4_K_M.gguf
input: "
```

## Example using `curl`

This example demonstrates a model requesting a tool call using the `curl` utility.

To run this example on Mac or Linux, use any terminal. On Windows, use [Git Bash](https://git-scm.com/download/win).

```bash
curl http://localhost:1234/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "lmstudio-community/qwen2.5-7b-instruct",
    "messages": [{"role": "user", "content": "What dell products do you have under $50 in electronics?"}],
    "tools": [
      {
        "type": "function",
        "function": {
          "name": "search_products",
          "description": "Search the product catalog by various criteria. Use this whenever a customer asks about product availability, pricing, or specifications.",
          "parameters": {
            "type": "object",
            "properties": {
              "query": {
                "type": "string",
                "description": "Search terms or product name"
              },
              "category": {
                "type": "string",
                "description": "Product category to filter by",
                "enum": ["electronics", "clothing", "home", "outdoor"]
              },
              "max_price": {
                "type": "number",
                "description": "Maximum price in dollars"
              }
            },
            "required": ["query"],
            "additionalProperties": false
          }
        }
      }
    ]
  }'
```

All parameters recognized by `/v1/chat/completions` will be honored, and the array of available tools should be provided in the `tools` field.

If the model decides that the user message would be best fulfilled with a tool call, an array of tool call request objects will be provided in the response field, `choices[0].message.tool_calls`.

The `finish_reason` field of that choice (`choices[0].finish_reason`) will also be populated with `"tool_calls"`.
An example response to the above `curl` request will look like:

```json
{
  "id": "chatcmpl-gb1t1uqzefudice8ntxd9i",
  "object": "chat.completion",
  "created": 1730913210,
  "model": "lmstudio-community/qwen2.5-7b-instruct",
  "choices": [
    {
      "index": 0,
      "logprobs": null,
      "finish_reason": "tool_calls",
      "message": {
        "role": "assistant",
        "tool_calls": [
          {
            "id": "365174485",
            "type": "function",
            "function": {
              "name": "search_products",
              "arguments": "{\"query\":\"dell\",\"category\":\"electronics\",\"max_price\":50}"
            }
          }
        ]
      }
    }
  ],
  "usage": {
    "prompt_tokens": 263,
    "completion_tokens": 34,
    "total_tokens": 297
  },
  "system_fingerprint": "lmstudio-community/qwen2.5-7b-instruct"
}
```

In plain English, the above response can be thought of as the model saying:

> "Please call the `search_products` function, with arguments:
>
> - 'dell' for the `query` parameter,
> - 'electronics' for the `category` parameter
> - '50' for the `max_price` parameter
>
> and give me back the results"

The `tool_calls` field will need to be parsed to call actual functions/APIs. The below examples demonstrate how.
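As a client-agnostic sketch of that parsing step, the function below works on the raw JSON response (a plain `dict`, like the one above) and decodes each call's `arguments` string with `json.loads`. The name `extract_tool_calls` is hypothetical, and it checks for the presence of `tool_calls` rather than relying on `finish_reason` alone.

```python
import json
from typing import Any, Dict, List, Tuple


def extract_tool_calls(response: Dict[str, Any]) -> List[Tuple[str, str, Dict[str, Any]]]:
    """Return (call_id, function_name, parsed_arguments) for each requested tool call.

    Returns an empty list when the model answered in plain text instead
    (in that case finish_reason is not "tool_calls").
    """
    message = response["choices"][0]["message"]
    calls = []
    for call in message.get("tool_calls") or []:
        # `arguments` is a JSON-encoded string, so it must be decoded before use
        args = json.loads(call["function"]["arguments"] or "{}")
        calls.append((call["id"], call["function"]["name"], args))
    return calls


# Trimmed-down version of the example response shown above
response = {
    "choices": [{
        "finish_reason": "tool_calls",
        "message": {
            "role": "assistant",
            "tool_calls": [{
                "id": "365174485",
                "type": "function",
                "function": {
                    "name": "search_products",
                    "arguments": "{\"query\":\"dell\",\"category\":\"electronics\",\"max_price\":50}",
                },
            }],
        },
    }]
}
print(extract_tool_calls(response))
```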

## Examples using `python`

Tool use shines when paired with programming languages like Python, where you can implement the functions specified in the `tools` field to programmatically call them when the model requests.

### Single-turn example

Below is a simple single-turn (model is only called once) example of enabling a model to call a function called `say_hello` that prints a hello greeting to the console:

`single-turn-example.py`

```python
import json

from openai import OpenAI

# Connect to LM Studio
client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")

# Define a simple function
def say_hello(name: str) -> None:
    print(f"Hello, {name}!")

# Tell the AI about our function
tools = [
    {
        "type": "function",
        "function": {
            "name": "say_hello",
            "description": "Says hello to someone",
            "parameters": {
                "type": "object",
                "properties": {
                    "name": {
                        "type": "string",
                        "description": "The person's name"
                    }
                },
                "required": ["name"]
            }
        }
    }
]

# Ask the AI to use our function
response = client.chat.completions.create(
    model="lmstudio-community/qwen2.5-7b-instruct",
    messages=[{"role": "user", "content": "Can you say hello to Bob the Builder?"}],
    tools=tools
)

# Get the name the AI wants to use a tool to say hello to
# (Assumes the AI has requested a tool call and that tool call is say_hello)
tool_call = response.choices[0].message.tool_calls[0]
# The arguments arrive as a JSON string: parse them with json.loads, never eval
name = json.loads(tool_call.function.arguments)["name"]

# Actually call the say_hello function
say_hello(name)  # Prints: Hello, Bob the Builder!
```

Running this script from the console should yield results like:

```xml
-> % python single-turn-example.py
Hello, Bob the Builder!
```

Play around with the name in

```python
messages=[{"role": "user", "content": "Can you say hello to Bob the Builder?"}]
```

to see the model call the `say_hello` function with different names.

### Multi-turn example

Now for a slightly more complex example.

In this example, we'll:

1. Enable the model to call a `get_delivery_date` function
2. Hand the result of calling that function back to the model, so that it can fulfill the user's request in plain text

`multi-turn-example.py`

```python
from datetime import datetime, timedelta
import json
import random

from openai import OpenAI

# Point to the local server
client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")
model = "lmstudio-community/qwen2.5-7b-instruct"


def get_delivery_date(order_id: str) -> datetime:
    # Generate a random delivery date between 1 and 14 days from now
    # in a real-world scenario, this function would query a database or API
    today = datetime.now()
    random_days = random.randint(1, 14)
    delivery_date = today + timedelta(days=random_days)
    print(
        f"\nget_delivery_date function returns delivery date:\n\n{delivery_date}",
        flush=True,
    )
    return delivery_date


tools = [
    {
        "type": "function",
        "function": {
            "name": "get_delivery_date",
            "description": "Get the delivery date for a customer's order. Call this whenever you need to know the delivery date, for example when a customer asks 'Where is my package'",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {
                        "type": "string",
                        "description": "The customer's order ID.",
                    },
                },
                "required": ["order_id"],
                "additionalProperties": False,
            },
        },
    }
]

messages = [
    {
        "role": "system",
        "content": "You are a helpful customer support assistant. Use the supplied tools to assist the user.",
    },
    {
        "role": "user",
        "content": "Give me the delivery date and time for order number 1017",
    },
]

# LM Studio
response = client.chat.completions.create(
    model=model,
    messages=messages,
    tools=tools,
)

print("\nModel response requesting tool call:\n", flush=True)
print(response, flush=True)

# Extract the arguments for get_delivery_date
# Note this code assumes we have already determined that the model generated a function call.
tool_call = response.choices[0].message.tool_calls[0]
arguments = json.loads(tool_call.function.arguments)
order_id = arguments.get("order_id")

# Call the get_delivery_date function with the extracted order_id
delivery_date = get_delivery_date(order_id)

assistant_tool_call_request_message = {
    "role": "assistant",
    "tool_calls": [
        {
            "id": response.choices[0].message.tool_calls[0].id,
            "type": response.choices[0].message.tool_calls[0].type,
            "function": response.choices[0].message.tool_calls[0].function,
        }
    ],
}

# Create a message containing the result of the function call
function_call_result_message = {
    "role": "tool",
    "content": json.dumps(
        {
            "order_id": order_id,
            "delivery_date": delivery_date.strftime("%Y-%m-%d %H:%M:%S"),
        }
    ),
    "tool_call_id": response.choices[0].message.tool_calls[0].id,
}

# Prepare the chat completion call payload
completion_messages_payload = [
    messages[0],
    messages[1],
    assistant_tool_call_request_message,
    function_call_result_message,
]

# Call the OpenAI API's chat completions endpoint to send the tool call result back to the model
# LM Studio
response = client.chat.completions.create(
    model=model,
    messages=completion_messages_payload,
)

print("\nFinal model response with knowledge of the tool call result:\n", flush=True)
print(response.choices[0].message.content, flush=True)
```

### Advanced agent example

`agent-chat-example.py`

```python
import json
from urllib.parse import urlparse
import webbrowser
from datetime import datetime
import os

from openai import OpenAI

# Point to the local server
client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")
model = "lmstudio-community/qwen2.5-7b-instruct"


def is_valid_url(url: str) -> bool:
    try:
        result = urlparse(url)
        return bool(result.netloc)  # Returns True if there's a valid network location
    except Exception:
        return False


def open_safe_url(url: str) -> dict:
    # List of allowed domains (expand as needed)
    SAFE_DOMAINS = {
        "lmstudio.ai",
        "github.com",
        "google.com",
        "wikipedia.org",
        "weather.com",
        "stackoverflow.com",
        "python.org",
        "docs.python.org",
    }

    try:
        # Add http:// if no scheme is present
        if not url.startswith(('http://', 'https://')):
            url = 'http://' + url

        # Validate URL format
        if not is_valid_url(url):
            return {"status": "error", "message": f"Invalid URL format: {url}"}

        # Parse the URL and check domain
        parsed_url = urlparse(url)
        domain = parsed_url.netloc.lower()
        base_domain = ".".join(domain.split(".")[-2:])

        if base_domain in SAFE_DOMAINS:
            webbrowser.open(url)
            return {"status": "success", "message": f"Opened {url} in browser"}
        else:
            return {
                "status": "error",
                "message": f"Domain {domain} not in allowed list",
            }
    except Exception as e:
        return {"status": "error", "message": str(e)}


def get_current_time() -> dict:
    """Get the current system time with timezone information"""
    try:
        current_time = datetime.now()
        timezone = datetime.now().astimezone().tzinfo
        formatted_time = current_time.strftime("%Y-%m-%d %H:%M:%S %Z")
        return {
            "status": "success",
            "time": formatted_time,
            "timezone": str(timezone),
            "timestamp": current_time.timestamp(),
        }
    except Exception as e:
        return {"status": "error", "message": str(e)}


def analyze_directory(path: str = ".") -> dict:
    """Count and categorize files in a directory"""
    try:
        stats = {
            "total_files": 0,
            "total_dirs": 0,
            "file_types": {},
            "total_size_bytes": 0,
        }

        for entry in os.scandir(path):
            if entry.is_file():
                stats["total_files"] += 1
                ext = os.path.splitext(entry.name)[1].lower() or "no_extension"
                stats["file_types"][ext] = stats["file_types"].get(ext, 0) + 1
                stats["total_size_bytes"] += entry.stat().st_size
            elif entry.is_dir():
                stats["total_dirs"] += 1
                # Add size of directory contents
                for root, _, files in os.walk(entry.path):
                    for file in files:
                        try:
                            stats["total_size_bytes"] += os.path.getsize(os.path.join(root, file))
                        except (OSError, FileNotFoundError):
                            continue

        return {"status": "success", "stats": stats, "path": os.path.abspath(path)}
    except Exception as e:
        return {"status": "error", "message": str(e)}


tools = [
    {
        "type": "function",
        "function": {
            "name": "open_safe_url",
            "description": "Open a URL in the browser if it's deemed safe",
            "parameters": {
                "type": "object",
                "properties": {
                    "url": {
                        "type": "string",
                        "description": "The URL to open",
                    },
                },
                "required": ["url"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "get_current_time",
            "description": "Get the current system time with timezone information",
            "parameters": {
                "type": "object",
                "properties": {},
                "required": [],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "analyze_directory",
            "description": "Analyze the contents of a directory, counting files and folders",
            "parameters": {
                "type": "object",
                "properties": {
                    "path": {
                        "type": "string",
                        "description": "The directory path to analyze. Defaults to current directory if not specified.",
                    },
                },
                "required": [],
            },
        },
    },
]


def process_tool_calls(response, messages):
    """Process multiple tool calls and return the final response (messages is updated in place)"""
    # Get all tool calls from the response
    tool_calls = response.choices[0].message.tool_calls

    # Create the assistant message with tool calls
    assistant_tool_call_message = {
        "role": "assistant",
        "tool_calls": [
            {
                "id": tool_call.id,
                "type": tool_call.type,
                "function": tool_call.function,
            }
            for tool_call in tool_calls
        ],
    }

    # Add the assistant's tool call message to the history
    messages.append(assistant_tool_call_message)

    # Process each tool call and collect results
    tool_results = []
    for tool_call in tool_calls:
        # For functions with no arguments, use empty dict
        arguments = (
            json.loads(tool_call.function.arguments)
            if tool_call.function.arguments.strip()
            else {}
        )

        # Determine which function to call based on the tool call name
        if tool_call.function.name == "open_safe_url":
            result = open_safe_url(arguments["url"])
        elif tool_call.function.name == "get_current_time":
            result = get_current_time()
        elif tool_call.function.name == "analyze_directory":
            path = arguments.get("path", ".")
            result = analyze_directory(path)
        else:
            # llm tried to call a function that doesn't exist, skip
            continue

        # Add the result message
        tool_result_message = {
            "role": "tool",
            "content": json.dumps(result),
            "tool_call_id": tool_call.id,
        }
        tool_results.append(tool_result_message)
        messages.append(tool_result_message)

    # Get the final response
    final_response = client.chat.completions.create(
        model=model,
        messages=messages,
    )

    return final_response


def chat():
    messages = [
        {
            "role": "system",
            "content": "You are a helpful assistant that can open safe web links, tell the current time, and analyze directory contents. Use these capabilities whenever they might be helpful.",
        }
    ]

    print(
        "Assistant: Hello! I can help you open safe web links, tell you the current time, and analyze directory contents. What would you like me to do?"
    )
    print("(Type 'quit' to exit)")

    while True:
        # Get user input
        user_input = input("\nYou: ").strip()

        # Check for quit command
        if user_input.lower() == "quit":
            print("Assistant: Goodbye!")
            break

        # Add user message to conversation
        messages.append({"role": "user", "content": user_input})

        try:
            # Get initial response
            response = client.chat.completions.create(
                model=model,
                messages=messages,
                tools=tools,
            )

            # Check if the response includes tool calls
            if response.choices[0].message.tool_calls:
                # Process all tool calls and get final response
                final_response = process_tool_calls(response, messages)
                print("\nAssistant:", final_response.choices[0].message.content)

                # Add assistant's final response to messages
                messages.append(
                    {
                        "role": "assistant",
                        "content": final_response.choices[0].message.content,
                    }
                )
            else:
                # If no tool call, just print the response
                print("\nAssistant:", response.choices[0].message.content)

                # Add assistant's response to messages
                messages.append(
                    {
                        "role": "assistant",
                        "content": response.choices[0].message.content,
                    }
                )

        except Exception as e:
            print(f"\nAn error occurred: {str(e)}")
            exit(1)


if __name__ == "__main__":
    chat()
```
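The `if`/`elif` chain in `process_tool_calls` works, but one common alternative design is a dispatch table that maps tool names to Python callables. The sketch below is an assumption, not part of the original example: `TOOL_REGISTRY`, `run_tool_call`, `echo`, and the simplified stand-in `get_current_time` are hypothetical. Unlike the example above, which skips unknown tools, it returns an error result so every requested call still gets a `tool` message with a matching `tool_call_id`.

```python
import json
from datetime import datetime
from typing import Any, Callable, Dict


def get_current_time() -> dict:
    # Hypothetical, simplified stand-in for the example's get_current_time tool
    return {"status": "success", "time": datetime.now().strftime("%H:%M:%S")}


def echo(text: str) -> dict:
    # Hypothetical tool used to show argument passing
    return {"status": "success", "echo": text}


TOOL_REGISTRY: Dict[str, Callable[..., dict]] = {
    "get_current_time": get_current_time,
    "echo": echo,
}


def run_tool_call(call_id: str, name: str, arguments: str) -> Dict[str, Any]:
    """Execute one tool call and wrap the result as a `tool` role message."""
    func = TOOL_REGISTRY.get(name)
    if func is None:
        result = {"status": "error", "message": f"Unknown tool: {name}"}
    else:
        try:
            # Empty argument strings (no-parameter tools) become an empty dict
            kwargs = json.loads(arguments) if arguments.strip() else {}
            result = func(**kwargs)
        except (json.JSONDecodeError, TypeError) as exc:
            # Malformed JSON, or arguments that don't match the function signature
            result = {"status": "error", "message": str(exc)}
    return {"role": "tool", "content": json.dumps(result), "tool_call_id": call_id}


print(run_tool_call("call-1", "echo", '{"text": "hi"}'))
print(run_tool_call("call-2", "unknown_tool", "{}"))
```

Adding a new tool then only requires a function and one registry entry, alongside its schema in the `tools` list.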

`tool-streaming-chatbot.py`

```python
from openai import OpenAI
import time

client = OpenAI(base_url="http://127.0.0.1:1234/v1", api_key="lm-studio")
MODEL = "lmstudio-community/qwen2.5-7b-instruct"

TIME_TOOL = {
    "type": "function",
    "function": {
        "name": "get_current_time",
        "description": "Get the current time, only if asked",
        "parameters": {"type": "object", "properties": {}},
    },
}


def get_current_time():
    return {"time": time.strftime("%H:%M:%S")}


def process_stream(stream, add_assistant_label=True):
    """Handle streaming responses from the API"""
    collected_text = ""
    tool_calls = []
    first_chunk = True

    for chunk in stream:
        delta = chunk.choices[0].delta

        # Handle regular text output
        if delta.content:
            if first_chunk:
                print()
                if add_assistant_label:
                    print("Assistant:", end=" ", flush=True)
                first_chunk = False
            print(delta.content, end="", flush=True)
            collected_text += delta.content

        # Handle tool calls
        elif delta.tool_calls:
            for tc in delta.tool_calls:
                if len(tool_calls) <= tc.index:
                    tool_calls.append({
                        "id": "", "type": "function",
                        "function": {"name": "", "arguments": ""}
                    })
                tool_calls[tc.index] = {
                    "id": (tool_calls[tc.index]["id"] + (tc.id or "")),
                    "type": "function",
                    "function": {
                        "name": (tool_calls[tc.index]["function"]["name"] + (tc.function.name or "")),
                        "arguments": (tool_calls[tc.index]["function"]["arguments"] + (tc.function.arguments or ""))
                    }
                }
    return collected_text, tool_calls


def chat_loop():
    messages = []
    print("Assistant: Hi! I am an AI agent empowered with the ability to tell the current time (Type 'quit' to exit)")

    while True:
        user_input = input("\nYou: ").strip()
        if user_input.lower() == "quit":
            break

        messages.append({"role": "user", "content": user_input})

        # Get initial response
        response_text, tool_calls = process_stream(
            client.chat.completions.create(
                model=MODEL,
                messages=messages,
                tools=[TIME_TOOL],
                stream=True,
                temperature=0.2
            )
        )

        if not tool_calls:
            print()

        text_in_first_response = len(response_text) > 0
        if text_in_first_response:
            messages.append({"role": "assistant", "content": response_text})

        # Handle tool calls if any
        if tool_calls:
            tool_name = tool_calls[0]["function"]["name"]
            print()
            if not text_in_first_response:
                print("Assistant:", end=" ", flush=True)
            print(f"**Calling Tool: {tool_name}**")
            messages.append({"role": "assistant", "tool_calls": tool_calls})

            # Execute tool calls
            for tool_call in tool_calls:
                if tool_call["function"]["name"] == "get_current_time":
                    result = get_current_time()
                    messages.append({
                        "role": "tool",
                        "content": str(result),
                        "tool_call_id": tool_call["id"]
                    })

            # Get final response after tool execution
            final_response, _ = process_stream(
                client.chat.completions.create(
                    model=MODEL,
                    messages=messages,
                    stream=True
                ),
                add_assistant_label=False
            )

            if final_response:
                print()
                messages.append({"role": "assistant", "content": final_response})


if __name__ == "__main__":
    chat_loop()
```
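The tool-call branch of `process_stream` above stitches streamed fragments together by their `index`. The same merging idea is isolated below as a pure function over plain dicts, so it can be tested without a server. The dict-shaped fragments and the name `merge_tool_call_deltas` are assumptions that mirror the attributes the example reads (`index`, `id`, `function.name`, `function.arguments`); using `while` instead of `if` when growing the list is a small deliberate change so that a skipped index cannot cause an `IndexError`.

```python
import json
from typing import Any, Dict, List


def merge_tool_call_deltas(deltas: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Concatenate streamed tool-call fragments into complete tool calls, keyed by index."""
    calls: List[Dict[str, Any]] = []
    for delta in deltas:
        idx = delta["index"]
        # Grow the list until the slot for this index exists
        while len(calls) <= idx:
            calls.append({"id": "", "type": "function", "function": {"name": "", "arguments": ""}})
        fn = delta.get("function") or {}
        # Each field arrives in pieces (or not at all), so append whatever is present
        calls[idx]["id"] += delta.get("id") or ""
        calls[idx]["function"]["name"] += fn.get("name") or ""
        calls[idx]["function"]["arguments"] += fn.get("arguments") or ""
    return calls


# Hand-written fragments shaped like successive `delta.tool_calls` entries
fragments = [
    {"index": 0, "id": "123", "function": {"name": "get_current_time", "arguments": ""}},
    {"index": 0, "function": {"arguments": "{}"}},
    {"index": 1, "id": "456", "function": {"name": "echo", "arguments": "{\"te"}},
    {"index": 1, "function": {"arguments": "xt\": \"hi\"}"}},
]
merged = merge_tool_call_deltas(fragments)
print(merged[1]["function"]["name"], json.loads(merged[1]["function"]["arguments"]))
```

Only once the stream has ended is each `arguments` string guaranteed to be complete JSON, which is why parsing happens after merging.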

### OpenAI-like API endpoints

LM Studio accepts requests on several OpenAI endpoints and returns OpenAI-like response objects.

#### Supported endpoints

```
GET  /v1/models
POST /v1/chat/completions
POST /v1/embeddings
POST /v1/completions
```

###### See below for more info about each endpoint
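As a stdlib-only sketch of calling one of these endpoints, the code below lists model identifiers from `GET /v1/models`. It assumes the OpenAI-style list shape (`{"object": "list", "data": [{"id": ...}, ...]}`); `list_model_ids` and `parse_model_ids` are hypothetical names, and the parsing is split out so it can be checked without a running server.

```python
import json
import urllib.request
from typing import Any, Dict, List


def parse_model_ids(payload: Dict[str, Any]) -> List[str]:
    """Extract model identifiers from an OpenAI-style list response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_model_ids(base_url: str = "http://localhost:1234/v1", timeout: float = 5.0) -> List[str]:
    """Query GET {base_url}/models on a running server and return the model ids."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))


# Offline check with a hand-written payload (not real server output)
sample = {"object": "list", "data": [{"id": "qwen2.5-7b-instruct", "object": "model"}]}
print(parse_model_ids(sample))
```

The returned identifiers are the values to use in the `model` field of the other endpoints.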

### Re-using an existing OpenAI client

```lms_protip
You can reuse existing OpenAI clients (in Python, JS, C#, etc.) by changing the "base URL" property to point to your LM Studio server instead of OpenAI's servers.
```

#### Switching the `base url` to point to LM Studio

###### Note: The following examples assume the server port is `1234`

##### Python

```diff
from openai import OpenAI

client = OpenAI(
+    base_url="http://localhost:1234/v1"
)

# ... the rest of your code ...
```

##### Typescript

```diff
import OpenAI from 'openai';

const client = new OpenAI({
+  baseURL: "http://localhost:1234/v1"
});

// ... the rest of your code ...
```

##### cURL

```diff
- curl https://api.openai.com/v1/chat/completions \
+ curl http://localhost:1234/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
-     "model": "gpt-4o-mini",
+     "model": "use the model identifier from LM Studio here",
      "messages": [{"role": "user", "content": "Say this is a test!"}],
      "temperature": 0.7
  }'
```
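The cURL diff shows that moving a request from OpenAI to LM Studio changes only the URL and the model identifier. The sketch below builds the same `POST /chat/completions` request with Python's standard library, taking the base URL as a parameter. It assumes the default port `1234` and an OpenAI-style response (`choices[0].message.content`). The helpers `build_chat_request` and `send` are hypothetical names.

```python
import json
import urllib.request

# Assumption: LM Studio's server on the default port. Pointing this at
# "https://api.openai.com/v1" would also need an Authorization header.
LMSTUDIO_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(base_url: str, model: str, prompt: str,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build the same POST /chat/completions request as the cURL example."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With a model loaded, `send(build_chat_request(LMSTUDIO_BASE_URL, "your-model-identifier", "Say this is a test!"))` should return the reply text. The model identifier shown is a placeholder.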

### Endpoints overview

#### `/v1/models`

- `GET` request
- Lists the currently **loaded** models.

##### cURL example

```bash
curl http://localhost:1234/v1/models
```

#### `/v1/chat/completions`

- `POST` request
- Send a chat history and receive the assistant's response
- Prompt template is applied automatically
- You can provide inference parameters such as temperature in the payload. See [supported parameters](#supported-payload-parameters)
- See [OpenAI's documentation](https://platform.openai.com/docs/api-reference/chat) for more information
- As always, keep a terminal window open with [`lms log stream`](/docs/cli/log-stream) to see what input the model receives

##### Python example

```python
# Example: reuse your existing OpenAI setup
from openai import OpenAI

# Point to the local server. The OpenAI client requires an api_key,
# so a placeholder value is passed here.
client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")

completion = client.chat.completions.create(
    model="model-identifier",
    messages=[
        {"role": "system", "content": "Always answer in rhymes."},
        {"role": "user", "content": "Introduce yourself."}
    ],
    temperature=0.7,
)

print(completion.choices[0].message.content)
```

#### `/v1/embeddings`

- `POST` request
- Send a string or array of strings and get an array of text embeddings (vectors of floating-point numbers)
- See [OpenAI's documentation](https://platform.openai.com/docs/api-reference/embeddings) for more information

##### Python example

```python
# Make sure to `pip install openai` first
from openai import OpenAI

client = OpenAI(base_url="http://localhost:1234/v1", api_key="lm-studio")

def get_embedding(text, model="model-identifier"):
    # Newlines are replaced with spaces before embedding
    text = text.replace("\n", " ")
    return client.embeddings.create(input=[text], model=model).data[0].embedding

print(get_embedding("Once upon a time, there was a cat."))
```

#### `/v1/completions`

```lms_warning
This OpenAI-like endpoint is no longer supported by OpenAI. LM Studio continues to support it.

Using this endpoint with chat-tuned models might result in unexpected behavior such as extraneous role tokens being emitted by the model.

For best results, use a base model.
```

- `POST` request
- Send a string and get the model's continuation of that string
- See [supported payload parameters](#supported-payload-parameters)
- Prompt template will NOT be applied, even if the model has one
- See [OpenAI's documentation](https://platform.openai.com/docs/api-reference/completions) for more information
- As always, keep a terminal window open with [`lms log stream`](/docs/cli/log-stream) to see what input the model receives
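`/v1/completions` differs from `/v1/chat/completions` in two main ways. You send a raw `prompt` string instead of `messages`, and the continuation comes back in `choices[0].text` rather than `choices[0].message.content`. The offline sketch below shows both sides. It assumes a base model is loaded and that responses follow OpenAI's legacy completions shape. The helpers `build_completion_payload` and `completion_text`, and the sample response text, are hypothetical.

```python
def build_completion_payload(prompt: str, model: str = "model-identifier",
                             max_tokens: int = 64, stop=None) -> dict:
    """Body for POST /v1/completions.

    The prompt is sent verbatim: no prompt template is applied,
    so format it the way the model expects.
    """
    payload = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    if stop:
        payload["stop"] = stop
    return payload


def completion_text(response: dict) -> str:
    """Return the continuation (choices[0].text, not message.content)."""
    return response["choices"][0]["text"]


payload = build_completion_payload("The capital of France is", stop=["\n"])

# A response shaped like OpenAI's legacy completions; the text is made up.
sample = {"object": "text_completion",
          "choices": [{"index": 0, "text": " Paris.", "finish_reason": "stop"}]}
continuation = completion_text(sample)
```

To run it against a live server, POST `payload` as JSON to `http://localhost:1234/v1/completions` with any HTTP client, then pass the parsed response to `completion_text`.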

### Supported payload parameters

For an explanation of each parameter, see https://platform.openai.com/docs/api-reference/chat/create. `top_k` and `repeat_penalty` are not part of OpenAI's API, so they are not described there.

```py
model
top_p
top_k
messages
temperature
max_tokens
stream
stop
presence_penalty
frequency_penalty
logit_bias
repeat_penalty
seed
```
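OpenAI's API does not define `top_k` or `repeat_penalty`, so the OpenAI Python SDK's `create()` method has no keyword arguments for them. The SDK does accept an `extra_body` dict, whose keys are merged into the JSON request body. The sketch below splits a parameter dict into regular keyword arguments and an `extra_body` dict. It assumes these two keys are the only non-OpenAI parameters you send. `split_params` and `LMSTUDIO_ONLY` are hypothetical names, and the values are illustrative, not recommended defaults.

```python
# Parameters from the list above that OpenAI's own API does not define.
# Assumption: these are the only LM Studio-specific keys being sent.
LMSTUDIO_ONLY = {"top_k", "repeat_penalty"}


def split_params(params: dict) -> tuple:
    """Split sampling params into OpenAI SDK kwargs and an extra_body dict."""
    kwargs = {k: v for k, v in params.items() if k not in LMSTUDIO_ONLY}
    extra = {k: v for k, v in params.items() if k in LMSTUDIO_ONLY}
    return kwargs, extra


params = {"temperature": 0.7, "top_p": 0.9, "top_k": 40,
          "repeat_penalty": 1.1, "max_tokens": 128, "seed": 42}
kwargs, extra_body = split_params(params)
print(kwargs)      # {'temperature': 0.7, 'top_p': 0.9, 'max_tokens': 128, 'seed': 42}
print(extra_body)  # {'top_k': 40, 'repeat_penalty': 1.1}
```

The result can then be passed as `client.chat.completions.create(model="model-identifier", messages=messages, extra_body=extra_body, **kwargs)`. Raw HTTP clients such as cURL need no split and can put every key directly in the JSON payload.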