Documentation

Core

LM Studio REST API

OpenAI Compatible Endpoints

Anthropic Compatible Endpoints

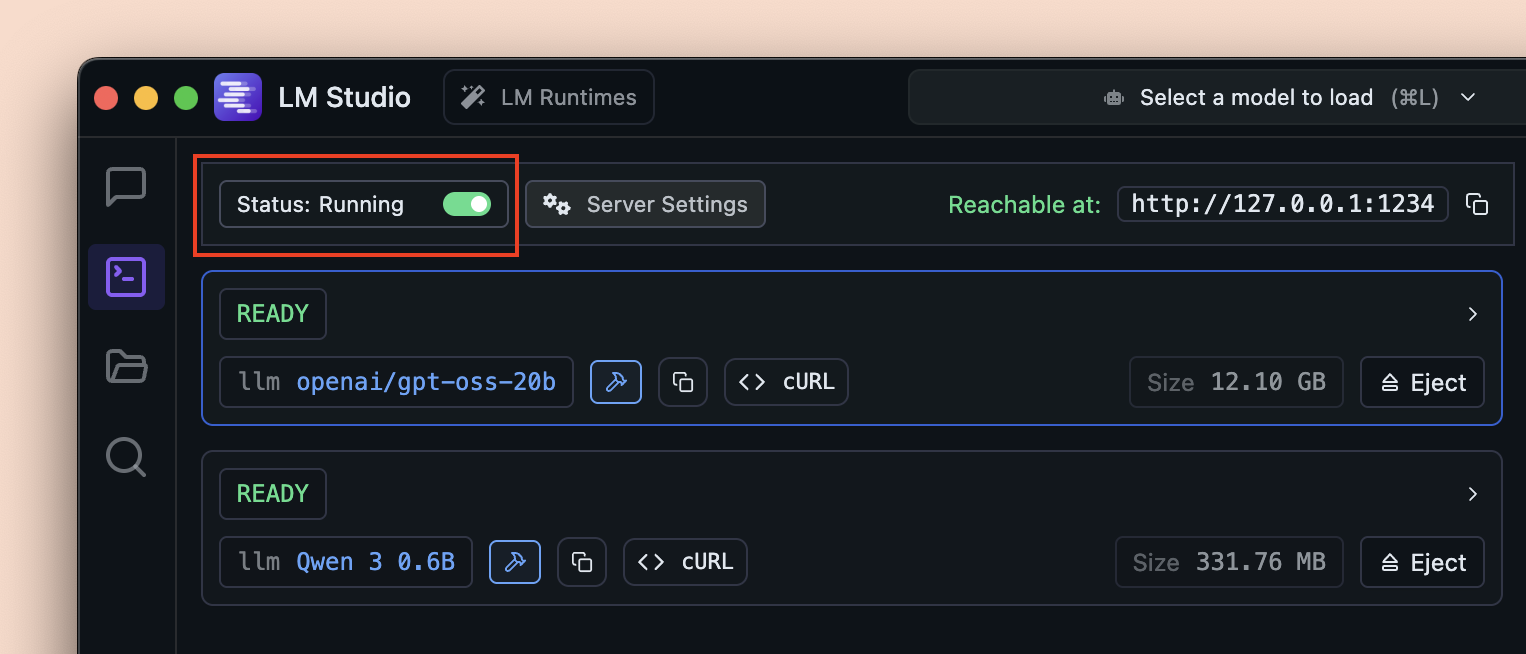

You can serve local LLMs from LM Studio's Developer tab, either on localhost or on the network.

LM Studio's APIs can be used through the REST API, through client libraries such as lmstudio-js and lmstudio-python, and through the OpenAI-compatible and Anthropic-compatible endpoints.

Load and serve LLMs from LM Studio

Running the server

To run the server, open the Developer tab in LM Studio and toggle the "Start server" switch.

Start the LM Studio API Server

Alternatively, you can use lms (LM Studio's CLI) to start the server from your terminal:

lms server start
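
Once the server is started, a quick way to confirm it is reachable is to ask it for its model list. The sketch below is a minimal example using only the Python standard library. It assumes the server listens on `http://localhost:1234` and exposes an OpenAI-style `/v1/models` route; neither is stated on this page, so check the address shown in the Developer tab. The helper names (`models_url`, `extract_model_ids`, `list_models`) are hypothetical and chosen for illustration.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional

# Assumption: the server listens on localhost port 1234. Adjust this to
# the address shown in the Developer tab.
BASE_URL = "http://localhost:1234"


def models_url(base_url: str = BASE_URL) -> str:
    """Build the URL of the (assumed) OpenAI-style model listing route."""
    return base_url.rstrip("/") + "/v1/models"


def extract_model_ids(payload: dict) -> List[str]:
    """Collect ids from an OpenAI-style body: {"data": [{"id": ...}, ...]}."""
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


def list_models(base_url: str = BASE_URL, timeout: float = 5.0) -> Optional[List[str]]:
    """Return model ids if the server answers, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(models_url(base_url), timeout=timeout) as resp:
            return extract_model_ids(json.load(resp))
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a body that is not valid JSON.
        return None


if __name__ == "__main__":
    ids = list_models()
    if ids is None:
        print("Server not reachable; has it been started?")
    else:
        print("Available models:", ids)
```

If the script reports that the server is not reachable, verify that the "Start server" switch is on or that `lms server start` completed without errors.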

API options

- LM Studio REST API

- TypeScript SDK - lmstudio-js

- Python SDK - lmstudio-python

- OpenAI-compatible endpoints

- Anthropic-compatible endpoints
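
As an illustration of the OpenAI-compatible option, the following sketch sends a single chat message with the Python standard library. It is written under several assumptions that this page does not confirm: that the server is at `http://localhost:1234`, that it accepts OpenAI-style requests at `/v1/chat/completions`, and that responses follow the OpenAI `choices[0].message.content` shape. `MODEL_ID` is a hypothetical placeholder; replace it with the identifier of a model you have loaded. The function names are illustrative only.

```python
import json
import urllib.request

# Assumptions: default local address and an OpenAI-style chat route.
BASE_URL = "http://localhost:1234"
MODEL_ID = "your-model-id"  # hypothetical placeholder, not a real model name


def build_chat_request(prompt: str, model: str = MODEL_ID,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a POST request carrying one user message in OpenAI chat format."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def first_reply(response_body: dict) -> str:
    """Extract the assistant text from an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"]


def ask(prompt: str, model: str = MODEL_ID, base_url: str = BASE_URL,
        timeout: float = 60.0) -> str:
    """Send the prompt to the running server and return the first reply."""
    request = build_chat_request(prompt, model=model, base_url=base_url)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return first_reply(json.load(resp))
```

With the server running and a model loaded, `print(ask("Say hello in one sentence."))` would print the model's reply. Keeping request construction separate from the network call makes it possible to inspect the payload without a live server.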
