Qwen3-Coder

A state-of-the-art Mixture-of-Experts coding model for local use, with native support for a 256K-token context length. Available in 30B (3B active) and 480B (35B active) sizes.

Memory Requirements

To run the smallest Qwen3-Coder, you need at least 15 GB of RAM. The largest one may require up to 250 GB.
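The figures above are roughly what a back-of-the-envelope estimate gives if the weights are stored at about 4 bits each. The sketch below shows that arithmetic. The 4-bit quantization level is an assumption (this page does not state which quantization the figures refer to), and the estimate covers weights only, not the KV cache or runtime overhead. All experts of a Mixture-of-Experts model typically have to be resident in memory, so the total parameter count is used rather than the active count.

```python
def weight_memory_gb(total_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return total_params * bits_per_weight / 8 / 1e9

# Parameter counts come from this page; 4 bits per weight is an assumed quantization level.
small = weight_memory_gb(30.5e9, 4)   # Qwen3-Coder-30B-A3B
large = weight_memory_gb(480e9, 4)    # Qwen3-Coder-480B-A35B

print(f"30B at 4-bit:  ~{small:.2f} GB")
print(f"480B at 4-bit: ~{large:.2f} GB")
```

Higher-precision quantizations scale the result linearly: doubling the bits per weight doubles the estimate.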

Capabilities

Qwen3-Coder models support tool use. They are available in the GGUF and MLX formats.
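To illustrate what tool use looks like in practice, the sketch below builds a chat request body that advertises one tool using the widely used OpenAI-style `tools` schema. It only constructs and prints the JSON; nothing is sent. The tool (`read_file`), the model identifier, and the assumption that your local runtime accepts this request shape are all illustrative and not taken from this page.

```python
import json

# Hypothetical tool: the name, description, and schema are illustrative only.
read_file_tool = {
    "type": "function",
    "function": {
        "name": "read_file",
        "description": "Return the contents of a text file in the workspace.",
        "parameters": {
            "type": "object",
            "properties": {
                "path": {"type": "string", "description": "Relative file path"},
            },
            "required": ["path"],
        },
    },
}

request_body = {
    "model": "qwen3-coder-30b",  # placeholder identifier, not a confirmed model name
    "messages": [{"role": "user", "content": "Summarize what setup.py does."}],
    "tools": [read_file_tool],
}

payload = json.dumps(request_body, indent=2)
print(payload)
```

A model that decides to call the tool would normally reply with the tool name and JSON arguments instead of plain text; the caller runs the tool and sends the result back in a follow-up message.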

About Qwen3-Coder

Qwen3-Coder is an agentic coding model from Alibaba Qwen. It comes in two sizes:

- Qwen3-Coder-480B-A35B-Instruct: a 480B-parameter Mixture-of-Experts model with 35B active parameters, offering exceptional performance in both coding and agentic tasks.

- Qwen3-Coder-30B-A3B-Instruct: a 30B-parameter Mixture-of-Experts model with 3B active parameters.

Key features

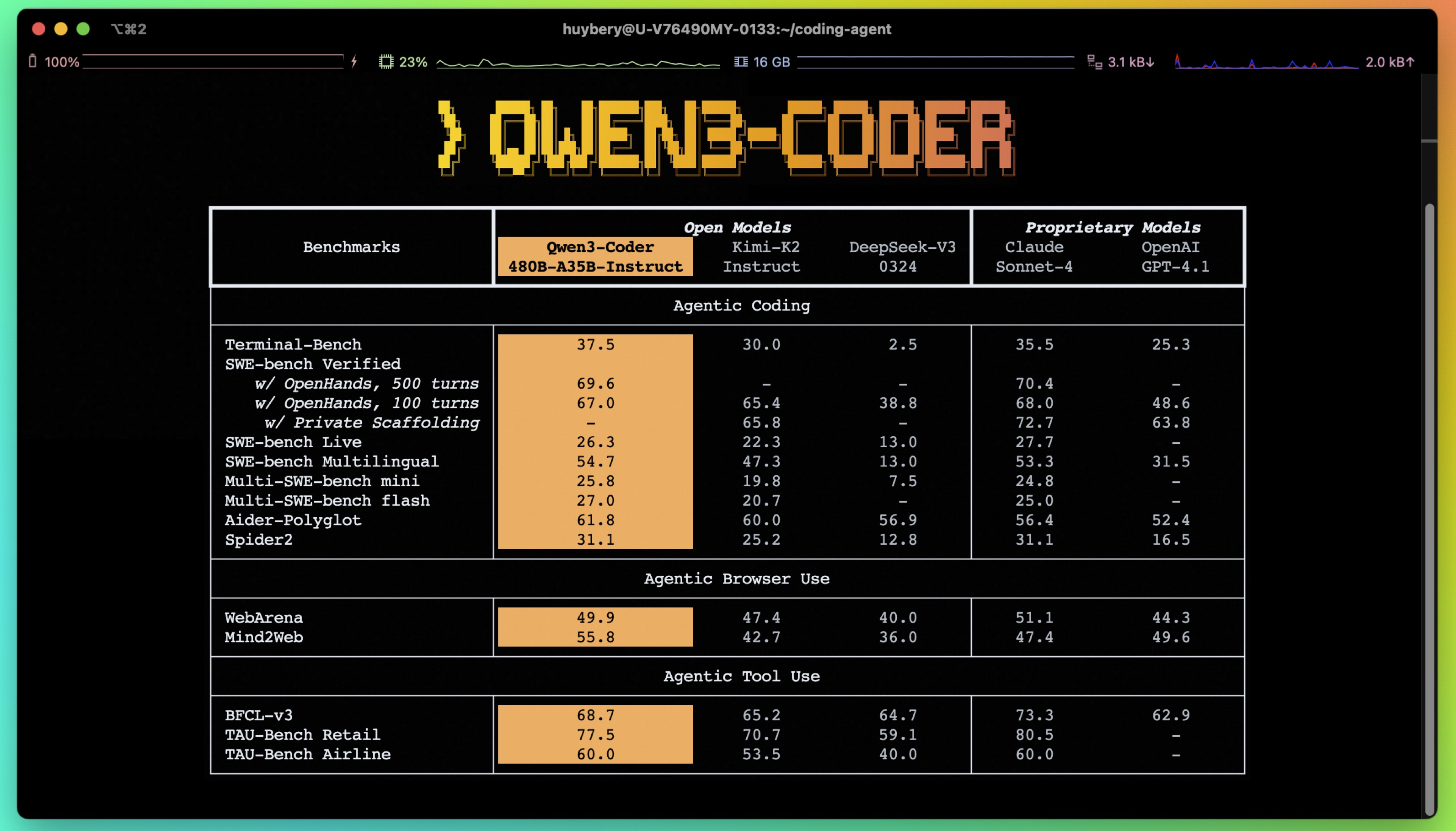

- Strong performance among open models on agentic coding, agentic browser use, and other foundational coding tasks.

- Long-context capabilities, with native support for 256K tokens, extendable up to 1M tokens using YaRN, optimized for repository-scale understanding.

- Agentic coding support for most platforms, such as Qwen Code and CLINE, featuring a specially designed function call format.
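The long-context bullet above implies a fixed scaling ratio between the native and extended windows. The sketch below computes that ratio and places it in a RoPE-scaling fragment. The key names follow a convention used by some inference frameworks and are assumptions here, as is reading "1M" as 2**20 tokens; check your runtime's documentation for the exact option names.

```python
NATIVE_CONTEXT = 262_144      # native context length stated on this page (256K)
TARGET_CONTEXT = 1_048_576    # "1M tokens", taken here as 2**20 (assumption)

# YaRN stretches the positional encoding by the ratio of target to native length.
yarn_factor = TARGET_CONTEXT / NATIVE_CONTEXT

# Hypothetical config fragment: key names are assumptions, not taken from this page.
rope_scaling = {
    "rope_type": "yarn",
    "factor": yarn_factor,
    "original_max_position_embeddings": NATIVE_CONTEXT,
}
print(rope_scaling)
```

Under these assumptions the factor works out to 4. Extending the window also grows the KV cache proportionally, so memory needs rise with it.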

Qwen3-Coder-30B

Qwen3-Coder-30B-A3B-Instruct has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining & Post-training

- Number of Parameters: 30.5B in total and 3.3B activated

- Number of Layers: 48

- Number of Attention Heads (GQA): 32 for Q and 4 for KV

- Number of Experts: 128

- Number of Activated Experts: 8

- Context Length: 262,144 tokens natively.
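The expert counts above mean that each token passes through only 8 of the 128 experts in a layer. The sketch below shows a generic top-k routing step to make that concrete. It is a textbook-style illustration, not a description of Qwen3-Coder's actual router: the random logits stand in for a learned gating network, and the softmax-over-selected-experts normalization is an assumption.

```python
import math
import random

NUM_EXPERTS = 128   # from the list above
TOP_K = 8           # activated experts per token, from the list above

def route(logits: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in top)            # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

random.seed(0)
# Stand-in for the scores a learned router would produce for one token.
router_logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
weights = route(router_logits, TOP_K)

print(f"experts used: {len(weights)} of {NUM_EXPERTS} ({TOP_K / NUM_EXPERTS:.2%})")
```

This sparsity is why the activated parameter count (3.3B) is far below the total (30.5B): the unselected experts contribute no compute for that token.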

Qwen3-Coder-480B

Qwen3-Coder-480B-A35B-Instruct has the following features:

- Type: Causal Language Models

- Training Stage: Pretraining & Post-training

- Number of Parameters: 480B in total and 35B activated

- Number of Layers: 62

- Number of Attention Heads (GQA): 96 for Q and 8 for KV

- Number of Experts: 160

- Number of Activated Experts: 8

- Context Length: 262,144 tokens natively.
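The layer and KV-head counts listed for both models allow a rough estimate of how much memory the attention cache needs at full context. The sketch below does that arithmetic. The head dimension of 128 and the 16-bit cache entries are assumptions (neither is stated on this page), and it assumes every layer keeps a full key/value cache.

```python
from dataclasses import dataclass

@dataclass
class AttentionShape:
    name: str
    layers: int
    kv_heads: int

HEAD_DIM = 128        # assumption: not stated on this page
BYTES_PER_VALUE = 2   # assumption: 16-bit cache entries
CONTEXT = 262_144     # native context length from the lists above

def kv_cache_bytes(shape: AttentionShape, tokens: int) -> int:
    # The factor of 2 covers one key and one value vector per KV head per layer.
    return 2 * shape.layers * shape.kv_heads * HEAD_DIM * BYTES_PER_VALUE * tokens

models = [
    AttentionShape("Qwen3-Coder-30B-A3B", layers=48, kv_heads=4),
    AttentionShape("Qwen3-Coder-480B-A35B", layers=62, kv_heads=8),
]
cache_gib = {m.name: kv_cache_bytes(m, CONTEXT) / 2**30 for m in models}

for name, gib in cache_gib.items():
    print(f"{name}: ~{gib:.0f} GiB of KV cache at {CONTEXT:,} tokens")
```

Under these assumptions the cache alone comes to about 24 GiB and 62 GiB respectively at the full native context. Because grouped-query attention shares each KV head across several query heads, the cache scales with the KV-head count (4 or 8), not the query-head count (32 or 96).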

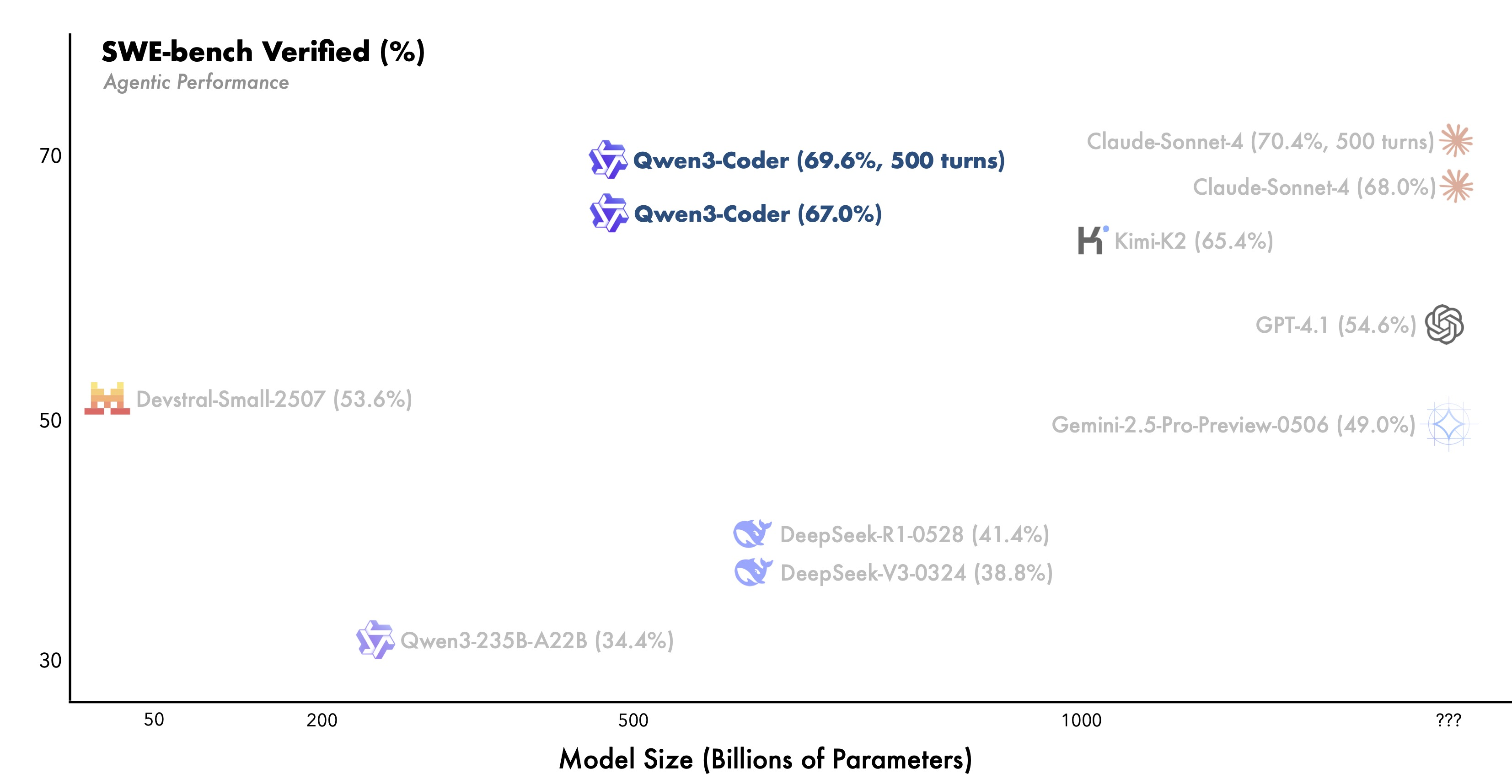

Performance

In real-world software engineering tasks like SWE-Bench, Qwen3-Coder must engage in multi-turn interaction with the environment, involving planning, using tools, receiving feedback, and making decisions. In the post-training phase of Qwen3-Coder, Alibaba Qwen introduced long-horizon RL (Agent RL) to encourage the model to solve real-world tasks through multi-turn interactions using tools. The key challenge of Agent RL lies in environment scaling. To address this, Alibaba Qwen built a scalable system capable of running 20,000 independent environments in parallel, leveraging Alibaba Cloud's infrastructure. The infrastructure provides the necessary feedback for large-scale reinforcement learning and supports evaluation at scale. As a result, Qwen3-Coder achieves state-of-the-art performance among open-source models on SWE-Bench Verified without test-time scaling.
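The multi-turn, many-environment setup described above can be pictured with a toy sketch. Everything in it is hypothetical: `ToyEnvironment`, the trivial policy, and the reward rule are stand-ins chosen only to show the shape of independent rollouts running in parallel, and they say nothing about how Alibaba Qwen's actual system is built.

```python
from concurrent.futures import ThreadPoolExecutor

class ToyEnvironment:
    """Hypothetical stand-in for one sandboxed task: reach a target number."""

    def __init__(self, target: int):
        self.target = target
        self.value = 0

    def step(self, action: int) -> tuple[int, bool]:
        # Apply the action, then return (feedback, done).
        self.value += action
        return self.target - self.value, self.value == self.target

def rollout(target: int, max_turns: int = 50) -> float:
    """Run one multi-turn episode; the reward is 1.0 on success, else 0.0."""
    env = ToyEnvironment(target)
    remaining = target
    for _ in range(max_turns):
        action = 1 if remaining > 0 else -1   # trivial policy standing in for the model
        remaining, done = env.step(action)    # feedback drives the next decision
        if done:
            return 1.0
    return 0.0

# 20 toy environments here; the text above cites 20,000 running in parallel.
with ThreadPoolExecutor(max_workers=8) as pool:
    rewards = list(pool.map(rollout, range(1, 21)))

print(f"success rate: {sum(rewards) / len(rewards):.0%}")
```

Each rollout owns its environment and shares no state with the others, which is the property that lets this kind of workload scale out across many machines.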