phi-4-reasoning


Phi-4-mini-reasoning is a lightweight open model built upon synthetic data, with a focus on high-quality, reasoning-dense data.

Memory Requirements

To run the smallest phi-4-reasoning model, you need at least 3 GB of RAM; the largest may require up to 8 GB.
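As a rough rule of thumb, the RAM needed is about the size of the quantized weights plus some headroom for the KV cache and runtime buffers. The sketch below lands close to the figures above, but only under assumptions that do not come from this page: about 3.8B parameters for Phi-4-mini-reasoning, about 14B for Phi-4-reasoning and Phi-4-reasoning-plus, 4-bit weights, and a hypothetical flat 1 GB overhead. Real usage varies with the quantization, the runtime, and the context length you actually use.

```python
# Rough rule-of-thumb RAM estimate for a quantized model (not an official formula).
# Assumptions: parameter counts below, 4-bit weights, and a hypothetical flat
# overhead covering the KV cache and runtime buffers.

def estimate_memory_gb(params_billions: float, bits_per_weight: float,
                       overhead_gb: float = 1.0) -> float:
    """Return approximate RAM in gigabytes (1 GB = 1e9 bytes)."""
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes / 1e9 + overhead_gb

if __name__ == "__main__":
    # Assumed parameter counts, in billions.
    for name, params in [("Phi-4-mini-reasoning", 3.8), ("Phi-4-reasoning", 14.0)]:
        print(f"{name}: ~{estimate_memory_gb(params, 4):.1f} GB at 4-bit")
```

Raising `bits_per_weight` to 8 roughly doubles the weight term, which is why higher-precision quantizations of the same model need noticeably more memory.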

Capabilities

phi-4-reasoning models support tool use and reasoning. They are available in GGUF and MLX formats.
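Tool use means the model can be offered function definitions and asked to emit structured calls to them. A minimal sketch, assuming the model is served through a local OpenAI-compatible chat-completions endpoint; the URL, the model identifier, and the `evaluate_expression` tool are all hypothetical placeholders, not values from this page.

```python
import json
import urllib.request

# Hypothetical values: replace with whatever your local server actually exposes.
ENDPOINT = "http://localhost:1234/v1/chat/completions"
MODEL_ID = "phi-4-reasoning"

def build_tool_request(question: str) -> dict:
    """Build an OpenAI-style chat-completions payload that offers one tool."""
    # 'evaluate_expression' is a hypothetical tool defined only for this example.
    calculator_tool = {
        "type": "function",
        "function": {
            "name": "evaluate_expression",
            "description": "Evaluate an arithmetic expression and return the result.",
            "parameters": {
                "type": "object",
                "properties": {"expression": {"type": "string"}},
                "required": ["expression"],
            },
        },
    }
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": question}],
        "tools": [calculator_tool],
    }

def send(payload: dict) -> dict:
    """POST the payload to the endpoint and return the decoded JSON response."""
    req = urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)

if __name__ == "__main__":
    payload = build_tool_request("What is 17 * 23?")
    print(json.dumps(payload, indent=2))
    # Calling send(payload) needs a running server; in the OpenAI response format,
    # tool calls appear under response["choices"][0]["message"]["tool_calls"].
```

Your application, not the model, executes the requested tool and sends the result back in a follow-up message.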

About phi-4-reasoning

Phi-4-mini-reasoning is a lightweight open model built upon synthetic data, with a focus on high-quality, reasoning-dense data, and further fine-tuned for more advanced math reasoning. The model belongs to the Phi-4 model family and supports a context length of 128K tokens.
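Reasoning models can produce long chains of thought, so the 128K-token window has to hold both the prompt and the generated output. A minimal budgeting sketch, assuming "128K" means 128 × 1024 = 131,072 tokens (check the model's own configuration) and that token counts come from the model's tokenizer; the helper names are hypothetical.

```python
# Assumed context size: 128 * 1024 tokens.
CONTEXT_TOKENS = 128 * 1024

def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context: int = CONTEXT_TOKENS) -> bool:
    """True if the prompt plus the requested output budget fits in the window."""
    return prompt_tokens + max_new_tokens <= context

def max_output_budget(prompt_tokens: int, context: int = CONTEXT_TOKENS) -> int:
    """Largest output budget left after the prompt (never negative)."""
    return max(context - prompt_tokens, 0)

if __name__ == "__main__":
    print(fits_in_context(100_000, 32_000))  # False: 132,000 > 131,072
    print(max_output_budget(100_000))        # 31072
```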

Technical report: https://arxiv.org/abs/2504.21318

Primary use case

Models in the Phi-4-reasoning family are designed for multi-step, logic-intensive mathematical problem solving in memory- or compute-constrained environments and latency-bound scenarios. Use cases include formal proof generation, symbolic computation, advanced word problems, and a wide range of other mathematical reasoning tasks. These models excel at maintaining context across steps, applying structured logic, and delivering accurate, reliable solutions in domains that require deep analytical thinking.
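For multi-step problems it is often useful to separate the model's working from its final answer. The sketch below assumes the response puts its chain of thought inside `<think>...</think>` tags followed by the solution, which is our understanding of the Phi-4-reasoning output format; other variants or chat templates may differ. The `split_reasoning` helper is hypothetical.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think>; the answer follows it.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is '' if no <think> block is found."""
    match = THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = text[match.end():].strip()
    return reasoning, answer

if __name__ == "__main__":
    sample = "<think>2x + 3 = 11, so 2x = 8 and x = 4.</think>\nx = 4"
    reasoning, answer = split_reasoning(sample)
    print(answer)  # x = 4
```

If there is no closing tag (for example, when generation stops early), the whole text is returned as the answer, so callers may want to check for that case separately.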

Performance

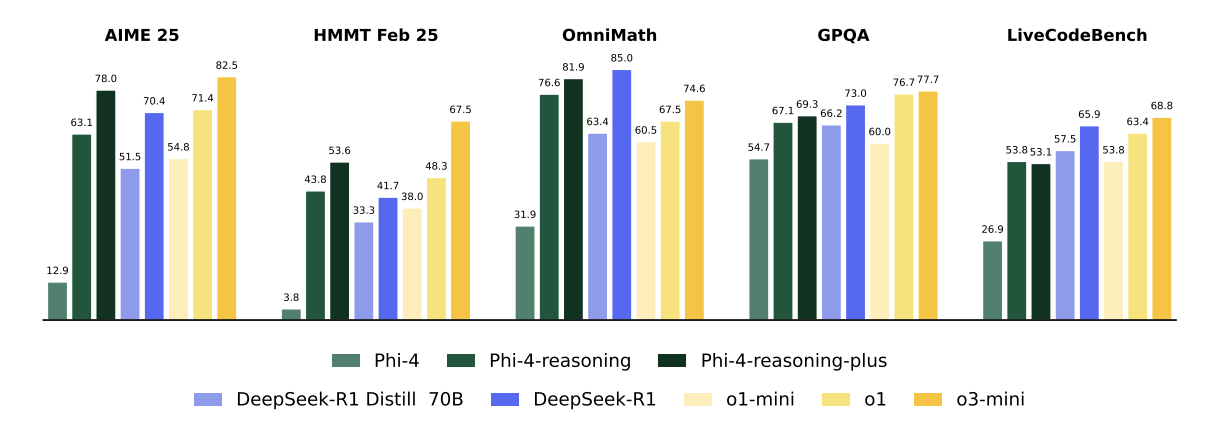

Phi-4-reasoning performance across representative reasoning benchmarks spanning the mathematical (HMMT, AIME 25, OmniMath), scientific (GPQA), and coding (LiveCodeBench 8/24-1/25) domains. Microsoft illustrates the performance gains from reasoning-focused post-training of Phi-4 with Phi-4-reasoning (SFT) and Phi-4-reasoning-plus (SFT+RL), compared against open-weight models from DeepSeek, namely DeepSeek-R1 (a 671B Mixture-of-Experts model) and its distilled dense variant DeepSeek-R1-Distill-Llama-70B, as well as OpenAI's proprietary frontier models o1 and o3-mini. Phi-4-reasoning and Phi-4-reasoning-plus consistently outperform the base model Phi-4 and perform competitively against substantially larger and state-of-the-art models.

License

Phi-4-reasoning models are provided under the MIT license.