Olmo 3

Olmo 3 is a family of Open language models designed to enable the science of language models.

Memory Requirements

To run the smallest Olmo 3, you need at least 6 GB of RAM. The largest one may require up to 19 GB.

Capabilities

Olmo 3 models support tool use and reasoning. They are available in the GGUF and MLX formats.

About Olmo 3

AllenAI introduces Olmo 3, a new family of 7B and 32B models. This suite includes Base, Instruct, and Think variants. The Base models were trained using a staged training approach.

Olmo is a series of Open language models designed to enable the science of language models. The Olmo 3 models are trained on the Dolma 3 dataset. All code, checkpoints, and associated training details are released as open-source artifacts.

AllenAI published a technical report detailing their process of creating and evaluating Olmo 3 models. It is available here.

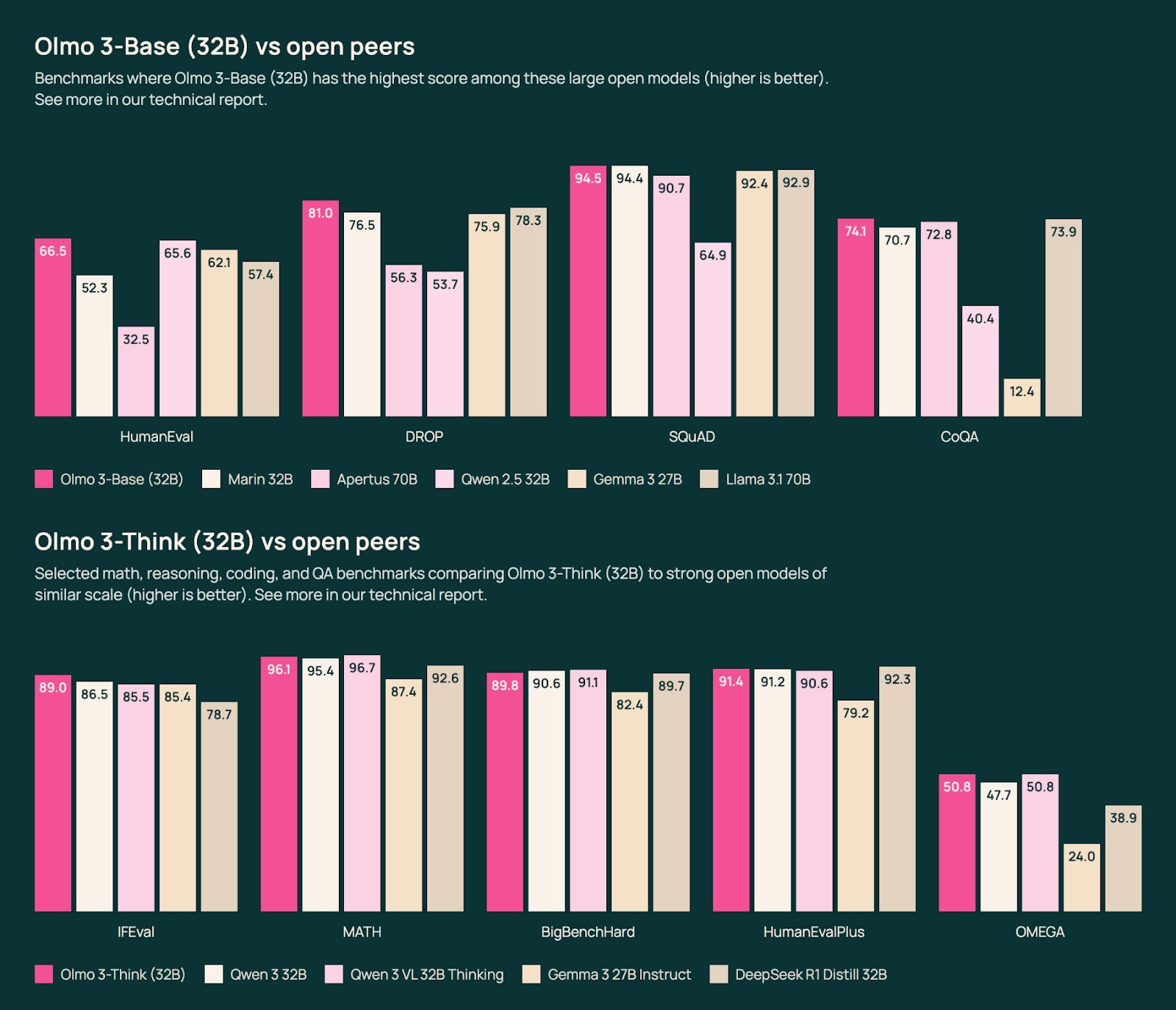

Performance comparison

Olmo 3 Models

| Model | Training Tokens | Layers | Hidden Size | Q Heads | KV Heads | Context Length (tokens) |
|---|---|---|---|---|---|---|
| Olmo 3 7B | 5.93 trillion | 32 | 4096 | 32 | 32 | 65,536 |
| Olmo 3 32B | 5.50 trillion | 64 | 5120 | 40 | 8 | 65,536 |
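
The head counts in the table affect how much memory the attention key/value (KV) cache needs at long context. The sketch below is a rough, illustrative estimate only. It assumes the per-head dimension equals hidden size divided by the number of query heads, that the cache stores 16-bit values, and that every layer caches the full context. A real runtime may use cache quantization or other techniques that lower these numbers. The names `ModelShape` and `kv_cache_bytes` are hypothetical helpers, not part of any Olmo tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    """Architecture figures copied from the table above."""
    name: str
    layers: int
    hidden_size: int
    q_heads: int
    kv_heads: int
    context_length: int

    @property
    def head_dim(self) -> int:
        # Assumption: hidden size is split evenly across the query heads.
        return self.hidden_size // self.q_heads


def kv_cache_bytes(shape: ModelShape, tokens: int, bytes_per_value: int = 2) -> int:
    """Estimate KV cache size for `tokens` cached positions.

    The leading 2 accounts for one key and one value vector per KV head,
    per layer, per token. bytes_per_value=2 assumes a 16-bit cache.
    """
    return 2 * shape.layers * shape.kv_heads * shape.head_dim * bytes_per_value * tokens


OLMO3_7B = ModelShape("Olmo 3 7B", 32, 4096, 32, 32, 65_536)
OLMO3_32B = ModelShape("Olmo 3 32B", 64, 5120, 40, 8, 65_536)

if __name__ == "__main__":
    for shape in (OLMO3_7B, OLMO3_32B):
        gib = kv_cache_bytes(shape, shape.context_length) / 2**30
        print(f"{shape.name}: head_dim={shape.head_dim}, "
              f"full-context KV cache ~ {gib:.0f} GiB")
```

Under these assumptions both models have a head dimension of 128, and the 32B model needs about half the full-context cache of the 7B model (roughly 16 GiB versus 32 GiB) because it has 8 KV heads rather than 32, even though it has twice as many layers.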

License

Olmo 3 models are released under the Apache 2.0 license.