Granite 4.0

Granite 4.0 language models are lightweight, state-of-the-art open models that natively support multilingual capabilities, coding tasks, RAG, tool use, and JSON output.

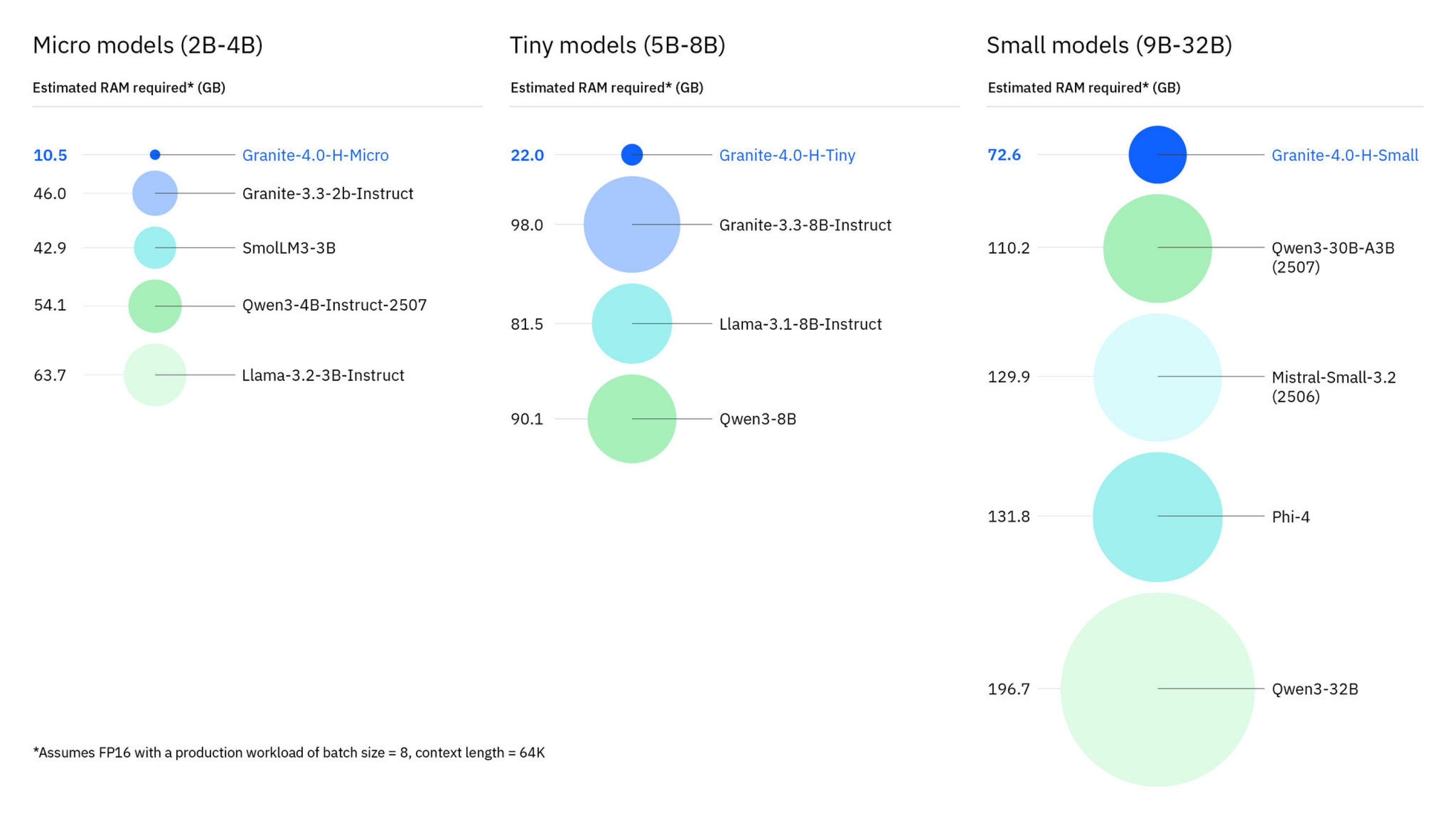

Memory Requirements

Running the smallest Granite 4.0 model requires at least 2 GB of RAM. The largest may require up to 21 GB.

Capabilities

Granite 4.0 models support tool use and are available in GGUF format.
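As a rough illustration of what tool use can look like in practice, the sketch below builds a chat request that advertises one tool and then parses tool calls out of a response. It assumes the model is served behind an OpenAI-compatible chat completions API, such as the one LM Studio's local server exposes. The model identifier `granite-4.0-example`, the `get_weather` tool, and the sample response are hypothetical placeholders, not values taken from this page.

```python
import json

# Hypothetical tool definition in the OpenAI-style "tools" format.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_tool_request(model, user_message, tools):
    """Build the JSON body for a chat completion request that offers tools."""
    return {
        "model": model,  # assumption: use the identifier your server reports
        "messages": [{"role": "user", "content": user_message}],
        "tools": tools,
    }


def extract_tool_calls(response):
    """Return (tool_name, arguments_dict) pairs from a chat completion response."""
    message = response["choices"][0]["message"]
    calls = []
    for call in message.get("tool_calls") or []:
        function = call["function"]
        # Arguments arrive as a JSON-encoded string, so decode them.
        calls.append((function["name"], json.loads(function["arguments"])))
    return calls


# Hand-written sample response (not real model output) showing the expected shape.
SAMPLE_RESPONSE = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": None,
                "tool_calls": [
                    {
                        "id": "call_0",
                        "type": "function",
                        "function": {
                            "name": "get_weather",
                            "arguments": "{\"city\": \"Paris\"}",
                        },
                    }
                ],
            }
        }
    ]
}

if __name__ == "__main__":
    payload = build_tool_request(
        "granite-4.0-example", "What is the weather in Paris?", [WEATHER_TOOL]
    )
    print(json.dumps(payload, indent=2))
    print(extract_tool_calls(SAMPLE_RESPONSE))
```

The payload would be sent as the body of a POST request to the server's chat completions endpoint. The application then runs each requested tool itself and returns the result to the model in a follow-up message.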

About Granite 4.0

Granite 4.0 language models are lightweight, state-of-the-art open foundation models that natively support multilingual capabilities, a wide range of coding tasks—including fill-in-the-middle (FIM) code completion—retrieval-augmented generation (RAG), tool usage, and structured JSON output.

The Granite 4.0 model family

Granite 4.0 models are available in three sizes—micro, tiny, and small—and are built on dense, dense-hybrid, and mixture-of-experts (MoE) hybrid architectures. We release both base models (checkpoints after pretraining) and instruct models (checkpoints fine-tuned for dialogue, instruction following, helpfulness, and safety).

IBM Granite models are developed using a combination of advanced techniques such as structured chat formatting, supervised fine-tuning, reinforcement learning–based model alignment, and model merging. Granite 4.0 features significantly improved instruction-following and tool-calling capabilities, making it well suited to enterprise applications and to deployment in environments with constrained compute resources.

Performance

Estimated RAM required for each Granite 4.0 model, compared with other popular open-source models.

License

All Granite 4.0 language models are distributed under the Apache 2.0 license, allowing free use for both research and commercial purposes.

The data curation and training processes were specifically designed for enterprise scenarios and customization, incorporating governance, risk, and compliance (GRC) evaluations alongside IBM’s standard data clearance and document quality review procedures.

Docs

See IBM's documentation for running Granite models locally with LM Studio: https://www.ibm.com/granite/docs/run/granite-with-lmstudio.