
Ernie-4.5

10.2K Downloads


A medium-sized Mixture-of-Experts model from Baidu's new ERNIE 4.5 line of foundation models.

Models

Updated 2 days ago

12.00 GB

Memory Requirements

To run the smallest Ernie-4.5, you need at least 12 GB of RAM.
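The memory a model needs at rest scales with its total parameter count rather than its activated count. A Mixture-of-Experts model typically has to keep all 21B parameters loaded, even though only about 3B are used for any given token. The sketch below is a back-of-the-envelope estimate. It assumes the weights dominate the footprint and that the 12.00 GB listed under Models is the download size. The function names are illustrative, and this page does not say which quantization scheme the files use.

```python
# Rough, weight-only memory estimate. It ignores the KV cache, activations,
# and runtime overhead, so real RAM needs are higher than these figures.

TOTAL_PARAMS = 21e9  # 21B total parameters, from the model overview


def weight_gb(total_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return total_params * bits_per_weight / 8 / 1e9


def implied_bits_per_weight(file_gb: float, total_params: float) -> float:
    """Average bits per weight implied by a file size, if the file is all weights."""
    return file_gb * 1e9 * 8 / total_params


for bits in (4, 8, 16):
    print(f"{bits:>2}-bit weights: ~{weight_gb(TOTAL_PARAMS, bits):.1f} GB")

print(f"12.00 GB file -> ~{implied_bits_per_weight(12.0, TOTAL_PARAMS):.2f} bits/weight")
```

Under these assumptions, a 12 GB file works out to roughly 4.6 bits per weight on average. That figure is consistent with a mixed, roughly 4-bit quantization, though the page does not name the format. The KV cache and runtime overhead come on top of this.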

Capabilities

Ernie-4.5 models are available in GGUF and MLX formats.

About Ernie-4.5

ERNIE 4.5 is a new family of large-scale models from Baidu.

Ernie 4.5 21B A3B is a medium-sized Mixture-of-Experts model specializing in general-purpose English and Chinese language understanding and generation.

It supports a context length of up to 131,072 tokens.
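The gap between 21B total and 3B activated parameters comes from sparse routing. For each token, a router selects a few routed experts, while the shared experts always run. The plain-Python sketch below shows generic top-k routing using the counts from the model's configuration table: 64 routed text experts, 6 active per token, and 2 shared experts. It is not Baidu's implementation. The softmax-then-renormalize gating, the scalar toy experts, and all function names are assumptions made for illustration. Details such as load balancing are omitted.

```python
import math
import random

NUM_EXPERTS = 64  # routed text experts (total)
TOP_K = 6         # routed experts activated per token
NUM_SHARED = 2    # shared experts, applied to every token


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def route(router_logits, k=TOP_K):
    """Pick the top-k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)
    return {i: probs[i] / total for i in top}


def moe_layer(x, router_logits, routed_experts, shared_experts):
    """Shared experts always contribute; routed experts contribute only if selected."""
    out = sum(expert(x) for expert in shared_experts)
    for idx, gate in route(router_logits).items():
        out += gate * routed_experts[idx](x)
    return out


# Toy usage: scalar "experts" stand in for the real feed-forward networks.
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
routed = [lambda x, i=i: (i + 1) * x for i in range(NUM_EXPERTS)]
shared = [lambda x: x for _ in range(NUM_SHARED)]

gates = route(logits)
print(len(gates), round(sum(gates.values()), 6))  # 6 1.0
print(moe_layer(1.0, logits, routed, shared))
```

Only 6 of the 64 routed experts and the 2 shared experts run for each token. This is why compute per token tracks the activated parameter count, while memory tracks the total.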

Model Overview

ERNIE-4.5-21B-A3B is a post-trained text MoE model with 21B total parameters, of which 3B are activated for each token. Its configuration is as follows:

| Key | Value |
|---|---|
| Modality | Text |
| Training Stage | Post-training |
| Params (Total / Activated) | 21B / 3B |
| Layers | 28 |
| Heads (Q / KV) | 20 / 4 |
| Text Experts (Total / Activated) | 64 / 6 |
| Vision Experts (Total / Activated) | 64 / 6 |
| Shared Experts | 2 |
| Context Length | 131,072 tokens |
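Several entries in the table above determine how much memory long contexts need. With 20 query heads and 4 KV heads, the model uses grouped-query attention: each key/value head is shared by 5 query heads, and only the 4 KV heads are cached. The sketch below estimates the KV cache at the full 131,072-token context. Two values are assumptions, not taken from the table: a head dimension of 128 and 2-byte (FP16/BF16) cache entries. Runtimes that quantize the KV cache will use less.

```python
LAYERS = 28        # from the table
Q_HEADS = 20       # from the table
KV_HEADS = 4       # from the table
CONTEXT = 131_072  # from the table
HEAD_DIM = 128     # ASSUMPTION: head dimension is not listed in the table


def gqa_group_size(q_heads: int, kv_heads: int) -> int:
    """Number of query heads that share each key/value head."""
    assert q_heads % kv_heads == 0
    return q_heads // kv_heads


def kv_cache_bytes(tokens: int, layers: int = LAYERS, kv_heads: int = KV_HEADS,
                   head_dim: int = HEAD_DIM, bytes_per_elem: int = 2) -> int:
    """KV cache size; the leading 2 counts one key and one value tensor per layer."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem * tokens


print(gqa_group_size(Q_HEADS, KV_HEADS))            # 5
print(kv_cache_bytes(1))                            # 57344 bytes per token
print(f"{kv_cache_bytes(CONTEXT) / 2**30:.1f} GiB")  # 7.0 GiB
```

Under these assumptions, a full-length context adds about 7 GiB on top of the weights. If every query head had its own KV head (20 instead of 4), the cache would be five times larger.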

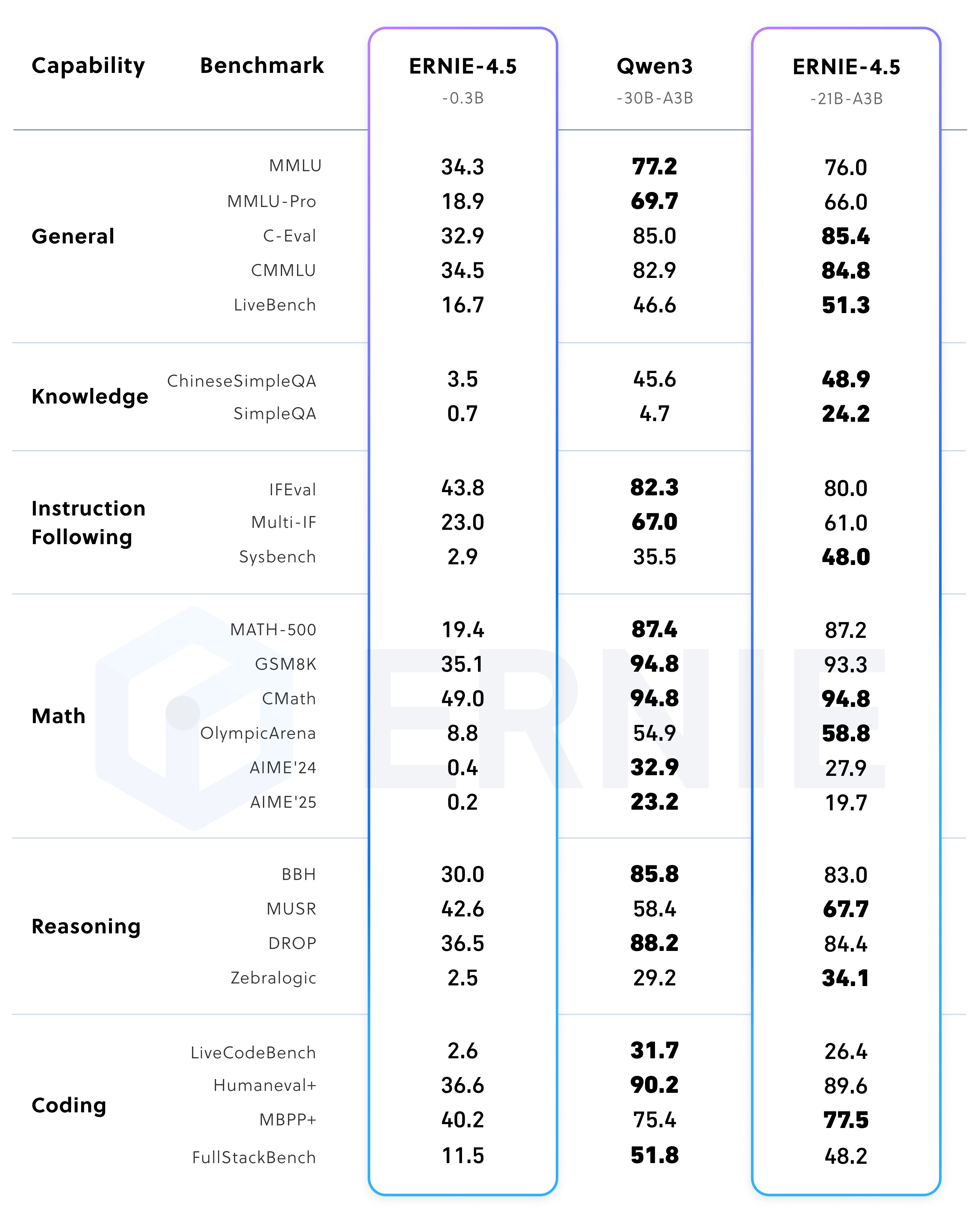

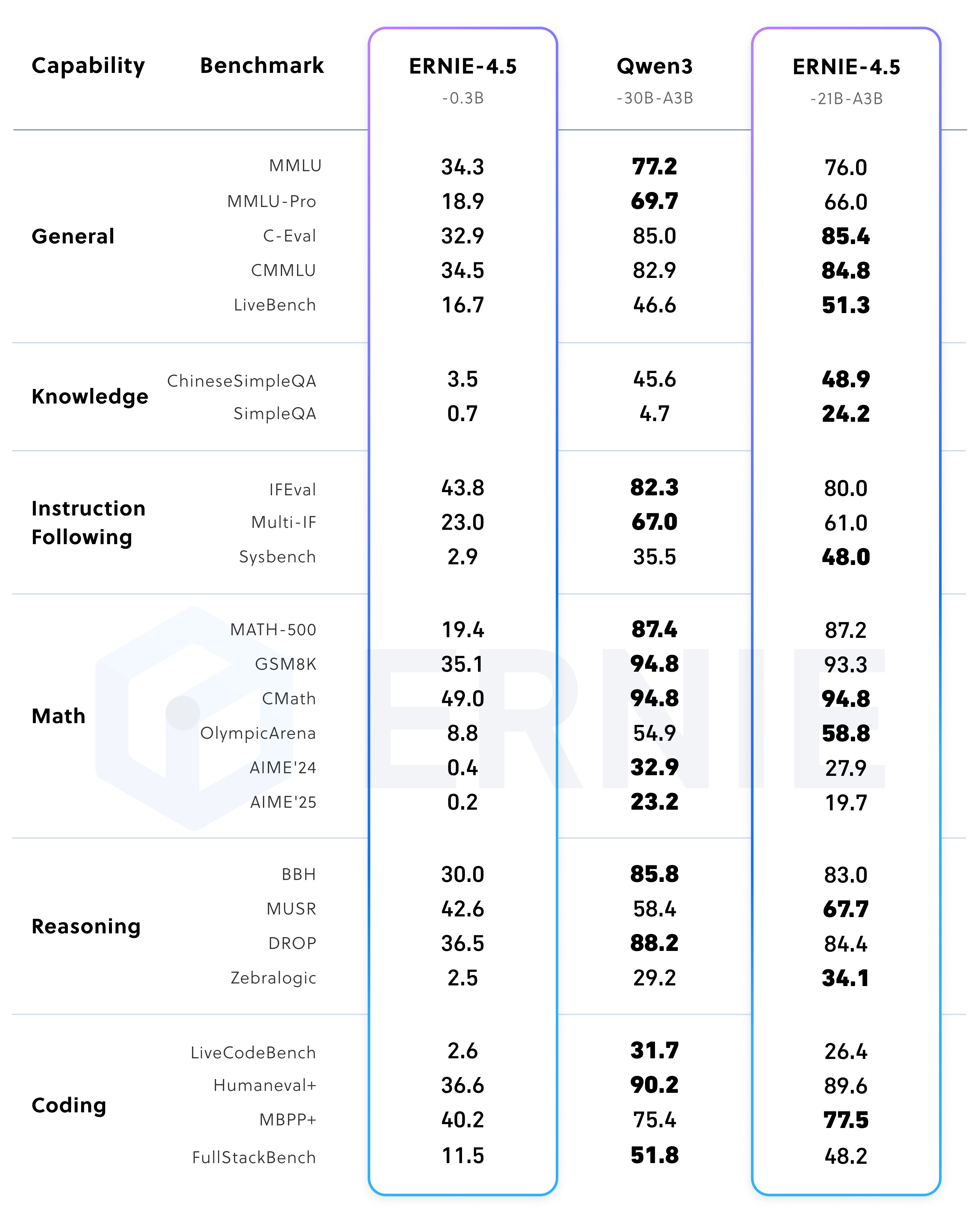

Performance

License

ERNIE 4.5 is Apache 2.0 licensed.