Codestral

Codestral, Mistral AI's latest coding model, handles both instruction following and code completion in over 80 programming languages.

Memory Requirements

To run the smallest Codestral, you need at least 13 GB of RAM.
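As a rough sanity check on this figure, weight memory can be estimated as parameter count times bits per weight. The sketch below is only an approximation under stated assumptions: it takes 22 billion parameters from the model name Codestral-22B, the bit widths are illustrative (4.5 bits per weight is an assumed stand-in for a 4-bit quantization with some per-block overhead), and the function name is hypothetical. It ignores the context cache and runtime overhead, so real usage will be higher.

```python
def estimate_weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = n_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


N_PARAMS = 22e9  # assumption: "22B" taken literally from the model name

# Illustrative precisions; the bit widths are assumptions, not published figures.
for label, bits in [("16-bit", 16.0), ("8-bit", 8.0), ("~4-bit (assumed 4.5)", 4.5)]:
    print(f"{label}: {estimate_weight_memory_gb(N_PARAMS, bits):.1f} GB")
```

Under these assumptions, only a roughly 4-bit quantization brings the weights near the 13 GB mark, which suggests the stated minimum refers to a heavily quantized build.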

Capabilities

Codestral models are available in GGUF format.

About Codestral

Mistral AI introduces Codestral, its first-ever code model. Codestral is an open-weight generative AI model explicitly designed for code generation tasks.

Codestral-22B-v0.1 is trained on a diverse dataset of 80+ programming languages, including the most popular ones, such as Python, Java, C, C++, JavaScript, and Bash (more details in the Blogpost). The model can be queried:

- As instruct, for instance to answer any questions about a code snippet (write documentation, explain, factorize) or to generate code following specific indications.
- As Fill in the Middle (FIM), to predict the middle tokens between a prefix and a suffix (very useful for software development add-ons like in VS Code).
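The difference between the two query modes can be sketched as plain prompt construction. This is a minimal illustration, not Mistral AI's tokenizer: the function names are hypothetical, and the `[INST]`, `[SUFFIX]`, and `[PREFIX]` markers and the suffix-first ordering are assumptions about the prompt layout. In practice such markers are usually special tokens inserted by the tokenizer, so check the model's own documentation before relying on them.

```python
def build_instruct_prompt(instruction: str) -> str:
    """Wrap a natural-language request in (assumed) instruction markers."""
    return f"[INST] {instruction} [/INST]"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Assemble a FIM prompt: the model sees the code after and before the
    gap and is expected to generate only the missing middle.
    Marker strings and suffix-first ordering are assumptions."""
    return f"[SUFFIX]{suffix}[PREFIX]{prefix}"


def splice_completion(prefix: str, middle: str, suffix: str) -> str:
    """Reinsert the generated middle between the original prefix and suffix."""
    return prefix + middle + suffix


# Example: an editor add-on asking for the body of a function.
prefix = "def add(a, b):\n    "
suffix = "\n\nprint(add(1, 2))\n"
fim_prompt = build_fim_prompt(prefix, suffix)

# A hypothetical completion, standing in for real model output.
middle = "return a + b"
print(splice_completion(prefix, middle, suffix))
```

The instruct path sends a single wrapped request, while the FIM path sends both sides of the cursor position and splices the result back in, which is why FIM suits editor integrations.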

Performance

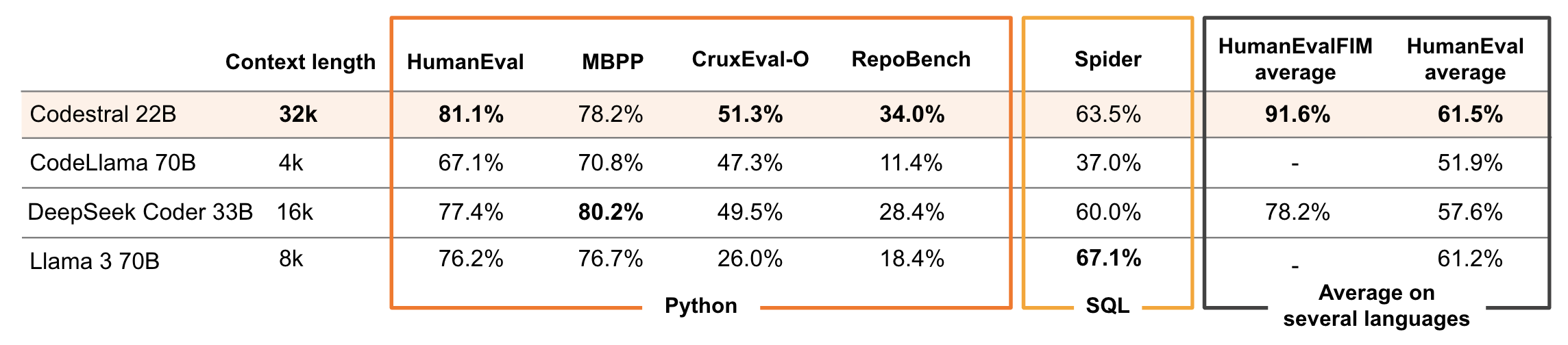

As a 22B model, Codestral sets a new standard in the performance/latency space for code generation compared to previous models used for coding.

License

Codestral is provided under the custom Mistral AI Non-Production License (MNPL-0.1).