Download and run Large Language Models like Qwen, Mistral, Gemma, or gpt-oss in LM Studio.

Double-check that your computer meets the minimum system requirements.

You might sometimes see terms such as open-source models or open-weights models. Different models may be released under different licenses and with varying degrees of "openness". To run a model locally, you need access to its "weights", which are often distributed as one or more files ending in .gguf, .safetensors, etc.
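As a rough illustration of how weight files can be recognized, the Python sketch below maps a file's extension to a format name and, for GGUF, checks the four ASCII bytes `GGUF` that begin every GGUF file. The helper names `weight_format` and `looks_like_gguf` are hypothetical and are not part of LM Studio; the Discover tab handles downloads for you, so this only shows what those files are.

```python
from pathlib import Path
from typing import Optional

# Hypothetical mapping of common weight-file extensions to format names.
WEIGHT_EXTENSIONS = {".gguf": "GGUF", ".safetensors": "safetensors"}


def weight_format(path: str) -> Optional[str]:
    """Return the weights format implied by a file's extension, or None."""
    return WEIGHT_EXTENSIONS.get(Path(path).suffix.lower())


def looks_like_gguf(path: str) -> bool:
    """Return True if the file starts with the ASCII magic bytes b"GGUF"."""
    with open(path, "rb") as f:
        return f.read(4) == b"GGUF"


print(weight_format("model-Q4_K_M.gguf"))                 # GGUF
print(weight_format("model-00001-of-00002.safetensors"))  # safetensors
print(weight_format("README.md"))                         # None
```

An extension is only a hint; reading the magic bytes with `looks_like_gguf` is a stronger check for GGUF files.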

Getting up and running

First, install the latest version of LM Studio. You can get it from here.

Once you're all set up, you need to download your first LLM.

1. Download an LLM to your computer

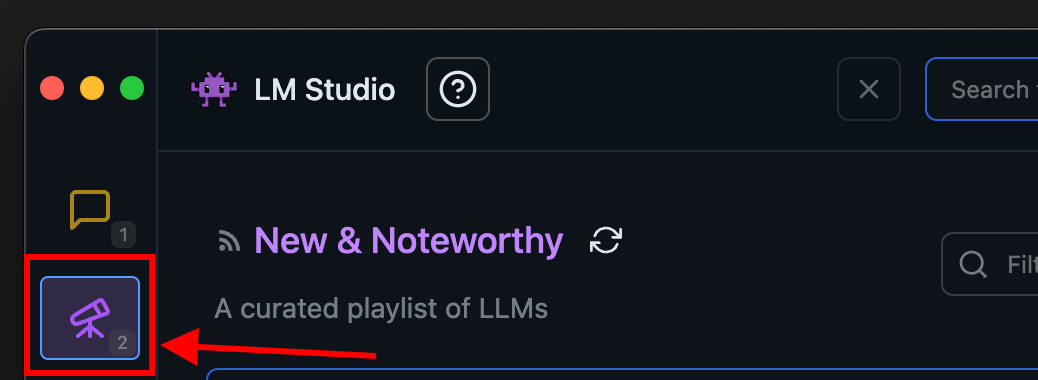

Head over to the Discover tab to download models. Pick one of the curated options or search for models by search query (e.g. "Llama"). See more in-depth information about downloading models here.

The Discover tab in LM Studio

2. Load a model to memory

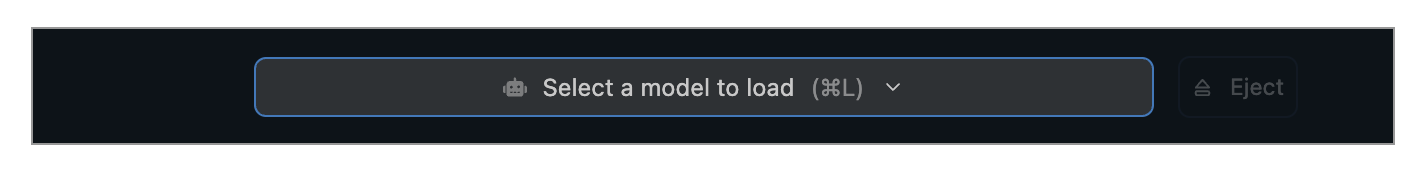

Head over to the Chat tab, then:

- Open the model loader.

- Select one of the models you downloaded (or sideloaded).

- Optionally, choose load configuration parameters.

Quickly open the model loader with cmd + L on macOS or ctrl + L on Windows/Linux.

What does loading a model mean?

Loading a model typically means allocating enough memory in your computer's RAM to hold the model's weights and other parameters.
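A back-of-the-envelope way to see why loading needs so much memory: the weights alone take roughly (number of parameters × bits per weight ÷ 8) bytes. The Python sketch below applies that rule of thumb. `estimate_weights_gb` is a hypothetical helper, and the estimate ignores the extra memory used for the conversation context and by the runtime itself, so actual usage will be higher.

```python
def estimate_weights_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 1e9 bytes).

    Rule of thumb only: excludes context (KV cache) memory and runtime overhead.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# A 7-billion-parameter model stored at 16 bits vs. 4 bits per weight.
print(estimate_weights_gb(7e9, 16))  # 14.0
print(estimate_weights_gb(7e9, 4))   # 3.5
```

This is why lower-bit (quantized) versions of a model are easier to fit into a computer's memory.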

3. Chat!

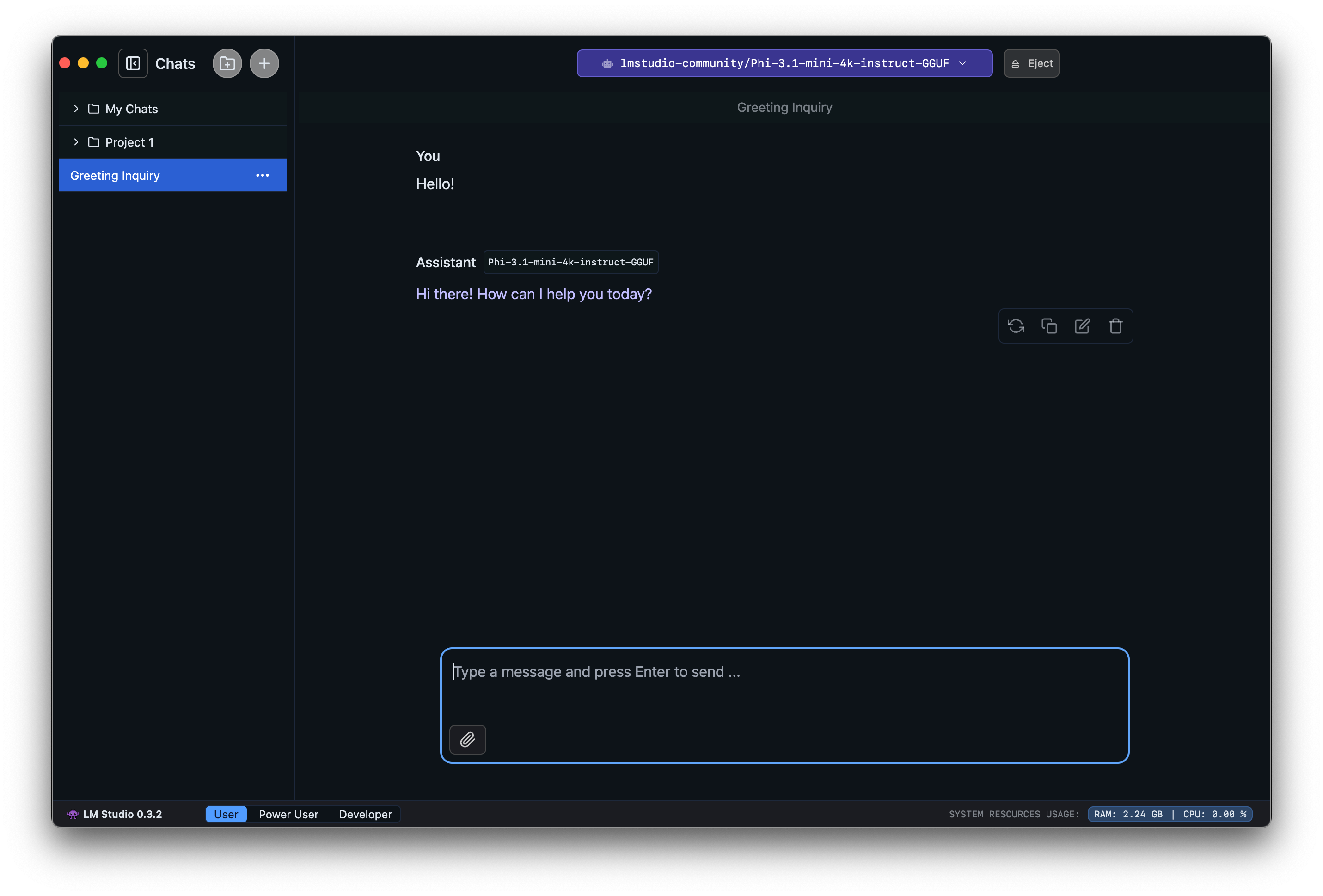

Once the model is loaded, you can start a back-and-forth conversation with the model in the Chat tab.

LM Studio on macOS

Community

Chat with other LM Studio users, discuss LLMs, hardware, and more on the LM Studio Discord server.
