Use OpenAI's Responses API with local models

LM Studio 0.3.29 is now available as a stable release. Update in‑app or download the latest version at lmstudio.ai/download.

New: OpenAI-compatible /v1/responses

This release adds support for OpenAI’s /v1/responses API through the LM Studio REST server.

- Stateful interactions - pass a previous_response_id to continue interactions without needing to manage message history yourself.

- Custom function tool calling - bring your own function tools for the model to call, similar to v1/chat/completions.

- Remote MCP - enable the model to call tools from remote MCP servers; requires explicit opt-in in settings.

- Reasoning support - parse reasoning output, and control effort with reasoning: { effort: "low" | "medium" | "high" } for openai/gpt-oss-20b.

- Streaming or sync - use stream: true to receive SSE events as the model generates, or omit it for a single JSON response.

- More information at https://lmstudio.ai/docs/app/api/endpoints/openai
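As a rough illustration of the tool calling and reasoning options, here is a minimal Python sketch that builds a request body for /v1/responses. It assumes the function tool shape follows OpenAI's Responses API (a flat object with type, name, description, and parameters); the `add` tool and the helper names `function_tool` and `build_body` are hypothetical and exist only for this example.

```python
VALID_EFFORTS = ("low", "medium", "high")  # effort values listed above


def function_tool(name, description, parameters):
    """Describe one callable function; `parameters` is a JSON Schema object.

    Assumption: the flat layout mirrors OpenAI's Responses API.
    """
    return {
        "type": "function",
        "name": name,
        "description": description,
        "parameters": parameters,
    }


def build_body(user_input, tools=(), effort=None, model="openai/gpt-oss-20b"):
    """Build a JSON-serializable body for POST /v1/responses."""
    body = {"model": model, "input": user_input}
    if tools:
        body["tools"] = list(tools)
    if effort is not None:
        if effort not in VALID_EFFORTS:
            raise ValueError(f"effort must be one of {VALID_EFFORTS}")
        body["reasoning"] = {"effort": effort}
    return body


# Hypothetical tool, for illustration only.
add_tool = function_tool(
    "add",
    "Add two integers.",
    {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
)
body = build_body("What is 17 + 25?", tools=[add_tool], effort="low")
```

The resulting `body` can be serialized with `json.dumps` and sent as the request payload. Executing the function when the model asks for it remains the caller's job.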

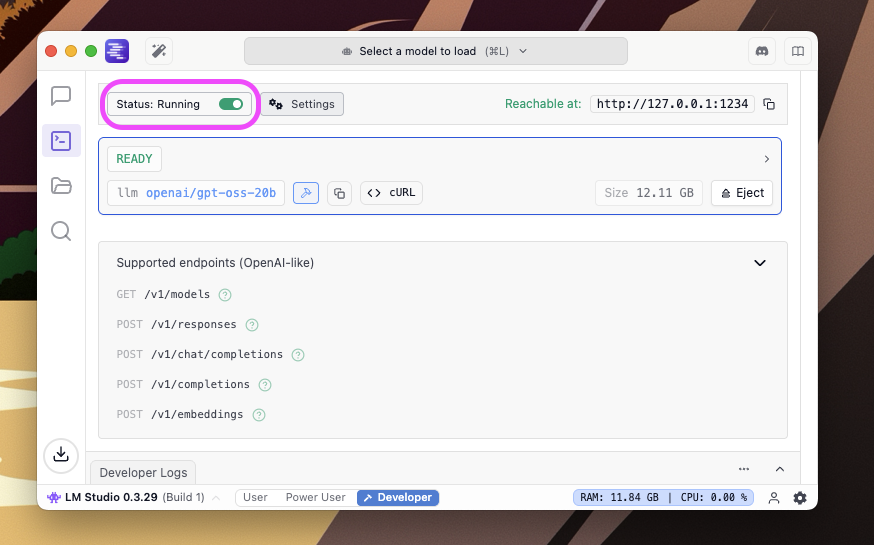

To use REST API server endpoints, ensure your LM Studio server is running in the UI (Developer → Status: Running):

Start the server in the UI

Or through the lms CLI:

    $ lms server start
    Success! Server is now running on port 1234

Stateful Interactions

- Example request:

      curl http://127.0.0.1:1234/v1/responses \
        -H "Content-Type: application/json" \
        -d '{
          "model": "openai/gpt-oss-20b",
          "input": "Provide a prime number less than 50",
          "reasoning": { "effort": "low" }
        }'

- Response (shortened):

      { "id": "resp_123", "output": [{"type":"message", ...}] }

- Continue the interaction by setting previous_response_id to the id above:

      curl http://127.0.0.1:1234/v1/responses \
        -H "Content-Type: application/json" \
        -d '{
          "model": "openai/gpt-oss-20b",
          "input": "Multiply it by 2",
          "previous_response_id": "resp_123"
        }'
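The same two-turn flow can be sketched in Python using only the standard library. This is a minimal sketch, not an official client: the helper names `build_request`, `create_response`, and `ask_follow_up` are made up for this example, and it assumes the server is running on the default port shown above.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:1234/v1"  # port reported by `lms server start`


def build_request(user_input, model="openai/gpt-oss-20b", previous_response_id=None):
    """Build the JSON body for POST /v1/responses."""
    body = {"model": model, "input": user_input}
    if previous_response_id is not None:
        # Link this turn to an earlier response instead of resending history.
        body["previous_response_id"] = previous_response_id
    return body


def create_response(body, base_url=BASE_URL):
    """POST the body to the server and return the decoded JSON reply."""
    req = urllib.request.Request(
        f"{base_url}/responses",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def ask_follow_up(first_input, second_input):
    """Run two linked turns; the second one references the first response's id."""
    first = create_response(build_request(first_input))
    second = create_response(
        build_request(second_input, previous_response_id=first["id"])
    )
    return first, second
```

With the server running, `ask_follow_up("Provide a prime number less than 50", "Multiply it by 2")` would mirror the two curl calls above. Only the id is carried between turns; the message history is never resent.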

Streaming

Example request:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -d '{
        "model": "openai/gpt-oss-20b",
        "input": "Hello",
        "stream": true
      }'

As the model generates output, you’ll receive events such as response.created, response.output_text.delta, and response.completed. See docs for more details on streaming events.
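To show how such a stream might be consumed, here is a small Python sketch that parses SSE lines and stitches the text deltas back together. It assumes each event's data line holds a JSON object with a type field and, for response.output_text.delta, a delta string, as in OpenAI's streaming format; check the docs for the exact event schema. The names `iter_sse_data` and `collect_text` are hypothetical.

```python
import json


def iter_sse_data(lines):
    """Yield the decoded JSON payload of each SSE event from an iterable of text lines."""
    data_parts = []

    def flush():
        text = "\n".join(data_parts)
        data_parts.clear()
        try:
            return json.loads(text)
        except json.JSONDecodeError:
            return None  # skip payloads that are not JSON

    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("data:"):
            data_parts.append(line[len("data:"):].lstrip())
        elif line == "" and data_parts:
            # A blank line terminates one event.
            event = flush()
            if event is not None:
                yield event
    if data_parts:  # stream ended without a trailing blank line
        event = flush()
        if event is not None:
            yield event


def collect_text(events):
    """Concatenate the text carried by response.output_text.delta events."""
    return "".join(
        e.get("delta", "")
        for e in events
        if e.get("type") == "response.output_text.delta"
    )
```

With a running server, the lines could come from iterating over the HTTP response body and decoding each line as UTF-8.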

Remote MCP

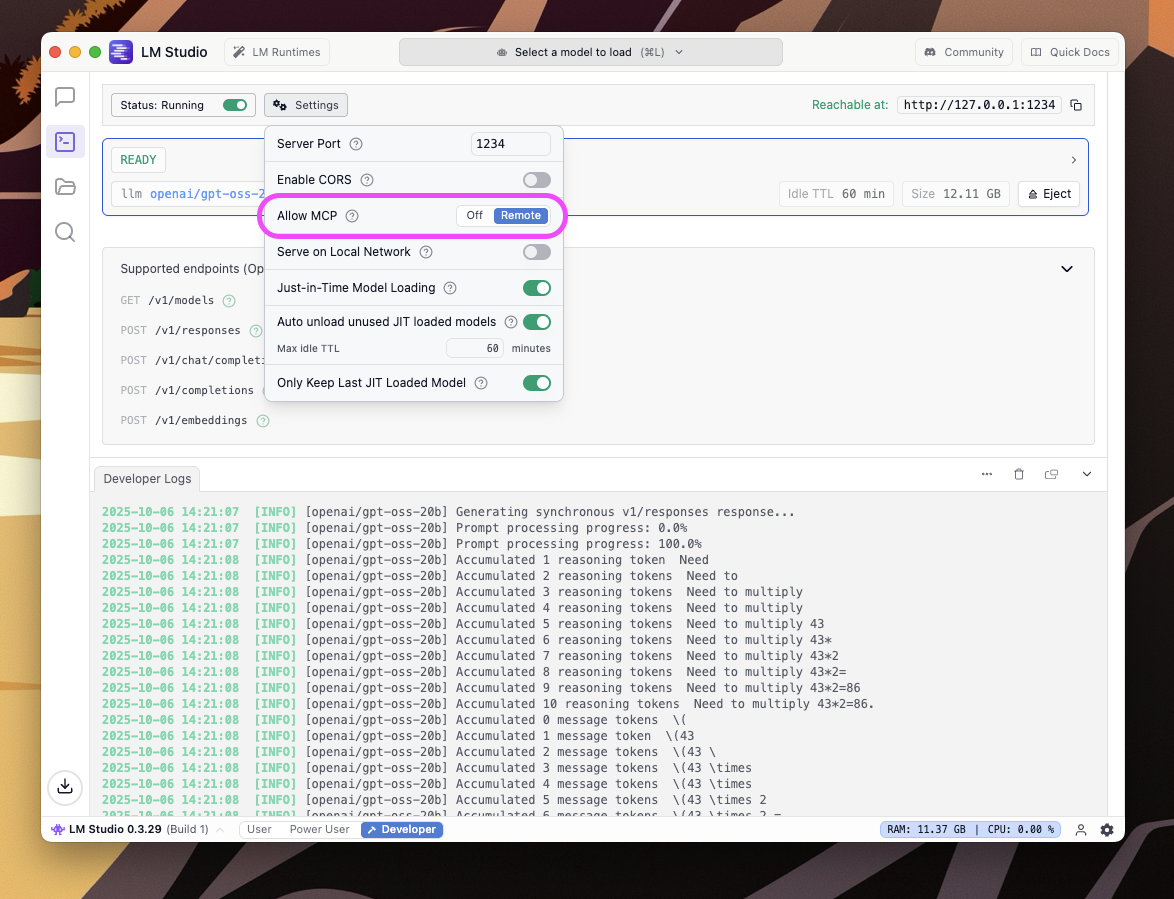

Opt in to allow the use of remote MCP servers (Developer → Settings → Allow MCP → Remote):

Allow remote MCP servers

Example request:

    curl http://127.0.0.1:1234/v1/responses \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -d '{
        "model": "openai/gpt-oss-20b",
        "tools": [{
          "type": "mcp",
          "server_label": "tiktoken",
          "server_url": "https://gitmcp.io/openai/tiktoken",
          "allowed_tools": ["fetch_tiktoken_documentation"]
        }],
        "input": "What is the first sentence of the tiktoken documentation?"
      }'

Output will include a tool discovery and a tool call before the assistant’s reply. See docs for full schema and examples.
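For scripting, the MCP tool entry and a quick look at the output sequence can be sketched in Python. The helpers `mcp_tool` and `output_item_types` are hypothetical names for this example. The sketch assumes only what the request and the shortened response above show: a tool object with type, server_label, server_url, and allowed_tools, and a response whose output is a list of items that each carry a type.

```python
def mcp_tool(server_label, server_url, allowed_tools=None):
    """Build one remote MCP tool entry for the `tools` array of a request."""
    tool = {"type": "mcp", "server_label": server_label, "server_url": server_url}
    if allowed_tools:
        # Restrict the model to the named tools on that server.
        tool["allowed_tools"] = list(allowed_tools)
    return tool


def output_item_types(response):
    """Return the type of each item in the response's output list, in order."""
    return [item.get("type") for item in response.get("output", [])]


tool = mcp_tool(
    "tiktoken",
    "https://gitmcp.io/openai/tiktoken",
    ["fetch_tiktoken_documentation"],
)
```

Calling `output_item_types` on a decoded response is a simple way to confirm that the tool discovery and tool call items appear before the assistant's message.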

CLI: list all model variants

Quickly inspect every available variant for multi‑variant models:

lms ls --variants

Example output (variants only):

    google/gemma-3-12b (2 variants)
     * google/gemma-3-12b@q3_k_l    12B    gemma3    7.33 GB
       google/gemma-3-12b@4bit      12B    gemma3    8.07 GB

0.3.29 - Release Notes

Build 1

- New OpenAI compatibility endpoint: /v1/responses

  - Create stateful interactions by passing the id of a previous response as input to the next; no need to manage message history yourself

  - Custom tool calling support

  - Supports reasoning parsing, setting reasoning effort ("low"|"medium"|"high") for models like gpt-oss

  - Synchronous and streaming (stream=true)

  - See https://lmstudio.ai/docs/app/api/endpoints/openai for more details

- New lms ls command option: lms ls --variants to list all variants for multi-variant models

Resources

- We are hiring! Check out our careers page for open roles.

- Download LM Studio: lmstudio.ai/download

- Report bugs: lmstudio-bug-tracker

- X / Twitter: @lmstudio

- Discord: LM Studio Community