Introducing lmstudio-python and lmstudio-js

LM Studio SDK is here!

Today we are launching lmstudio-python (1.0.1) and lmstudio-js (1.0.0), LM Studio's software development kits for Python and TypeScript. Both libraries are MIT licensed and are developed in the open on GitHub.

Furthermore, we introduce LM Studio's first agent-oriented API: the .act() call. You give it a prompt and tools, and the model runs autonomously for multiple execution "rounds" until it accomplishes the task (or gives up).

Use LM Studio's capabilities from your own code

The SDK lets you tap into the same AI system capabilities we've built for the LM Studio desktop app. Opening up these APIs was always our plan, and we've architected the software stack with this in mind: LM Studio uses the same public lmstudio-js APIs for its core functions. Your apps can now do this too.

Build your own local AI tools

Our goal is to enable you to build your own tools, while (hopefully) saving you from having to solve the problems we already solved as part of developing LM Studio. This includes automatic software dependency management (CUDA, Vulkan), multi-GPU support (NVIDIA, AMD, Apple), multi-operating-system support (Windows, macOS, Linux), default LLM parameter selection, and much more.

Apps using lmstudio-python or lmstudio-js will be able to run on any computer that has LM Studio running (either in the foreground or in headless mode).

Your first app

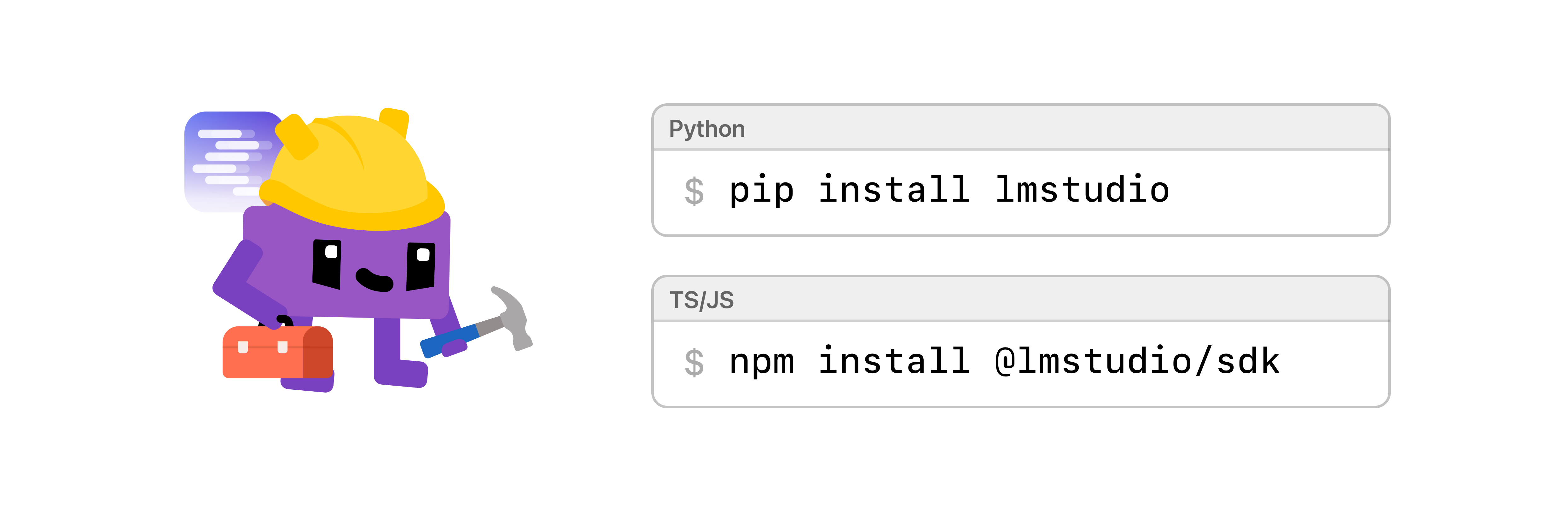

The SDK is available for both Python and TypeScript. You can install it via pip or npm:

pip install lmstudio

npm install @lmstudio/sdk

In Python, use the lmstudio package in your scripts, tools, or even the Python REPL.

- See the lmstudio-python developer docs.

In TypeScript, import the @lmstudio/sdk package in your Node.js or browser apps.

- See the lmstudio-js developer docs.

Core APIs: Chat, Text Completions, Embeddings, and Agentic Tool Use

The core APIs surfaced through the LM Studio SDK are:

- Chat with LLMs (.respond())
- Agentic Tool Use (.act())
- Structured Output (Pydantic, zod, JSON schema)
- Image Input
- Speculative Decoding (for MLX and llama.cpp)
- Text Completions (.complete())
- Embeddings (.embed())
- Low Level Configuration (GPU, context length, and more)
- Model Management in Memory (.load(), .unload())

In TypeScript, SDK APIs work both in Node environments and in the browser (with CORS enabled). In Python, we support both a convenience sync API and a session-based async API for scoped resource management.

For a full list of APIs, see the API References for Python and TypeScript.

APIs with sane defaults, but also fully configurable

Apps, tools, and scripts using lmstudio-python or lmstudio-js will be able to run the latest llama.cpp or MLX models without having to configure hardware or software. The system automatically chooses the right inference engine for a given model, and picks parameters (such as GPU offload) based on the available resources.

For example, if you just want any model for a quick prompt, run this:

import lmstudio as lms

model = lms.llm()  # gets the current model if loaded

But if you have more specific needs, you can load a new instance of a model and configure every parameter manually. See more Python docs | TypeScript docs.

Enforce Output Format with Pydantic, zod, or JSON schema

Back in the old days of LLMs (circa mid 2023), the best way to ensure a specific output format from a model involved prompting with pleas such as

USER: "Please respond with valid JSON and nothing else. PLEASE do not output ANYTHING after the last } bracket".

Nowadays, much better methods exist, for example grammar-constrained sampling [1] [2], which LM Studio supports for both llama.cpp and MLX models.

The SDK surfaces APIs to enforce the model's output format using Pydantic (for Python), or zod (for TypeScript). In both libraries, you can also use a JSON schema.

Python - use Pydantic

from pydantic import BaseModel

# A class based schema for a book
class BookSchema(BaseModel):
    title: str
    author: str
    year: int

result = model.respond("Tell me about The Hobbit",
                       response_format=BookSchema)

book = result.parsed
print(book)
#       ^
# Note that `book` follows the schema:
# title (str), author (str), year (int)

Read more in the Python docs.
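The same book format can also be written as a plain JSON schema. The sketch below is illustrative only: the schema dictionary mirrors BookSchema, and `matches_schema` is a hypothetical, deliberately minimal checker (flat objects with string and integer fields only) that shows what "conforming to the schema" means. It is not part of the SDK; see the Python docs for how to pass a JSON schema to .respond().

```python
import json

# JSON schema equivalent of the BookSchema class above.
book_json_schema = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "author": {"type": "string"},
        "year": {"type": "integer"},
    },
    "required": ["title", "author", "year"],
}

# Maps JSON schema type names to Python types (only those used here).
_PYTHON_TYPES = {"string": str, "integer": int}


def matches_schema(text: str, schema: dict) -> bool:
    """Hypothetical helper: check a JSON string against a flat object schema."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    for key in schema["required"]:
        if key not in data:
            return False
    for key, value in data.items():
        spec = schema["properties"].get(key)
        if spec is None:
            continue  # extra keys are tolerated in this sketch
        expected = _PYTHON_TYPES[spec["type"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            return False
    return True


# A reply that follows the schema, and one in the "old days" style.
good_reply = '{"title": "The Hobbit", "author": "J. R. R. Tolkien", "year": 1937}'
bad_reply = 'Sure! Here is the JSON: {"title": "The Hobbit"}'

print(matches_schema(good_reply, book_json_schema))
print(matches_schema(bad_reply, book_json_schema))
```

With constrained sampling the model cannot produce the second kind of reply in the first place, so a check like this becomes a safety net rather than a necessity.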

TypeScript - use zod

import { z } from "zod";

// A zod schema for a book
const bookSchema = z.object({
  title: z.string(),
  author: z.string(),
  year: z.number().int(),
});

const result = await model.respond("Tell me about The Hobbit.",
  { structured: bookSchema }
);

const book = result.parsed;
console.info(book);
//             ^
// Note that `book` is correctly typed as
// { title: string, author: string, year: number }

Read more in the TypeScript docs.

The .act() API: running tools in a loop

In addition to "standard" LLM APIs like .respond(), the SDK introduces a new API: .act(). This API is designed for agent-oriented programming, where a model is given a task and a set of tools, and it then goes on its own in an attempt to accomplish the task.

What does it mean for an LLM to "use a tool"?

LLMs are largely text-in, text-out programs. So, you may ask "how can an LLM use a tool?". The answer is that some LLMs are trained to ask the human to call the tool for them, and expect the tool output to be provided back in some format.

Imagine you're giving computer support to someone over the phone. You might say things like "run this command for me ... OK what did it output? ... OK now click there and tell me what it says ...". In this case you're the LLM! And you're "calling tools" vicariously through the person on the other side of the line.
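To make this concrete, here is a toy sketch of a single tool call seen from the host program's side. The JSON request format, the `TOOLS` registry, and `handle_model_output` are assumptions made up for illustration; real models and the SDK use their own formats.

```python
import json


def get_time_zone(city: str) -> str:
    """A stand-in tool: look up a time zone in a tiny hard-coded table."""
    return {"Paris": "Europe/Paris", "Tokyo": "Asia/Tokyo"}.get(city, "unknown")


# Hypothetical registry mapping tool names to Python callables.
TOOLS = {"get_time_zone": get_time_zone}


def handle_model_output(text: str) -> str:
    """Run the tool the model asked for and return its output as text.

    Assumes the model requests a tool by emitting JSON of the form
    {"tool": <name>, "arguments": {...}}; anything else is treated as
    a plain answer and returned unchanged.
    """
    try:
        request = json.loads(text)
    except json.JSONDecodeError:
        return text
    if not isinstance(request, dict) or request.get("tool") not in TOOLS:
        return text
    tool = TOOLS[request["tool"]]
    return str(tool(**request.get("arguments", {})))


# The model only produced text; the host program made the actual call.
model_output = '{"tool": "get_time_zone", "arguments": {"city": "Tokyo"}}'
print(handle_model_output(model_output))
```

The model never executes anything itself. It writes a request, and the surrounding program plays the role of the person on the other end of the phone.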

Running tool calls in "rounds"

We introduce the concept of execution "rounds" to describe the combined process of running a tool, providing its output to the LLM, and then waiting for the LLM to decide what to do next.

Execution Round

 • run a tool →
 ↑   • provide the result to the LLM →
 │       • wait for the LLM to generate a response
 │
 └────────────────────────────────────────┘ └➔ (return)

A model might choose to run tools multiple times before returning a final result. For example, if the LLM is writing code, it might choose to compile or run the program, fix errors, and then run it again, repeating until it gets the desired result.

With this in mind, we say that the .act() API is an automatic "multi-round" tool calling API.
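The loop behind such a multi-round API can be sketched in a few lines. This is a simplified illustration, not the SDK's implementation: `scripted_model` is a fake model that replays canned replies, and the message dictionaries, `run_rounds`, and `max_rounds` are hypothetical names chosen for the example.

```python
from typing import Callable, Optional


def multiply(a: float, b: float) -> float:
    """Return the product of a and b."""
    return a * b


def scripted_model(history: list) -> dict:
    """Fake model: request one tool call, then answer using its result."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"role": "assistant", "tool": "multiply",
                "arguments": {"a": 12345, "b": 54321}}
    return {"role": "assistant",
            "content": f"The result is {tool_results[-1]['content']}."}


def run_rounds(model: Callable[[list], dict], prompt: str,
               tools: list, on_message: Callable[[dict], None],
               max_rounds: int = 5) -> Optional[str]:
    """Loop until the model gives a final answer; None means it gave up."""
    tool_table = {tool.__name__: tool for tool in tools}
    history = [{"role": "user", "content": prompt}]
    for _ in range(max_rounds):
        reply = model(history)            # wait for the model to respond
        history.append(reply)
        on_message(reply)
        if "tool" not in reply:           # final answer: stop looping
            return reply["content"]
        output = tool_table[reply["tool"]](**reply["arguments"])  # run the tool
        tool_message = {"role": "tool", "content": str(output)}
        history.append(tool_message)      # provide the result to the model
        on_message(tool_message)
    return None                           # round budget exhausted


messages: list = []
answer = run_rounds(scripted_model, "What is 12345 multiplied by 54321?",
                    [multiply], on_message=messages.append)
print(answer)
```

Capping the number of rounds is one simple way to handle the "gives up" case; a real agent loop would likely also need to deal with malformed tool requests and tool errors.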

In TypeScript, you define tools with a description and a function. In Python, you may even pass in a function directly!

import lmstudio as lms

def multiply(a: float, b: float) -> float:
    """Given two numbers a and b. Returns the product of them."""
    return a * b

model = lms.llm("qwen2.5-7b-instruct")

model.act(
    "What is the result of 12345 multiplied by 54321?",
    [multiply],
    on_message=print,
)
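Passing a plain function can work because a function already carries what a tool definition needs: a name, a docstring, and type hints. The sketch below shows one way such a definition could be derived using only the standard library; `describe_tool` and the dictionary layout are hypothetical, and the SDK's actual conversion may differ.

```python
import inspect
from typing import Callable, get_type_hints


def multiply(a: float, b: float) -> float:
    """Given two numbers a and b. Returns the product of them."""
    return a * b


def describe_tool(func: Callable) -> dict:
    """Hypothetical helper: build a tool description from a function."""
    hints = get_type_hints(func)
    # Keep only the annotated parameters, recording each type by name.
    parameters = {
        name: hints[name].__name__
        for name in inspect.signature(func).parameters
        if name in hints
    }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
    }


print(describe_tool(multiply))
```

This is also why a clear docstring and accurate type hints matter: in a scheme like this, they are the only description of the tool the model ever sees.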

When the LLM chooses to use a tool, the SDK runs it automatically (in the client process) and provides the result back to the LLM. The model might then choose to run tools again, for example if a tool reported an error that gave the LLM information about how to proceed.

As the execution progresses, the SDK provides a stream of events to the developer via callbacks. This allows you to build interactive UIs that show the user what the LLM is doing, which tool it is using, and how it's progressing.

To get started with the .act() API, see .act() (Python) and .act() (TypeScript).

Open Source: Contributing to SDK Development

Both libraries are MIT licensed and contain up-to-date contribution guides. If you want to get involved with open source development, you're welcome to jump in!

- See lmstudio-python on GitHub
- See lmstudio-js on GitHub

Additional LM Studio open source software includes:

- lms: LM Studio's CLI (MIT) on GitHub
- mlx-engine: LM Studio's Apple MLX engine (MIT) on GitHub

We'd love to hear your feedback!

As you start using the SDK, we'd love to hear your feedback. What's working well? What's not? What features would you like to see next? And of course, let us know if you run into bugs.

Please open issues on the respective GitHub repositories. We'd appreciate a star too 🙏⭐️.