NVIDIA DGX Spark

LM Studio is now supported on NVIDIA's DGX Spark! We're excited to be a launch partner for this new device. This also means that starting now, LM Studio ships for Linux on ARM (aarch64).

The Spark is a tiny but mighty Linux ARM box with 128 GB of unified RAM and 273 GB/s of memory bandwidth. The form factor is quite small (similar to a Mac Mini), and it's styled like a miniature version of the bigger DGX systems.

We brought up a new variant of LM Studio's llama.cpp engine with support for CUDA 13.

Use NVIDIA DGX Spark as your own private LLM server

On the Spark, you can run the LM Studio GUI and use the Chat UI as you would on any other computer.

You can also use LM Studio's API server to make the Spark your own private LLM server and connect to it over the network.

Install LM Studio on the Spark

First, grab the LM Studio Linux ARM64 AppImage from the download page and install it on your Spark.

Download a model

Next, download a model like gpt-oss or Qwen3 Coder.

lms get openai/gpt-oss-20b

Start the LLM server

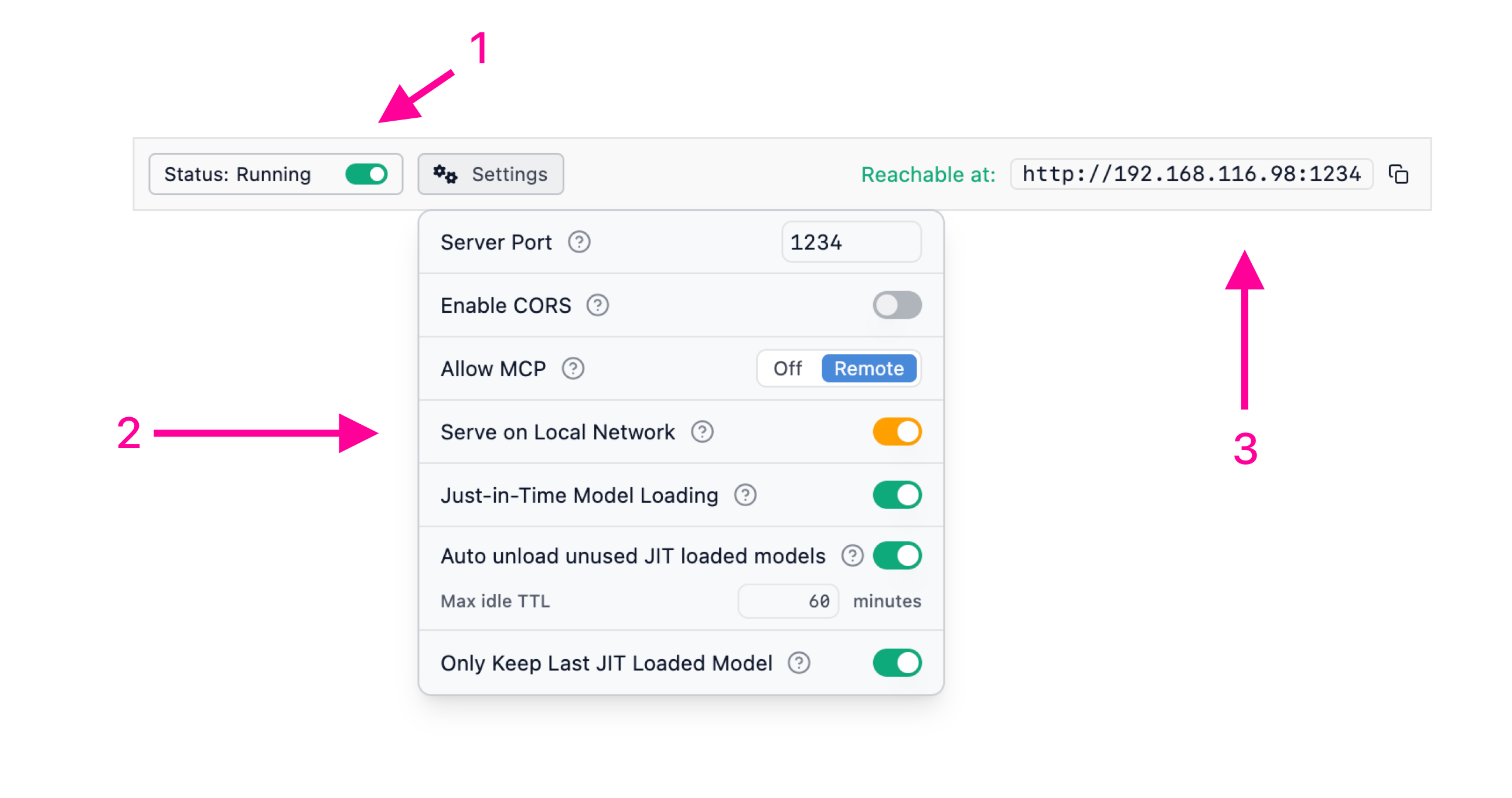

In the LM Studio GUI, head to the Developer tab and in the server settings, turn on Serve on Local Network. This will allow other devices on your network to connect to the Spark's LLM server.

Turn on Serve on Local Network in the Developer tab

In (3) above, you can see which IP address and port the server is running on. By default, it's port 1234.

P.S. You can also start the server from the command line:

lms server start # --port 1234

Connect to the Spark from another device

As long as your network doesn't block devices from talking to each other, you can connect to the Spark's LLM server from another device on the same subnet.

Give it a try by sending a request to list the available models:

curl http://<SPARK_IP_ADDRESS>:1234/v1/models

If you can reach the device, you're good to go!
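If you'd rather run the same check from code, here is a minimal Python sketch using only the standard library. It assumes the server is on the default port 1234 and that the response follows the OpenAI-style shape `{"data": [{"id": ...}]}`. The helper names (`models_url`, `parse_model_ids`, `list_model_ids`) are made up for this example and are not part of LM Studio.

```python
import json
import urllib.request

DEFAULT_PORT = 1234  # LM Studio's default server port, as noted above


def models_url(host: str, port: int = DEFAULT_PORT) -> str:
    """Build the URL of the model-listing endpoint on the Spark."""
    return f"http://{host}:{port}/v1/models"


def parse_model_ids(payload: dict) -> list[str]:
    """Pull model ids out of the response.

    Assumption: the payload uses the OpenAI-style {"data": [{"id": ...}]} shape.
    """
    return [entry["id"] for entry in payload.get("data", [])]


def list_model_ids(host: str, port: int = DEFAULT_PORT, timeout: float = 5.0) -> list[str]:
    """Fetch the model ids from the Spark (this performs a network call)."""
    with urllib.request.urlopen(models_url(host, port), timeout=timeout) as response:
        return parse_model_ids(json.load(response))
```

Calling `list_model_ids("<SPARK_IP_ADDRESS>")` with your Spark's address should return the identifiers of the models you've downloaded. If it raises a `URLError`, the device likely isn't reachable from where you're running the script.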

Use the Spark's LLM server in your apps

Use LM Studio's SDKs (lmstudio-js, lmstudio-python) or its OpenAI-compatible API to connect to the Spark's LLM server from your apps, just like you would with a hosted LLM service.
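As a rough illustration of the OpenAI-compatible route, the sketch below sends a single chat message to the Spark using only Python's standard library. It assumes the standard OpenAI chat completions path (`/v1/chat/completions`) and response shape, the default port 1234, and that the model from the download step is available. The function names are hypothetical helpers for this example, not SDK calls.

```python
import json
import urllib.request


def build_chat_request(host: str, model: str, prompt: str, port: int = 1234) -> urllib.request.Request:
    """Prepare a POST to the OpenAI-compatible chat completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"http://{host}:{port}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(payload: dict) -> str:
    """Return the assistant's text, assuming the OpenAI-style response shape."""
    return payload["choices"][0]["message"]["content"]


def ask_spark(host: str, model: str, prompt: str, port: int = 1234, timeout: float = 120.0) -> str:
    """Send one prompt to the Spark and return the reply (performs a network call)."""
    request = build_chat_request(host, model, prompt, port)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

For example, `ask_spark("<SPARK_IP_ADDRESS>", "openai/gpt-oss-20b", "Say hello")` should return the model's reply as a string. The generous timeout is a guess to leave room for model loading on the first request. Existing OpenAI client libraries can usually be pointed at the same server by changing their base URL.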

More Resources

- We are hiring! Check out our careers page for open roles.

- Download LM Studio: lmstudio.ai/download

- Report bugs: lmstudio-bug-tracker

- X / Twitter: @lmstudio

- Discord: LM Studio Community