DeepSeek R1: open source reasoning model

Last week, Chinese AI company DeepSeek released its highly anticipated open-source reasoning models, dubbed DeepSeek R1. DeepSeek R1 models, both distilled* and full size, are available for running locally in LM Studio on Mac, Windows, and Linux.

* read below about distilled models and how they're made

DeepSeek R1 distilled into Qwen 7B (MLX, 4-bit) solving an algebra question, 100% offline on an M1 Mac.

DeepSeek R1 models, distilled and full size

If you've gone online in the last week or so, there's little chance you missed the news about DeepSeek.

DeepSeek R1 models represent significant and exciting milestone for openly available models: you can now run "reasoning" models, similar in style to OpenAI's o1 models, on your local system. All you need is enough RAM.

The release from DeepSeek included:

- DeepSeek-R1 - the flagship 671B parameter reasoning model

- DeepSeek-R1 distilled models: A collection of smaller pre-existing models fine-tuned using DeepSeek-R1 generations (1.5B, 7B, 8B, 14B, 32B, 70B parameters). An example is DeepSeek-R1-Distill-Qwen-7B.

- DeepSeek-R1-Zero - An R1 prototype fine-tuned using only unsupervised reinforcement learning (RL)

Can I run DeepSeek R1 models locally?

Yes, if you have enough RAM.

Here's how to do it:

- Download LM Studio for your operating system from here.

- Click the 🔎 icon on the sidebar and search for "DeepSeek"

- Pick an option that will fit on your system. For example, if you have 16GB of RAM, you can run the 7B or 8B parameter distilled models. If you have ~192GB+ of RAM, you can run the full 671B parameter model.

- Load the model in the chat, and start asking questions!

Reasoning models, what are they?

Reasoning models were trained to "think" before providing a final answer. This is done using a technique called "Chain-of-thought" (CoT). CoT is a technique that encourages models to break down complex problems into smaller, more manageable steps. This allows the model to arrive at a final answer through a series of intermediate steps, rather than attempting to solve the problem in one go. DeepSeek's CoT is contained in <think>...</think> tokens.

When asked a non-trivial question, DeepSeek models will start their response with a <think> token. The content afterwards will often look like a long stream of consciousness, where the model is working through the problem step by step. After the closing </think> token, the model start generating regular content, which is the final answer to the question. The content after the </think> token is directly influenced by the content in the <think> section.

Below is output from DeepSeek-R1-Distill-Qwen-7B that demonstrates its ability to "think" to holistically answer the question "Are tomatoes fruits?" The thinking section is wrapped in <think>...</think> tags.

User's question:

Are tomatoes fruits?

Model's response:

Click to expand/collapse

DeepSeek-R1-Distill-Qwen-7B: <think> Okay, so I'm trying to figure out whether tomatoes are fruits or vegetables. I know that in many places like Italy, they're definitely considered fruits because you can eat them raw and they have a sweet taste. But here in the US, it's usually said they're vegetables. Hmm, why is there this difference? I remember learning that fruits come from flowers that aren't pollinated, right? Like roses where bees don't help them reproduce. Tomatoes grow on vines and are typically picked when ripe. They have seeds inside which you eat. On the other hand, vegetables usually come from plants that do get pollinated, like carrots or tomatoes when they're on a plant with flowers. Wait, but tomatoes can be both eaten raw and cooked, just like many fruits. So maybe it's more about how they're grown rather than their natural state. I think the confusion comes from different regions having different classifications. In Italy, they're definitely fruits because they're used as such in dishes without needing to be cooked down or anything. I guess in the US, tomatoes are considered vegetables because they come from plants that do produce flowers and require pollination by insects like bees. So even though you can eat them raw, their classification as a vegetable is based on their origin and how they're typically used in cooking here. But then again, I've seen them used both ways—like people making sauces or just eating them as snacks. But maybe in the culinary sense, they're more often used as vegetables because of their typical preparation methods like slicing or chopping for soups or salads. So it's probably a matter of regional definitions and how they are primarily consumed here versus where they might be considered fruits naturally. </think> Tomatoes can be both fruits and vegetables depending on the context: Fruit Perspective: In many countries, including Italy, tomatoes are classified as fruits because they grow on vines without needing pollination and can be eaten raw with a sweet taste. Vegetable Perspective (US Context): In the United States, tomatoes are considered vegetables due to their origin from plants that do produce flowers and require pollination by insects like bees. They are often used in cooking methods such as slicing or chopping for soups and salads, which aligns more with vegetable preparation. Thus, the classification of tomatoes can vary based on regional definitions and usage.

Performance

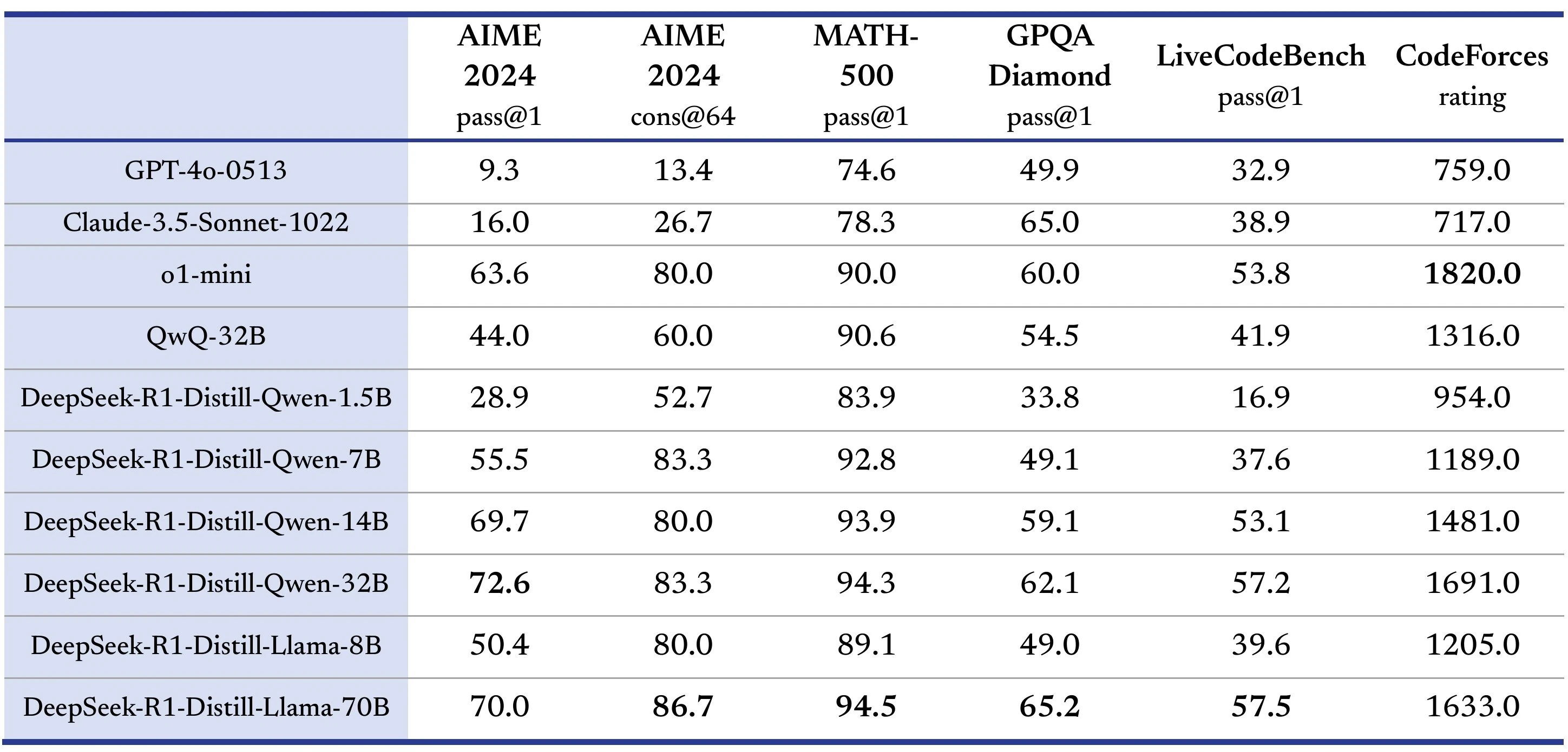

According to several popular reasoning benchmarks like AIME 2024, MATH-500, and CodeForces, the open-source flagship 671B parameter DeepSeek-R1 model performs comparably to OpenAI's full-sized o1 reasoning model. The smaller DeepSeek-R1 "distilled" models perform comparably to OpenAI's o1-mini reasoning models.

Source: @deepseek_ai on X

Distillation

"Distilling" DeepSeek-R1 means: Taking smaller "dense models" like Llama3 and Qwen2.5, and fine-tuning them using artifacts generated by a larger model, with the intention to instill in them capabilities that resemble the larger model.

DeepSeek did this by curating around 800k (600k reasoning, 200k non-reasoning) high-quality generations from DeepSeek-R1, and training Llama3 and Qwen2.5 models on them (Source: DeepSeek's R1 publication).

This is an efficient technique to "teach" smaller, pre-existing models how to reason like DeepSeek-R1.

Training

DeepSeek-R1 was largely trained using unsupervised reinforcement learning. This is an important achievement, because it means humans did not have to curate as much labeled supervised fine-tuning (SFT) data.

DeepSeek-R1-Zero, DeepSeek-R1's predecessor, was fine-tuned using only reinforcement learning. However, it had issues with readability and language mixing.

DeepSeek eventually arrived on a multi-stage training pipeline for R1 that mixed SFT and RL techniques to maintain RL novelty and cost benefits while addressing the shortcomings of DeepSeek-R1-Zero.

More detailed info on training can be found in DeepSeek's R1 publication.

Use DeepSeek R1 models locally from your own code

You can leverage LM Studio's APIs to call DeepSeek R1 models from your own code.

Here are some relevant documentation links:

- LM Studio API documentation - API reference

- OpenAI compatibility mode - swap out the base URL and reuse your OpenAI client code

- Running LM Studio headlessly - call LM Studio's local server without the GUI

lms: LM Studio's CLI

Even More

- Download the latest LM Studio from https://lmstudio.ai/download.

- New to LM Studio? Head over to the documentation: Getting Started with LM Studio.

- For discussions and community, join our Discord server: https://discord.gg/aPQfnNkxGC

- If you want to use LM Studio at your organization at work, get in touch: LM Studio @ Work