MCP in LM Studio

LM Studio 0.3.17 introduces Model Context Protocol (MCP) support, allowing you to connect your favorite MCP servers to the app and use them with local models.

LM Studio supports both local and remote MCP servers. You can add MCP servers by editing the app's mcp.json file or, when available, via the new "Add to LM Studio" button.

Also new in this release:

- Support for 11 new languages, thanks to our community localizers. LM Studio is now available in 33 languages.

- Many bug fixes, as well as improvements to the UI, including a new theme: 'Solarized Dark'.

Upgrade via in-app update, or from https://lmstudio.ai/download.

Add MCP servers to LM Studio

Model Context Protocol (MCP) is a set of interfaces for providing LLMs access to tools and other resources. It was originally introduced by Anthropic and is developed on GitHub.

Terminology:

- "MCP Server": a program that provides tools and access to resources. For example, Stripe, GitHub, and Notion provide MCP servers.

- "MCP Host": an application (like LM Studio or Claude Desktop) that connects to MCP servers and makes their resources available to models.
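MCP hosts and servers communicate using JSON-RPC 2.0 messages. A host finds out which tools a server offers by sending a tools/list request, and it calls one of them with tools/call. The sketch below only builds and prints those two request messages. It does not connect to anything. The tool name model_search and its arguments are hypothetical placeholders and do not come from any real server.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC request ids only need to be unique per connection; a counter suffices.
_next_id = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke a tool by name with model-chosen arguments. "model_search" and its
# arguments are hypothetical placeholders, not a real server's tool schema.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "model_search", "arguments": {"query": "qwen", "limit": 5}},
)

for message in (list_tools, call_tool):
    print(json.dumps(message))
```

A real session starts with an initialize handshake and runs over a transport. That is usually stdio for servers the host launches as local processes, or HTTP for remote servers. Both are left out here.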

Install new servers: mcp.json

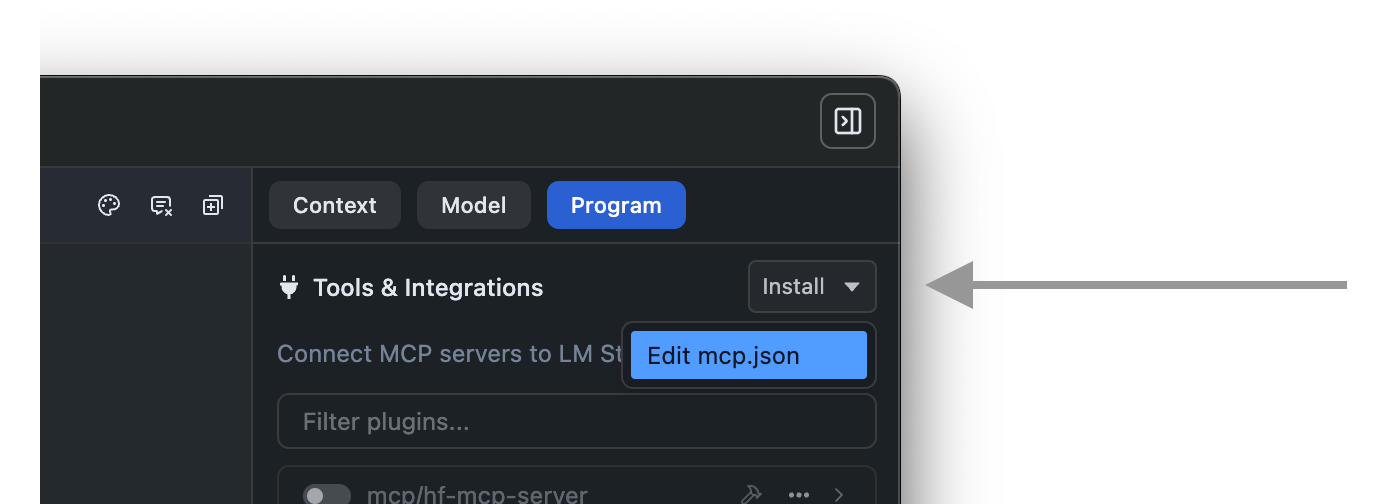

Switch to the "Program" tab in the right-hand sidebar, then click Install > Edit mcp.json.

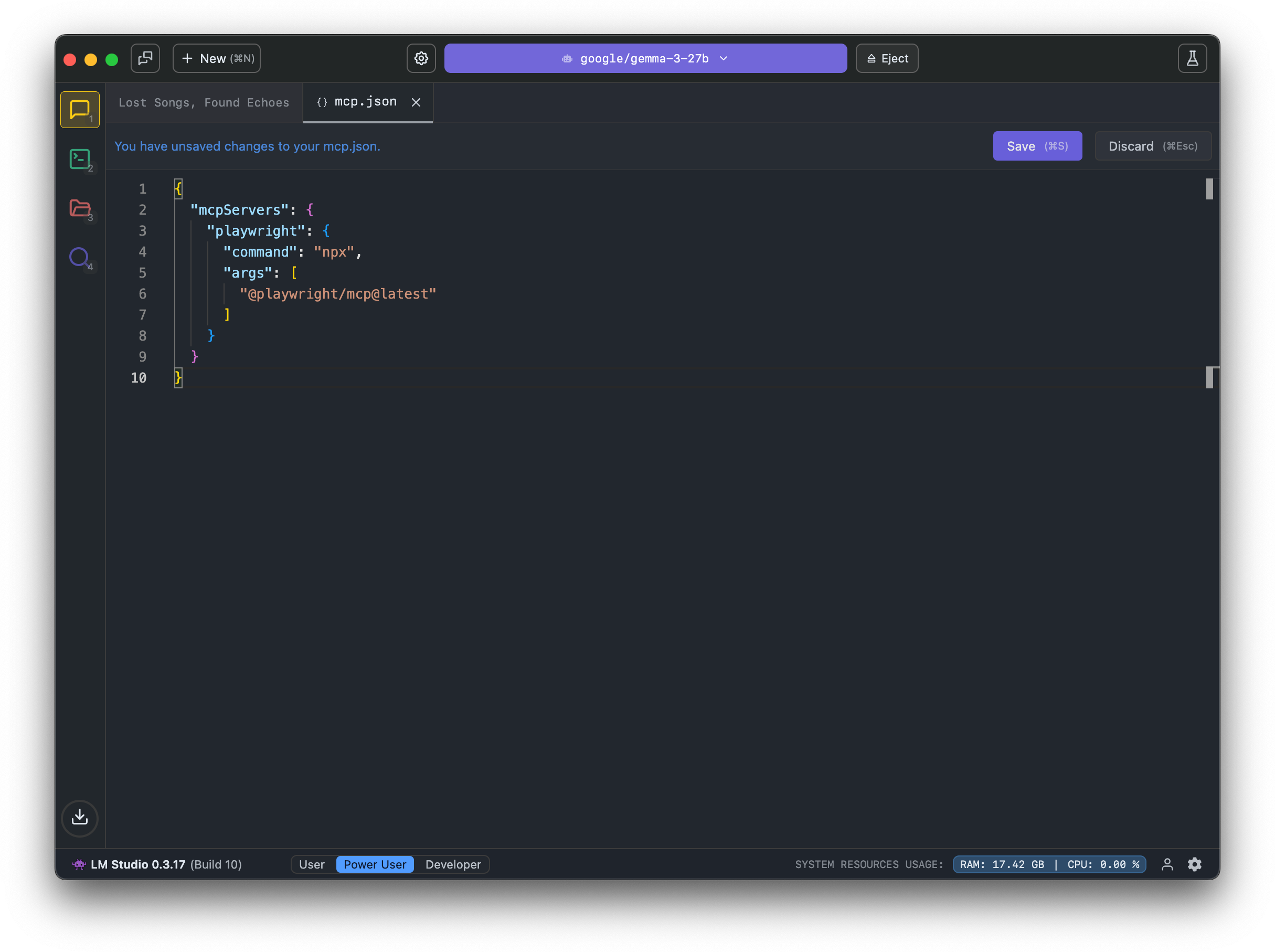

This will open the mcp.json file in the in-app editor. You can add MCP servers by editing this file.

Edit mcp.json using the in-app editor

LM Studio currently follows Cursor's mcp.json notation.
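In Cursor's notation, every server sits under a top-level mcpServers object and is keyed by its name. A local server has a command that the host launches, with optional args and env. A remote server has a url, with optional headers. The sketch below sorts entries into local and remote on that basis. We assume LM Studio accepts the same keys. The helper describe_servers and the server my-local-server are our own illustrations and are not part of LM Studio.

```python
import json


def describe_servers(config: dict) -> dict[str, str]:
    """Classify each entry of a Cursor-style mcp.json as 'local' or 'remote'.

    Assumes the Cursor convention: a local server has a "command" to launch,
    a remote server has a "url" to connect to.
    """
    servers = config.get("mcpServers")
    if not isinstance(servers, dict):
        raise ValueError('mcp.json must contain a top-level "mcpServers" object')

    kinds = {}
    for name, entry in servers.items():
        if "command" in entry:
            kinds[name] = "local"
        elif "url" in entry:
            kinds[name] = "remote"
        else:
            raise ValueError(f'server "{name}" needs either "command" or "url"')
    return kinds


# "my-local-server" and "some-mcp-package" are hypothetical placeholders.
example = json.loads("""
{
  "mcpServers": {
    "hf-mcp-server": {
      "url": "https://huggingface.co/mcp",
      "headers": {"Authorization": "Bearer <YOUR_HF_TOKEN>"}
    },
    "my-local-server": {
      "command": "npx",
      "args": ["-y", "some-mcp-package"]
    }
  }
}
""")

print(describe_servers(example))
```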

Example MCP to try: Hugging Face MCP Server

This MCP server provides access to functions like model and dataset search.

    {
      "mcpServers": {
        "hf-mcp-server": {
          "url": "https://huggingface.co/mcp",
          "headers": {
            "Authorization": "Bearer <YOUR_HF_TOKEN>"
          }
        }
      }
    }

You will need to replace <YOUR_HF_TOKEN> with your actual Hugging Face token.
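The in-app editor is the recommended way to edit mcp.json. If you prefer a script, the sketch below merges the Hugging Face entry into an existing mcp.json and leaves every other server alone. You pass it the file path (on macOS and Linux this is ~/.lmstudio/mcp.json) and the token. The function name add_hf_server is hypothetical.

```python
import json
from pathlib import Path

HF_ENTRY_NAME = "hf-mcp-server"
HF_MCP_URL = "https://huggingface.co/mcp"


def add_hf_server(config_path: Path, token: str) -> dict:
    """Hypothetical helper: merge the Hugging Face MCP server into mcp.json.

    Existing servers are preserved; an existing "hf-mcp-server" entry is
    overwritten. Returns the resulting configuration.
    """
    if config_path.exists():
        # Treat an empty file as an empty configuration.
        config = json.loads(config_path.read_text(encoding="utf-8") or "{}")
    else:
        config = {}

    servers = config.setdefault("mcpServers", {})
    servers[HF_ENTRY_NAME] = {
        "url": HF_MCP_URL,
        "headers": {"Authorization": f"Bearer {token}"},
    }

    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, you could call add_hf_server(Path.home() / ".lmstudio" / "mcp.json", os.environ["HF_TOKEN"]). Here HF_TOKEN is an environment variable name picked for this example, so the token never appears in the script itself.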

Be cautious

Never install MCPs from untrusted sources.

Some MCP servers can run arbitrary code, access your local files, and use your network connection. Always be cautious when installing and using MCP servers. If you don't trust the source, don't install it.

Tool call confirmations

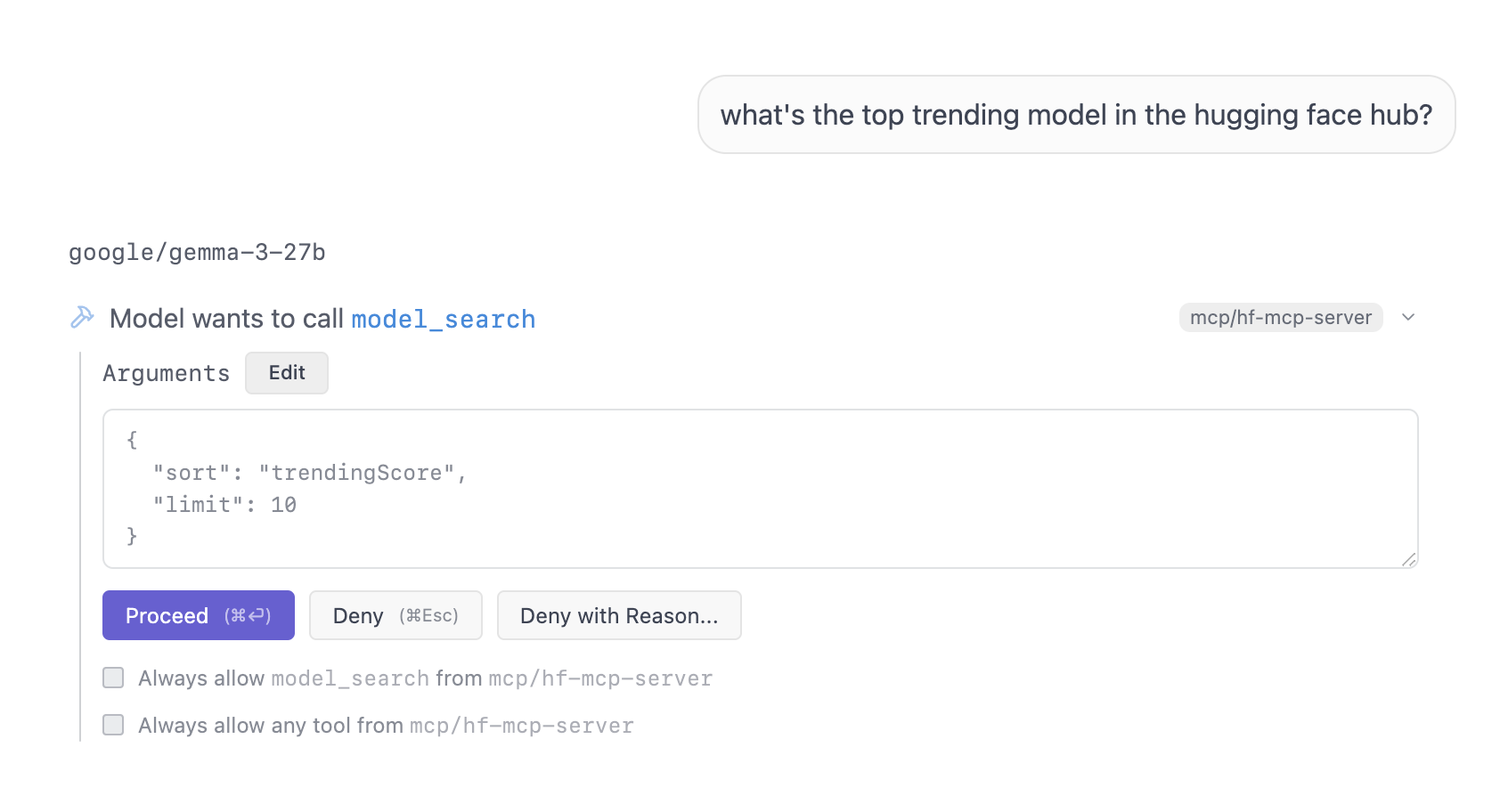

When a model calls a tool, LM Studio shows you a confirmation dialog. This lets you review the tool call's arguments, and edit them if needed, before the tool runs.

Tool call confirmation dialog

You can choose to always allow a given tool or allow it only once.

If you choose to always allow a tool, LM Studio will not show the confirmation dialog for that tool in the future. You can manage this later in App Settings > Tools & Integrations.
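The confirmation behavior described above comes down to a small piece of state: the set of tools the user has chosen to always allow, which is checked before each call. The sketch below models that flow for illustration only. It is not LM Studio's implementation. The names ToolPermissions, Decision and the ask callback are our own. The ask callback stands in for the dialog and can return edited arguments. The DENY option is an assumption, since the text only describes the two allow choices.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class Decision(Enum):
    ALLOW_ONCE = "allow_once"
    ALWAYS_ALLOW = "always_allow"
    DENY = "deny"  # assumed: rejecting the call


# Stand-in for the confirmation dialog: receives the tool name and proposed
# arguments, returns the user's decision and the (possibly edited) arguments.
AskFn = Callable[[str, dict], tuple[Decision, dict]]


@dataclass
class ToolPermissions:
    always_allowed: set[str] = field(default_factory=set)

    def authorize(self, tool: str, args: dict, ask: AskFn) -> Optional[dict]:
        """Return the arguments to execute with, or None if the call is denied."""
        if tool in self.always_allowed:
            return args  # no dialog for tools the user already trusts
        decision, edited_args = ask(tool, args)
        if decision is Decision.DENY:
            return None
        if decision is Decision.ALWAYS_ALLOW:
            self.always_allowed.add(tool)  # skip the dialog next time
        return edited_args

    def revoke(self, tool: str) -> None:
        """Remove a tool from the always-allowed set."""
        self.always_allowed.discard(tool)


# Example: the first call shows the "dialog"; the user edits an argument and
# picks "always allow", so the second call skips the dialog entirely.
prompts = []


def fake_dialog(tool: str, args: dict) -> tuple[Decision, dict]:
    prompts.append(tool)
    return Decision.ALWAYS_ALLOW, {**args, "limit": 3}


perms = ToolPermissions()
first = perms.authorize("model_search", {"query": "qwen", "limit": 10}, fake_dialog)
second = perms.authorize("model_search", {"query": "llama", "limit": 10}, fake_dialog)
print(first, second, prompts)
```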

MCP support: more technical details

- When you save the mcp.json file, LM Studio will automatically load the MCP servers defined in it. We spawn a separate process for each MCP server.

- For local MCP servers that rely on npx or uvx (or any other program on your machine), you need to ensure those tools are installed and available in your system's PATH.

- mcp.json lives in ~/.lmstudio/mcp.json on macOS and Linux, and in %USERPROFILE%/.lmstudio/mcp.json on Windows. It's recommended to use the in-app editor to edit this file.
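A short script can check the PATH requirement and the file locations above. This sketch finds mcp.json in its documented location and lists every server whose command is not on PATH. It only reads the file and never starts a server. It assumes local servers declare a "command" key, as in Cursor's notation. The function names are hypothetical.

```python
import json
import os
import platform
import shutil
from pathlib import Path


def mcp_json_path() -> Path:
    """Documented location: ~/.lmstudio on macOS/Linux, %USERPROFILE%/.lmstudio on Windows."""
    if platform.system() == "Windows":
        return Path(os.environ["USERPROFILE"]) / ".lmstudio" / "mcp.json"
    return Path.home() / ".lmstudio" / "mcp.json"


def missing_commands(config: dict) -> dict[str, str]:
    """Map server name -> command for local servers whose command is not on PATH."""
    missing = {}
    for name, entry in config.get("mcpServers", {}).items():
        command = entry.get("command")  # remote (url-only) servers have none
        if command and shutil.which(command) is None:
            missing[name] = command
    return missing


def check_mcp_json(path: Path) -> None:
    """Print a report of servers whose command cannot be found."""
    if not path.exists():
        print(f"No mcp.json at {path}")
        return
    config = json.loads(path.read_text(encoding="utf-8"))
    for name, command in missing_commands(config).items():
        print(f'Server "{name}": "{command}" not found on PATH')
```

To check your own setup, call check_mcp_json(mcp_json_path()).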

If you're running into bugs, please open an issue on our bug tracker: https://github.com/lmstudio-ai/lmstudio-bug-tracker/issues.

Developers: Create an 'Add to LM Studio' Button

We're also introducing a one-click way to add MCP servers to LM Studio using a deeplink button.

Install link generator

Enter your MCP JSON entry to generate a deeplink for the Add to LM Studio button.


Example

Try to copy and paste the following into the link generator above.

    {
      "hf-mcp-server": {
        "url": "https://huggingface.co/mcp",
        "headers": {
          "Authorization": "Bearer <YOUR_HF_TOKEN>"
        }
      }
    }

You will need to replace <YOUR_HF_TOKEN> with your actual Hugging Face token.
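For reference, here is a sketch of what a link generator like this might do. It rests on an assumption that you should check against the generator's real output: that the deeplink is an lmstudio://add_mcp URL whose query parameters carry the server name and the server's JSON config, base64-encoded. The function name make_install_link is hypothetical.

```python
import base64
import json
from urllib.parse import urlencode


def make_install_link(entry: dict) -> str:
    """Build an install deeplink from a single-server entry like the example above.

    ASSUMPTION: the format is lmstudio://add_mcp?name=<name>&config=<base64 JSON>;
    confirm against the official link generator before publishing a button.
    """
    if len(entry) != 1:
        raise ValueError("expected exactly one server entry")
    name, config = next(iter(entry.items()))
    # Compact JSON keeps the encoded link short.
    raw = json.dumps(config, separators=(",", ":")).encode("utf-8")
    encoded = base64.b64encode(raw).decode("ascii")
    # urlencode percent-escapes base64 characters such as "+", "/" and "=".
    return "lmstudio://add_mcp?" + urlencode({"name": name, "config": encoded})


entry = {
    "hf-mcp-server": {
        "url": "https://huggingface.co/mcp",
        "headers": {"Authorization": "Bearer <YOUR_HF_TOKEN>"},
    }
}
link = make_install_link(entry)
print(link)
```

Keep the placeholder token in any link you share. Base64 is an encoding, not encryption, so anyone can decode a real token from the link.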

One more thing...

Connecting MCP servers is easy. But what about creating your own tools and custom resources for your models?

- Sign up for the upcoming private beta.

0.3.17 - Full Release Notes

Build 10

- Added a Chat Appearance setting to display message generation stats on last message only, in tooltips only, or on all applicable messages

- Token count now includes system prompt & tool definitions

- Show 'open in browser' button for LLM messages that have a URL in the content.

- Be mindful: LLMs may generate untrusted URLs. Always verify links before clicking.

Build 9

- Enable MCP by default

- Cmd + Shift + E on Mac or Ctrl + Shift + E on PC always opens the system prompt editor for the current chat

Build 8

- When the downloads panel is open in a new window, you can now pin it on top of other windows (right-click the panel body)

- Adds the following languages, thanks to our community localizers!

- Malayalam (ml) @prasanthc41m

- Thai (th) @gnoparus

- Bosnian (bs) @0haris0

- Bulgarian (bg) @DenisZekiria

- Hungarian (hu) @Mekemoka

- Bengali (bn) @AbiruzzamanMolla

- Catalan (ca) @Gopro3010

- Finnish (fi) @reinew

- Greek (gr) @ilikecatgirls

- Romanian (ro) @alexandrughinea

- Swedish (sv) @reinew

- Fixed a bug where entries could be duplicated when selecting a draft model for speculative decoding

Build 7

- Added thinking block preview 'vignette', with an option to disable in chat appearance settings

- Set default domain for "Qwen3 Embedding" models to Text Embedding

- Added a --stats flag to the lms chat command to show prediction statistics (Thanks @Yorkie)

- [Windows][ROCm] Strix Halo (AMD Ryzen AI PRO 300 series) support

- [Windows] Add CPU name to hardware page

Build 6

- Stream tool call argument tokens to the UI as they are generated

- Fixed bugs with scrolling up while the model is generating

- Fixed a bug where tool permission dialog wouldn't auto-scroll to the bottom of the chat

Build 5

- To reduce new user confusion, the "change role" and "insert" buttons will now be hidden by default on new installs. You can right-click the send button to toggle them on or off.

- Fixed a bug where MCP tools that don't provide a parameters object would not work correctly.

- Fixed a bug where ongoing tool calls would hang indefinitely if an MCP server reloaded.

Build 4

- [MCP Beta] Fixes a crash when a tool call's arguments contain arrays or objects

Build 3

- Fixed a bug where engine updates can sometimes get stuck

Build 2

- Improved full-sized DeepSeek-R1 tool calling reliability

- Button to pop out downloads panel to a new window.

Build 1

- New Theme: Solarized Dark.

- Set it in Settings > General or by pressing [⌘/Ctrl K + T].

- Fixed model catalog sorting by timestamp, likes, and downloads not working as expected.

- Fixed placeholder text in model deletion dialog.

- Fixed MLX models showing up on Windows.

- Fixed a bug with the header bar reappearing on the no chats page.

- Fixed a bug where the delete chat dialog would block the chat UI if quickly dismissed with Escape.