LM Studio 0.3.0

We're incredibly excited to finally share LM Studio 0.3.0 🥳.

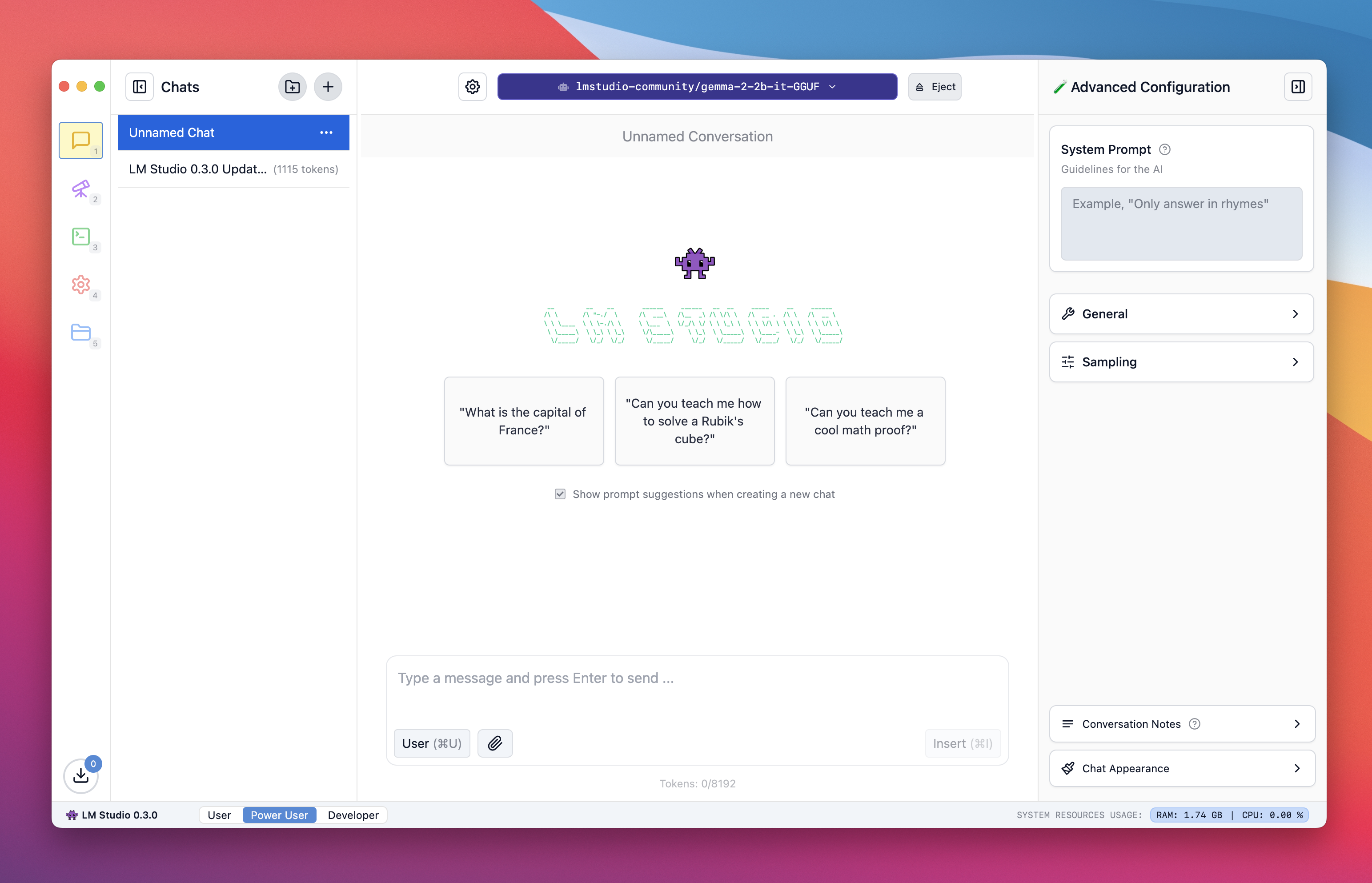

The new chat page in light mode in LM Studio 0.3.0

LM Studio

Since its inception, LM Studio has packaged together a few elements for making the most of local LLMs when you run them on your computer:

- A desktop application that runs entirely offline and has no telemetry

- A familiar chat interface

- Search & download functionality (via Hugging Face 🤗)

- A local server that can listen on OpenAI-like endpoints

- Systems for managing local models and configurations

With this update, we've improved upon, deepened, and simplified many of these aspects through what we've learned from over a year of running local LLMs.

Download LM Studio for Mac, Windows (x86 / ARM), or Linux (x86) from https://lmstudio.ai.

What's new in LM Studio 0.3.0

Chat with your documents

LM Studio 0.3.0 comes with built-in functionality to provide a set of documents to an LLM and ask questions about them. If a document is short enough (i.e., if it fits in the model's "context"), LM Studio will add the file contents to the conversation in full. This is particularly useful for models that support long context, such as Meta's Llama 3.1 and Mistral Nemo.

If the document is very long, LM Studio will opt to use "Retrieval Augmented Generation", frequently referred to as "RAG". RAG means attempting to fish out relevant bits of a very long document (or several documents) and providing them to the model for reference. This technique sometimes works really well, but sometimes it requires some tuning and experimentation.

Tip for successful RAG: provide as much context in your query as possible. Mention terms, ideas, and words you expect to be in the relevant source material. This will often increase the chance the system will provide useful context to the LLM. As always, experimentation is the best way to find what works best.
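
To make the two paths above more concrete (full-text inclusion versus retrieval), here is a minimal Python sketch. It is not LM Studio's actual implementation: the one-token-per-word estimate, the fixed chunk size, and the word-overlap scoring are simplifying assumptions, and every function name is hypothetical. It does show why a query that mentions terms from the source material tends to pull in more useful chunks.

```python
import re


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def split_into_chunks(text: str, chunk_words: int = 50) -> list[str]:
    # Split the document into consecutive chunks of at most `chunk_words` words.
    words = text.split()
    return [
        " ".join(words[i:i + chunk_words])
        for i in range(0, len(words), chunk_words)
    ]


def overlap_score(chunk: str, query: str) -> int:
    # Count the distinct query words that also appear in the chunk.
    chunk_terms = set(re.findall(r"\w+", chunk.lower()))
    query_terms = set(re.findall(r"\w+", query.lower()))
    return len(chunk_terms & query_terms)


def build_context(document: str, query: str, context_budget: int, top_k: int = 2) -> str:
    # Short document: include it in full.
    if estimate_tokens(document) <= context_budget:
        return document
    # Long document: keep only the chunks that best match the query.
    chunks = split_into_chunks(document)
    ranked = sorted(chunks, key=lambda c: overlap_score(c, query), reverse=True)
    return "\n---\n".join(ranked[:top_k])
```

A query such as "refund policy details" scores higher against a chunk that contains "refund" and "policy" than a vague query like "what does it say?", which is the intuition behind the tip above.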

OpenAI-like Structured Output API

OpenAI recently announced a JSON-schema based API that can result in reliable JSON outputs. LM Studio 0.3.0 supports this with any local model that can run in LM Studio! We've included a code snippet for doing this right inside the app. Look for it in the Developer page, on the right-hand pane.
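
For readers who want a feel for the request shape before opening the app, below is a hedged Python sketch using only the standard library. The `response_format` layout follows OpenAI's published Structured Outputs format. The base URL (`http://localhost:1234/v1`), the model identifier, and the example schema are assumptions for illustration; the snippet in the Developer page is the authoritative version.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"  # assumption: local server address and port

# Illustrative schema (an assumption, not taken from the app).
JOKE_SCHEMA = {
    "type": "object",
    "properties": {
        "setup": {"type": "string"},
        "punchline": {"type": "string"},
    },
    "required": ["setup", "punchline"],
}


def build_structured_request(model: str, prompt: str, schema: dict) -> dict:
    # Build an OpenAI-style chat request that asks for JSON matching `schema`.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "reply", "strict": True, "schema": schema},
        },
    }


def send_chat_request(payload: dict, base_url: str = BASE_URL) -> dict:
    # POST the payload to the chat completions endpoint and parse the reply.
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# "your-model-identifier" is a placeholder for whichever model you have loaded.
payload = build_structured_request("your-model-identifier", "Tell me a joke.", JOKE_SCHEMA)
# With the server running:
#   reply = send_chat_request(payload)
#   joke = json.loads(reply["choices"][0]["message"]["content"])
```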

UI themes

LM Studio first shipped in May 2023 in a dark retro theme, complete with Comic Sans sprinkled in for good measure. The OG dark theme held strong, and LM Studio 0.3.0 introduces 3 additional themes: Dark, Light, Sepia. Choose "System" to automatically switch between Dark and Light, depending on your system's dark mode settings.

Automatic load parameters, but also full customizability

Some of us are well versed in the nitty gritty of LLM load and inference parameters. But many of us, understandably, can't be bothered. LM Studio 0.3.0 auto-configures everything based on the hardware you are running it on. If you want to pop open the hood and configure things yourself, LM Studio 0.3.0 has even more customizable options.

Pro tip: head to the My Models page and look for the gear icon next to each model. You can set per-model defaults that will be used anywhere in the app.

Serve on the network

If you head to the server page, you'll see a new toggle that says "Serve on Network". Turning this on will open up the server to requests from outside of 'localhost'. This means you can use the LM Studio server from other devices on the network. Combined with the ability to load and serve multiple LLMs simultaneously, this opens up a lot of new use cases.
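
As an illustration, once "Serve on Network" is on, another device could query the server with something like the Python sketch below. The IP address `192.168.1.50` is hypothetical, and the port (`1234`) and the OpenAI-like `/v1/models` path are assumptions; substitute whatever address and port your server page displays.

```python
import json
import urllib.request


def server_url(host: str, port: int = 1234, path: str = "/v1/models") -> str:
    # Build the URL of the server as seen from another device on the network.
    return f"http://{host}:{port}{path}"


def list_models(host: str, port: int = 1234, timeout: float = 5.0) -> list[str]:
    # Ask the server which models it currently exposes (OpenAI-like response shape).
    with urllib.request.urlopen(server_url(host, port), timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return [entry["id"] for entry in body.get("data", [])]


# From a laptop on the same network (hypothetical address):
#   print(list_models("192.168.1.50"))
```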

Folders to organize chats

Useful if you're working on multiple projects at once. You can even nest folders inside folders!

Multiple generations for each chat

LM Studio has had a "regenerate" feature for a while. Now, clicking "regenerate" keeps previous message generations, and you can easily page between them using familiar left and right arrows.

How to migrate your chats from LM Studio 0.2.31 to 0.3.0

To support features like multi-version regenerations we introduced a new data structure under the hood. You can migrate your pre-0.3.0 chats by going to Settings and clicking on "Migrate Chats". This will make a copy, and will not delete any old files.

Full list of updates

Completely Refreshed UI:

- Includes themes, spellcheck, and corrections.

- Built on top of lmstudio.js (TypeScript SDK).

- New chat settings sidebar design.

Basic RAG (Retrieval Augmented Generation):

- Drag and drop a PDF, .txt file, or other files directly into the chat window.

- Max file input size for RAG (PDF / .docx) increased to 30MB.

- RAG accepts any file type, but non-.pdf/.docx files are read as plain text.

Automatic GPU Detection + Offload:

- Distributes tasks between GPU and CPU based on your machine’s capabilities.

- Can still be overridden manually.
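
To give a rough sense of what a GPU/CPU split involves, here is a purely illustrative Python sketch that estimates how many model layers might fit in GPU memory. It is not how LM Studio decides: the function name, the per-layer size, and the reserved headroom are all hypothetical assumptions.

```python
def layers_to_offload(
    total_layers: int,
    layer_size_mb: float,
    free_vram_mb: float,
    reserve_mb: float = 512.0,
) -> int:
    # Keep some VRAM in reserve (assumed headroom), then fit as many
    # whole layers as possible; the remaining layers stay on the CPU.
    usable_mb = max(free_vram_mb - reserve_mb, 0.0)
    return min(total_layers, int(usable_mb // layer_size_mb))
```

For example, with 32 layers of roughly 200 MB each and 4096 MB of free VRAM, this estimate offloads 17 layers and leaves 15 on the CPU.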

Browse & Download "LM Runtimes":

- Download the latest LLM engines (e.g., llama.cpp) without updating the whole app.

- Available options: ROCm, AVX-only, with more to come.

Automatic Prompt Template:

- LM Studio reads the metadata from the model file and applies prompt formatting automatically.

New Developer Mode:

- View model load logs, configure multiple LLMs for serving, and share an LLM over the network (not just localhost).

- Supports OpenAI-like Structured Outputs with `json_schema`.

Folder Organization for Chats:

- Create folders to organize chats.

Prompt Processing Progress Indicator:

- Displays progress % for prompt processing.

Enhanced Model Loader:

- Easily configure load parameters (context, GPU offload) before model load.

- Ability to set defaults for every configurable parameter for a given model file.

- Improved model loader UI with a checkbox to control parameters.

Support for Embedding Models:

- Load and serve embedding models.

- Parallelization support for multiple models.
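
A hedged sketch of how a served embedding model might be used from Python: the request and response shapes follow the OpenAI embeddings format, while the base URL, port, and model identifier are assumptions. The cosine similarity helper is ordinary math and does not depend on the server.

```python
import json
import math
import urllib.request


def embed(texts: list[str], model: str, base_url: str = "http://localhost:1234/v1") -> list[list[float]]:
    # POST the texts to an OpenAI-like embeddings endpoint (assumed path).
    payload = json.dumps({"model": model, "input": texts}).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/embeddings",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return [item["embedding"] for item in body["data"]]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # Cosine of the angle between two vectors; 0.0 if either has zero length.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# With the server running and an embedding model loaded (placeholder identifier):
#   first, second = embed(["local LLMs", "running models offline"], "your-embedding-model")
#   print(cosine_similarity(first, second))
```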

Vision-Enabled Models:

- Image attachments in chats and API
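
For the API side, a common way to attach an image in OpenAI-style chat requests is a base64 data URL inside the message content. The Python sketch below builds such a message; treating this as the format the local server accepts is an assumption, and the helper name is hypothetical.

```python
import base64


def image_message(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    # Encode the raw image bytes as a base64 data URL and pair them with a text prompt.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


# Typical use: read a file from disk and place the message in a chat request.
#   with open("photo.png", "rb") as f:
#       message = image_message("What is in this picture?", f.read())
```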

Show Conversation Token Count:

- Displays the current tokens and total context.

Prompt Template Customization:

- Ability to override prompt templates.

- Edit the "Jinja" template or manually provide prefixes/suffixes.

- Prebuilt chat templates (ChatML, Alpaca, blank, etc.).
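
To illustrate what a prompt template does, the Python sketch below renders a list of messages in the ChatML layout, one of the prebuilt templates mentioned above. The function is a hand-written stand-in for illustration only; in the app, formatting is driven by the model's metadata or by the Jinja template you provide.

```python
def format_chatml(messages: list[dict], add_generation_prompt: bool = True) -> str:
    # Wrap each message in ChatML markers: <|im_start|>role ... <|im_end|>
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n"
        for m in messages
    ]
    # Optionally open an assistant turn so the model continues from there.
    if add_generation_prompt:
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = format_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Hi"},
])
```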

Conversation Management:

- Add conversation notes.

- Clone and branch a chat on a specific message.

Customizable Chat Settings:

- Choose chat style and font size.

- Remember settings for each model on load.

Initial Translations:

- Support for Spanish, German, Russian, Turkish, Norwegian.

- Community contributions are welcome for missing strings and new languages!

- https://github.com/lmstudio-ai/localization

Subtitles for Config Parameters:

- Descriptive subtitles for every configuration parameter.

Even More

- Download the latest LM Studio from https://lmstudio.ai/download.

- New to LM Studio? Head over to the documentation: Getting Started with LM Studio.

- For discussions and community, join our Discord server: https://discord.gg/aPQfnNkxGC

- If you want to use LM Studio at your organization, get in touch: LM Studio @ Work